As expected by mid-year, we’re much further along with AI tool development (Generative AI tools in particular) than one could have imagined back in January 2023, when I launched this series with AI Tools Part 1: Why We Need Them, followed in March by the updates in AI Tools Part 2: A Deeper Dive. Generative AI has grown to a point of practical usability in many cases, and it has advanced at a rate where we can more clearly see the path it’s heading in aiding production and post processes for the video, imaging and creative content industries.

But as much as I’m excited about sharing the latest technological updates with our readers this month, I also need to open up this forum to address the elephant in the room: “Is AI going to take my job?” All we have are facts and opinions… and the line that divides them is pretty blurry. Mostly because we can’t really predict the future of AI development as it’s happening so fast. But for now, let’s take a minute and look at where we are – and what AI really is.

I’ve seen a lot of talk about what AI is exactly, and why it is called “Artificial Intelligence” if it requires human interaction to make it work correctly.

The above quoted response from Stephen Ford on Quora is probably the most succinct response I’ve seen to this question – fueled with a bit of speculation and sci-fi novel appeal. But in the end, we really don’t know the outcome of what we’re developing right now. You can read his whole response in the link.

Most everyone in the developed world has already been “feeding the machine” for the past several decades, in one form or another. Ever since we started communicating electronically, our clicks, words, images and opinions have been collected and used for data harvesting and marketing back to us. At least since the early 90s, everything you buy at the store, or that Crapaccino you get at Starbux, or the shows you’ve watched on Cable or Dish network, or even content you shared on AOL and searches made in Yahoo has been collected and used to target messaging back to you in the form of direct mail or other advertising materials. (I used to create much of it for ad agencies back in the day.) The only difference is that, since everyone is now connected to the Internet many times over (IoT devices included), it’s happening at lightning speed.

And while it might feel like it, none of this has happened overnight. It’s just that developers are now taking all this data, applying it to machine learning models and spitting it out in various forms. And yes, the machines are learning faster and their results are getting more accurate.

But I think it’s equally important to understand HOW all this works, to best answer the “Whys”.

How does Generative AI work?

A good explanation in layman’s terms is provided by Pinar Seyhan Demirdag of Seyhan Lee in her brilliant AI course on LinkedIn:

https://www.linkedin.com/learning/what-is-generative-ai/how-generative-ai-works

Generative AI enables users to quickly generate new content based on a variety of inputs. Inputs and outputs to these models can include text, images, sounds, animation, 3D models, or other types of data.

NVIDIA’s website explains how Generative AI models work:

Generative AI models use neural networks to identify the patterns and structures within existing data to generate new and original content.

One of the breakthroughs with generative AI models is the ability to leverage different learning approaches, including unsupervised or semi-supervised learning for training. This has given organizations the ability to more easily and quickly leverage a large amount of unlabeled data to create foundation models. As the name suggests, foundation models can be used as a base for AI systems that can perform multiple tasks.

Examples of foundation models include GPT-3 and Stable Diffusion, which allow users to leverage the power of language. For example, popular applications like ChatGPT, which draws from GPT-3, allow users to generate an essay based on a short text request. On the other hand, Stable Diffusion allows users to generate photorealistic images given a text input.

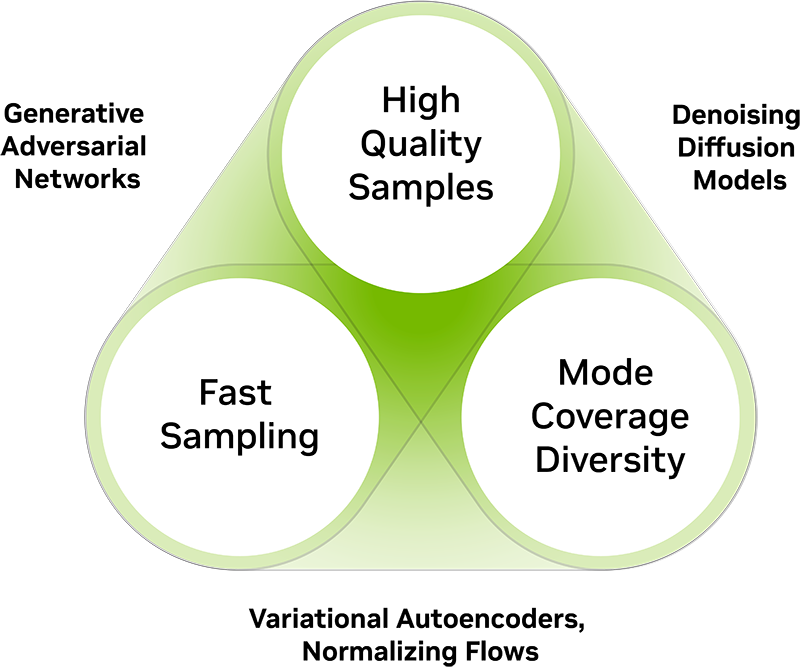

The three key requirements of a successful generative AI model are:

- Quality: Especially for applications that interact directly with users, having high-quality generation outputs is key. For example, in speech generation, poor speech quality is difficult to understand. Similarly, in image generation, the desired outputs should be visually indistinguishable from natural images.

- Diversity: A good generative model captures the minority modes in its data distribution without sacrificing generation quality. This helps reduce undesired biases in the learned models.

- Speed: Many interactive applications require fast generation, such as real-time image editing to allow use in content creation workflows.

So just to be clear – this isn’t a case of “search/copy/paste” from collected content harvested across the internet. It’s much more complex, and will continue to be.

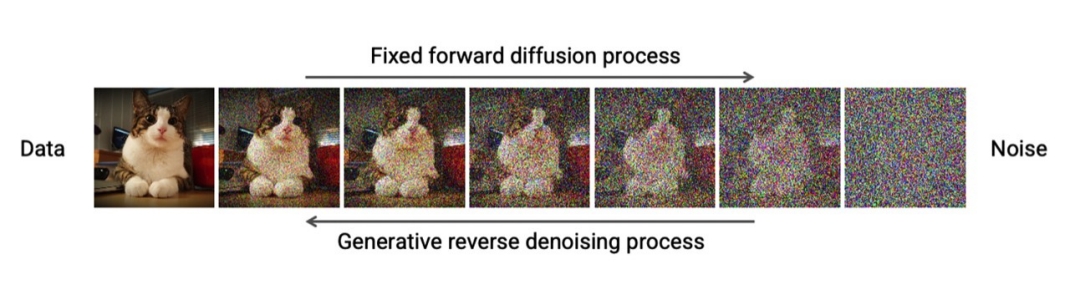

Regarding diffusion models (Midjourney, DALL-E, Stable Diffusion, etc.), this explanation is provided:

- Diffusion models: Also known as denoising diffusion probabilistic models (DDPMs), diffusion models are generative models that determine vectors in latent space through a two-step process during training. The two steps are forward diffusion and reverse diffusion. The forward diffusion process slowly adds random noise to training data, while the reverse process reverses the noise to reconstruct the data samples. Novel data can be generated by running the reverse denoising process starting from entirely random noise.
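To make the forward/reverse idea a little more concrete, here’s a minimal Python sketch of the noising step on a tiny list of numbers standing in for an image. It’s a toy under stated assumptions, not how Midjourney or Stable Diffusion are actually built: the function names (`make_alpha_bars`, `forward_diffuse`, `recover_x0`) are my own, and the schedule values (1,000 steps, noise levels from 0.0001 to 0.02) are illustrative. The “reverse” function here cheats by being handed the exact noise that was added; in a real diffusion model, a trained neural network has to estimate that noise, and the denoising happens over many small steps.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal remaining after each step (linear noise schedule)."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        # Noise level for this step, rising linearly from beta_start to beta_end.
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Forward diffusion: blend clean data x0 with Gaussian noise at step t."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


def recover_x0(xt, t, alpha_bars, predicted_noise):
    """Idealized reverse step: undo the noising, given an estimate of the noise.

    Assumption: a real model predicts the noise with a neural network;
    here the caller simply supplies it.
    """
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a)
            for x, n in zip(xt, predicted_noise)]


# Usage: noise a four-value "image" halfway through the schedule, then undo it.
alpha_bars = make_alpha_bars()
clean = [0.5, -1.0, 0.25, 0.8]
noisy, noise = forward_diffuse(clean, 500, alpha_bars, random.Random(0))
print("noisy:", noisy)
print("restored:", recover_x0(noisy, 500, alpha_bars, noise))
```

By the last step, almost none of the original signal is left, which is why generation can start from entirely random noise.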

But what about generative text models like ChatGPT?

An explanation in layman’s terms from Zapier.com’s blog helps make sense of it:

ChatGPT works by attempting to understand your prompt and then spitting out strings of words that it predicts will best answer your question, based on the data it was trained on.

Let’s actually talk about that training. It’s a process where the nascent AI is given some ground rules, and then it’s either put in situations or given loads of data to work through in order to develop its own algorithms.

GPT-3 was trained on roughly 500 billion “tokens,” which allow its language models to more easily assign meaning and predict plausible follow-on text. Many words map to single tokens, though longer or more complex words often break down into multiple tokens. On average, tokens are roughly four characters long. OpenAI has stayed quiet about the inner workings of GPT-4, but we can safely assume it was trained on much the same dataset since it’s even more powerful.
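The “roughly four characters per token” figure makes for a handy back-of-the-envelope estimate. Here’s a small Python sketch of it; `estimate_tokens` is a hypothetical helper of my own, not anything from OpenAI. Real tokenizers split text using a learned sub-word vocabulary, so actual counts will differ from this rule of thumb.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Rough token count using the ~4 characters per token rule of thumb.

    Assumption: this is only an average-based heuristic; a real tokenizer
    will give a different count for any particular piece of text.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


# Usage: estimate how many tokens a short prompt might use.
prompt = "Uma Thurman making a sandwich Tarantino style"
print(len(prompt), "characters is about", estimate_tokens(prompt), "tokens")
```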

All the tokens came from a massive corpus of data written by humans. That includes books, articles, and other documents across all different topics, styles, and genres—and an unbelievable amount of content scraped from the open internet. Basically, it was allowed to crunch through the sum total of human knowledge.

This humongous dataset was used to form a deep learning neural network—a complex, many-layered, weighted algorithm modeled after the human brain—which allowed ChatGPT to learn patterns and relationships in the text data and tap into the ability to create human-like responses by predicting what text should come next in any given sentence.

Though really, that massively undersells things. ChatGPT doesn’t work on a sentence level—instead, it’s generating text of what words, sentences, and even paragraphs or stanzas could follow. It’s not the predictive text on your phone bluntly guessing the next word; it’s attempting to create fully coherent responses to any prompt. (follow the link to read further)
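If “predicting what text should come next” still feels abstract, here’s a deliberately tiny Python sketch of the idea. To be clear about the assumptions: this is NOT how ChatGPT works internally. It’s much closer to the blunt phone-style predictor mentioned above, simply counting which word most often followed another in a sample sentence, where ChatGPT uses a huge neural network working on tokens. The function names and sample text are my own.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words followed it in the training text."""
    model = defaultdict(Counter)
    words = corpus.lower().split()
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=8):
    """Build a phrase by repeatedly appending the most likely next word."""
    words = [start.lower()]
    for _ in range(max_words - 1):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Usage: "train" on one sentence, then ask what tends to follow a word.
model = train_bigram_model("the cat sat on the mat and the cat slept")
print(predict_next(model, "the"))
print(generate(model, "on", max_words=3))
```

Even this toy shows why the training data matters so much: the model can only ever suggest what it has already seen.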

But the bottom line is that HOW you use ChatGPT, Bard or any other generative text model determines the results you’ll get. In most cases, if you blindly ask them for help or information on a subject like you might with Google, without any kind of input or “training” on the topic, you’ll get sketchy results and incomplete or just plain wrong information.

Here’s a great start to understanding “Prompt Engineering” from the All About AI YouTube channel:

So is AI really going to take my job?

That depends on what you consider your job to be.

Are you doing only ONE TASK in your job, such as creating generic graphics or editing someone else’s marketing copy or scripts? Then chances are, eventually, yes. Jobs involving writing or editing, analysis, basic programming, design, content creation/conceptualization and even V.O. work are already at risk. Ref. Business Insider 4 June 2023

You must conform and adapt and diversify your abilities and embrace the change, or you will be found redundant.

My experiences so far have been quite the opposite – with a newfound creative vigor and excitement for what these new tools and technologies have to offer. I’ve reinvented myself so many times over the past 40+ years in my career (starting as an airbrush illustrator/mechanical engineering draftsman) and changed what I “do” all along the way as the technology changed course. And I’m still looking ahead to see how it’s going to change much more before I finally (if ever) decide to retire!

So make sure you’re constantly diversifying your capabilities and get in front of the wave NOW. Don’t wait for the inevitable before you make changes in your career/income stream.

Write your comments below at the end of this article and tell us your thoughts about AI and the industry.

Major AI Tool Updates

I’ve been working on getting you all a deeper dive with some updates on the major players in Generative AI tool development, and this month is NOT disappointing!

Adobe Photoshop (Beta) & Firefly AI Generative Fill

The public beta of Photoshop 2023 was released last month and I covered some details in a short article on the release so there might be a little redundancy in this section if you read that already. But it’s worth pointing out what a game changer this is.

From your Creative Cloud app, download the latest Photoshop (Beta) and start exploring the new capabilities within your own images using the Adobe Firefly Generative Fill.

Here’s more info and be sure to watch the demo video: https://www.adobe.com/products/photoshop/generative-fill.html

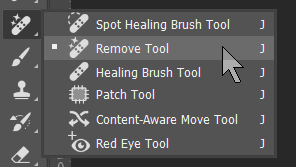

AI Remove Tool

I’m really excited about this new Remove Tool that is part of the healing brush palette.

It’s pretty straightforward. Apply it as you might the Spot Healing Brush tool over an object you want removed, and Presto!

Check out this city scene where I removed the cars and people in less than a minute! It’s quite amazing!

Firefly AI Generative Fill

It’s quite easy to use the Generative Fill – either to “fix” images, place new objects into a scene or expand the boundaries of an existing image. This is also called “outpainting” or “zooming-out” of an image.

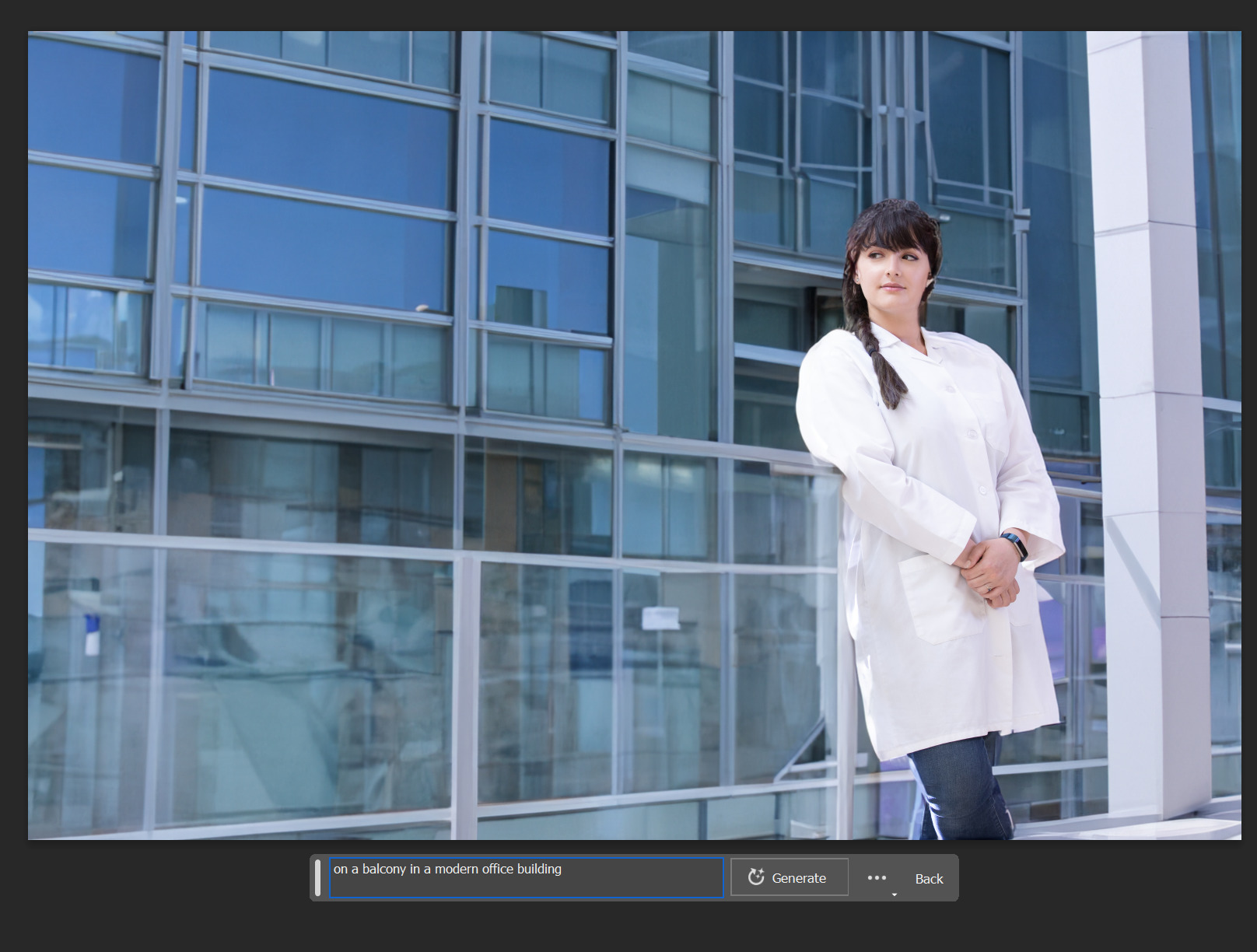

When working in marketing, you often need to utilize stock images for ads and brochures. Since I work for a biotech company at my day gig, I thought it was appropriate to look for some bioscience images in Adobe Stock, and I found one that’s a good example of an issue we often run into with stock photos. The image is perfect, except: what if the client wants to run an ad with lots of type on the side, or wants tradeshow booth graphics that fit a specific dimension? Usually we need to generate a fade off to the side or crop out the top/bottom to accommodate, and then it’s too busy for text on top.

The solution is really simple now. Expand the Canvas size of the image, select that negative space with just a slight overlap onto the image layer, then hit Generative Fill and let it do its magic.

The resulting image is about 95% of the way there – including the extension of the scientist’s gloves, arm and lab coat sleeve, as well as the DNA strand that runs vertically down the page. Very little additional work is needed to smooth it out for use in various marketing materials.

Another example was to use a photo that our team shot a few years ago of a scientist leaning against the railing at one of our HQ buildings on campus. I simply used the Object Select Tool to mask her out.

I reversed the selection and entered “on a balcony in a modern office building” in the Generative Fill panel and it produced a remarkable image – complete with reflections into the chrome and glass from her lab coat and matching the light direction and shadows. Literally two clicks and a little text prompt away from the original image.

Working with AI Generated Images

I used a Midjourney image to outpaint the edges to fill an iPhone screen. (You can read more about how the image was initially generated using ChatGPT for the prompt in the Midjourney section below)

I opened the image in Photoshop (Beta) and increased the Canvas size to the pixel dimensions of my iPhone. I then selected the “blank space” around the image and just let Photoshop do an unprompted Generative Fill and it created the results below:

Using Photoshop (Beta) Generative Fill to zoom out to the dimensions of the iPhone screen:

I was really impressed that it maintained the style of the original AI generated art and embellished on that “fantasy” imagery as it more than doubled in size.

For a good tutorial on using both the Remove Tool and Generative Fill to modify your images in the Photoshop (Beta), check out this video from my friend and colleague, Colin Smith from PhotoshopCAFE:

Midjourney 5.2 (with Zoom Out fill)

Some big changes come to Midjourney with its latest build, v5.2, and they’re worthy of a spotlight here.

With v5.2 the quality of the image results is much more photorealistic – perhaps at the sacrifice of the “fantasy art” and super creative images we’ve been used to in previous versions. But let’s just look at that quality a moment.

The first is from a year ago, June 2022, in Midjourney, when the quality of faces and hands and even the size of the rendered results were much lower; then comes v4 in February 2023, and then today, in June 2023. All are straight out of Midjourney (Discord).

My prompt has remained the same for all three of these images: “Uma Thurman making a sandwich Tarantino style”. (Don’t ask me why – it was just some obscure prompt that I thought would be funny at the time. I can’t recall if I was sober or not.) 😛

For the image I created for my first article in this series, AI Tools Part 1: Why We Need Them, back in January of this year, I used a prompt that was generated by ChatGPT.

“As the spaceship hurtled through the vast expanse of space, the crew faced a sudden malfunction that threatened to derail their mission to save humanity from a dying Earth. With limited resources and time running out, they must come together and use their unique skills and expertise to overcome the obstacles and ensure their survival.”

One of several results was the image I used (I added the text of course – Midjourney still messes up text horribly)

Using the exact same prompt today in v5.2 gave a very different result:

v5.2 Zoom-out Feature (Outpainting)

One of the most significant updates in v5.2 is the ability to “Zoom Out” of an image. It’s similar to how Generative Fill works in Photoshop (Beta), only the process isn’t as selective and you still have to guess at what Midjourney is going to create outside the boundaries of your initial image.

Currently, it only works with images generated in v5.2 and I couldn’t get it to work with uploaded images. This image was based off another prompt I did months ago – using another image to generate a more stylized result. I got a variation of images and chose this one to upscale to provide the Original pictured below on the left. I then used the Zoom Out 1.5x option to create the image in the center and Zoom Out 2x from there to generate the image on the right.

Here’s a great tutorial from Olivio Sarikas on how to use MJ v5.2 with the new Zoom-out options:

Taking other images created with v5.2, I used the Zoom Out option to further expand the image to create something completely different. This was a variety of images generated from the original up above.

Another example with the original on the left – and the multiple-stage Zoom out on the right:

It’s often surprising where Midjourney decides to take an image – often with multiple options to drill down further with variations and further zooming.

Wonder Studio

Wonder Dynamics has completed the closed, private beta of Wonder Studio that we’ve been playing with the past few months. It will be opening its doors to new users on June 29th and announcing its pricing structure soon as well.

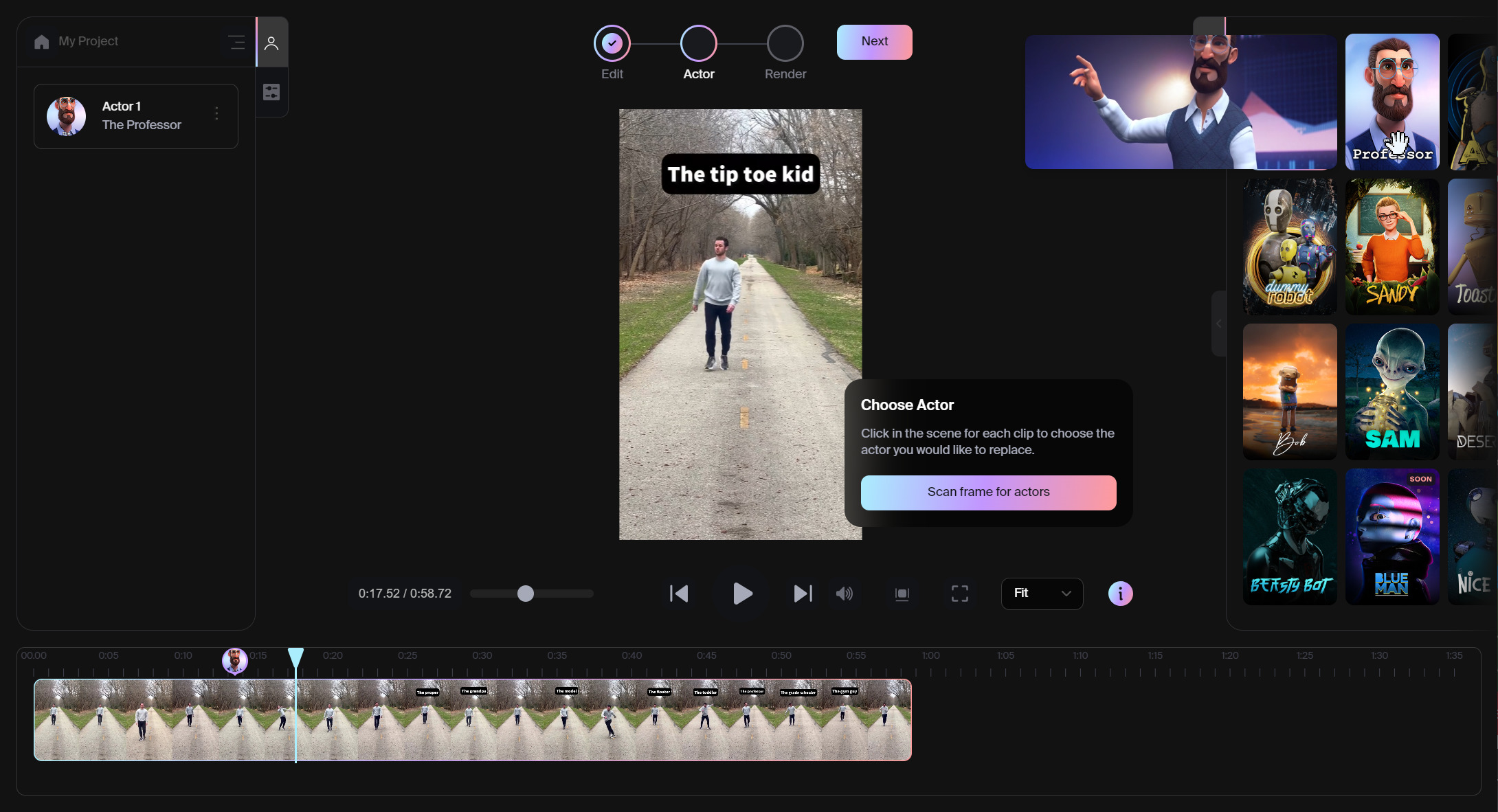

Wonder Studio is an AI tool that automatically animates, lights and composes CG characters into a live-action scene. Currently, you can only choose from pre-made characters, but in the future you’ll be able to add your own rigged 3D characters to animate on-screen to replace your actors on-camera – all without motion tracking markers and massive roto work.

It can produce a number of outputs for your production pipeline as well, such as a Clean Plate, Alpha Mask, Camera Track, MoCap, and even a Blender 3D file. I could immediately see the character alpha being useful for color work at minimum.

The workflow is really simple: upload your video to the Wonder Studio web portal and select an actor to track from your scene. Then select a 3D character from the library and set to render. Wonder Studio does all the work for you in the cloud.

I used this viral video from @DanielLaBelle on YouTube of his Various Ways People Walk as a test to see how close Wonder Studio could match his motion. Judge for yourself the side-by-side results. Note that this video took approximately 30-40 minutes to render fully.

You can see some artifacts and blurriness at times where the original actor was removed – or action scenes that didn’t quite capture the original actor completely and they “pop on screen” for a moment, like in this action scene posted by beta tester Solomon Jagwe on YouTube.

It’s still super impressive this was done all in Wonder Studio on a web browser! I can only imagine how this AI technology will improve over time.

ElevenLabs AI

A couple big updates from ElevenLabs – AI Speech Classifier and Voice Library

AI Speech Classifier: A Step Towards Transparency (from their website)

Today, we are thrilled to introduce our authentication tool: the AI Speech Classifier. This first-of-its-kind verification mechanism lets you upload any audio sample to identify if it contains ElevenLabs AI-generated audio.

The AI Speech Classifier is a critical step forward in our mission to develop efficient tracking for AI-generated media. With today’s launch, we seek to further reinforce our commitment to transparency in the generative media space. Our AI Speech Classifier lets you detect whether an audio clip was created using ElevenLabs. Please upload your sample below. If your file is over 1 minute long, only the first minute will be analysed.

A Proactive Stand against Malicious Use of AI

As creators of AI technologies, we see it as our responsibility to foster education, promote safe use, and ensure transparency in the generative audio space. We want to make sure that these technologies are not only universally accessible, but also secure. With the launch of the AI Speech Classifier, we seek to provide software to supplement our wider educational efforts in the space, like our guide on the safe and legal use of Voice Cloning.

Our goal at ElevenLabs is to produce safe tools that can create remarkable content. We believe that our status as an organization gives us the ability to build and enforce the safeguards which are often lacking in open source models. With today’s launch we also aim to empower businesses and institutions to leverage our research and technology to bolster their respective safeguards.

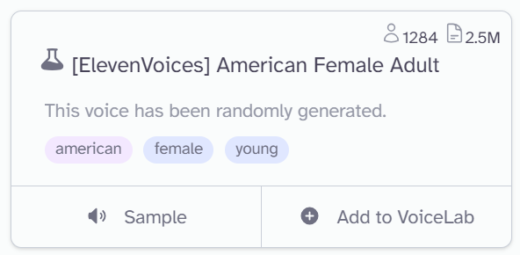

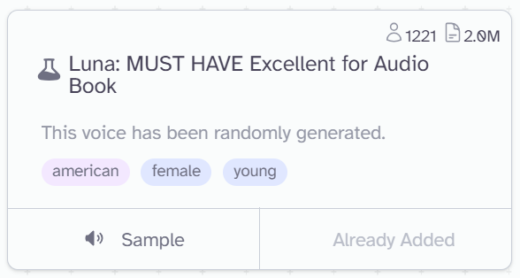

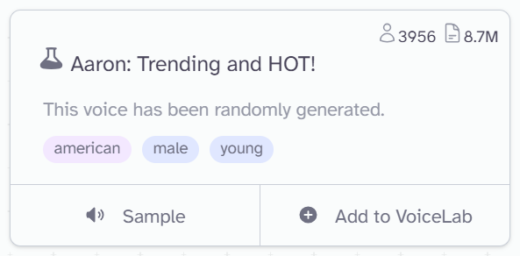

Voice Library is a community space for generating, sharing, and exploring a virtually infinite range of voices. Leveraging our proprietary Voice Design tool, Voice Library brings together a global collection of vocal styles for countless applications.

You can equally browse and use synthetic voices shared by others to uncover possibilities for your own use-cases. Whether you’re crafting an audiobook, designing a video game character, or adding a new dimension to your content, Voice Library offers unbounded potential for discovery. Hear a voice you like? Simply add it to your VoiceLab.

All the voices you find in Voice Library are purely artificial and come with a free commercial use license.

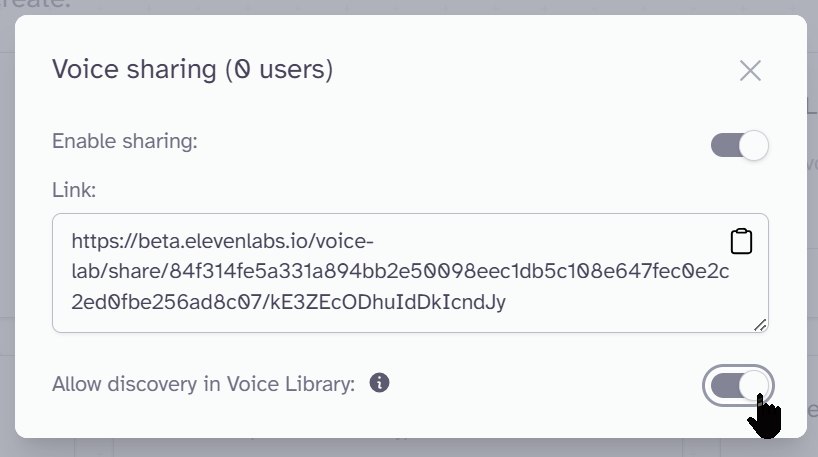

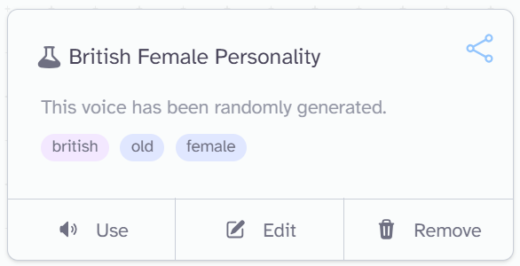

In addition to being sharable, your generated voices can now be part of the extensive Voice Library on the ElevenLabs site (provided you have a paid account at any level).

Sharing via Voice Library is easy:

- Go to VoiceLab

- Click the share icon on the voice panel

- Toggle enable sharing

- Toggle allow discovery in Voice Library

You can disable sharing at any time. When you do, your voice will no longer be visible in Voice Library, but users who already added it to their VoiceLab will keep their access.

Here are just a few examples currently available in the library of hundreds of voices generated and shared:

Okay – I made that last one… and provided the sample text for her to speak, of course! You can now find it in the Voice Library too 😀

AI Tools: The List You Need Now!

Be sure to check in with the ongoing thread AI Tools: The List You Need Now! as I update it regularly to keep it current and relevant.
