Everything changes next summer, when MetaHuman Animator will let you capture an actor’s performance with high fidelity using just an iPhone or a stereo helmet-mounted camera.

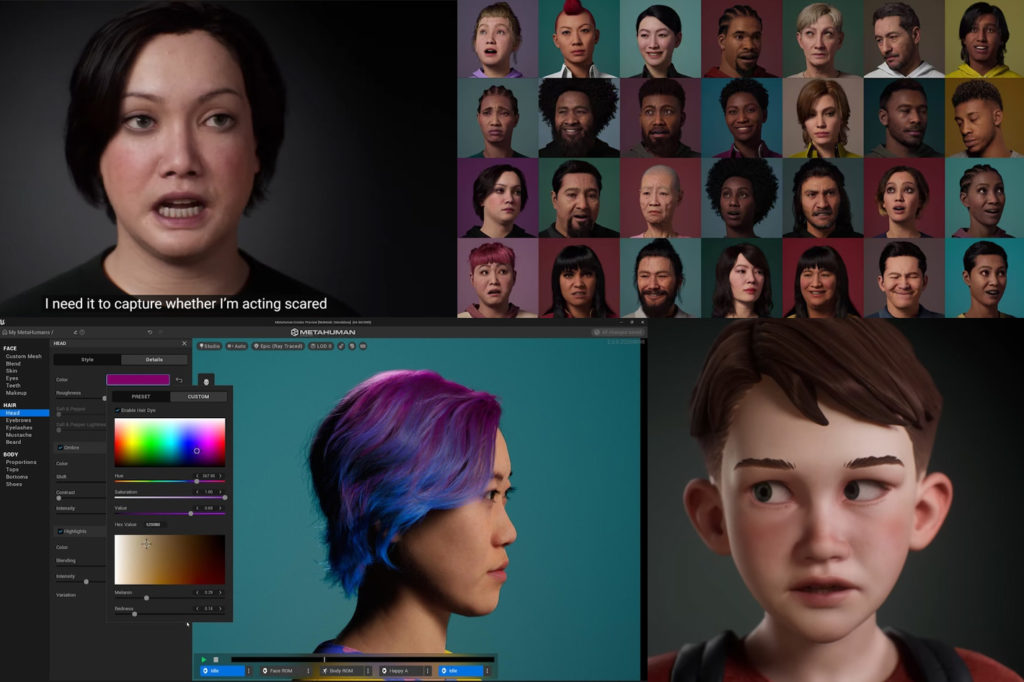

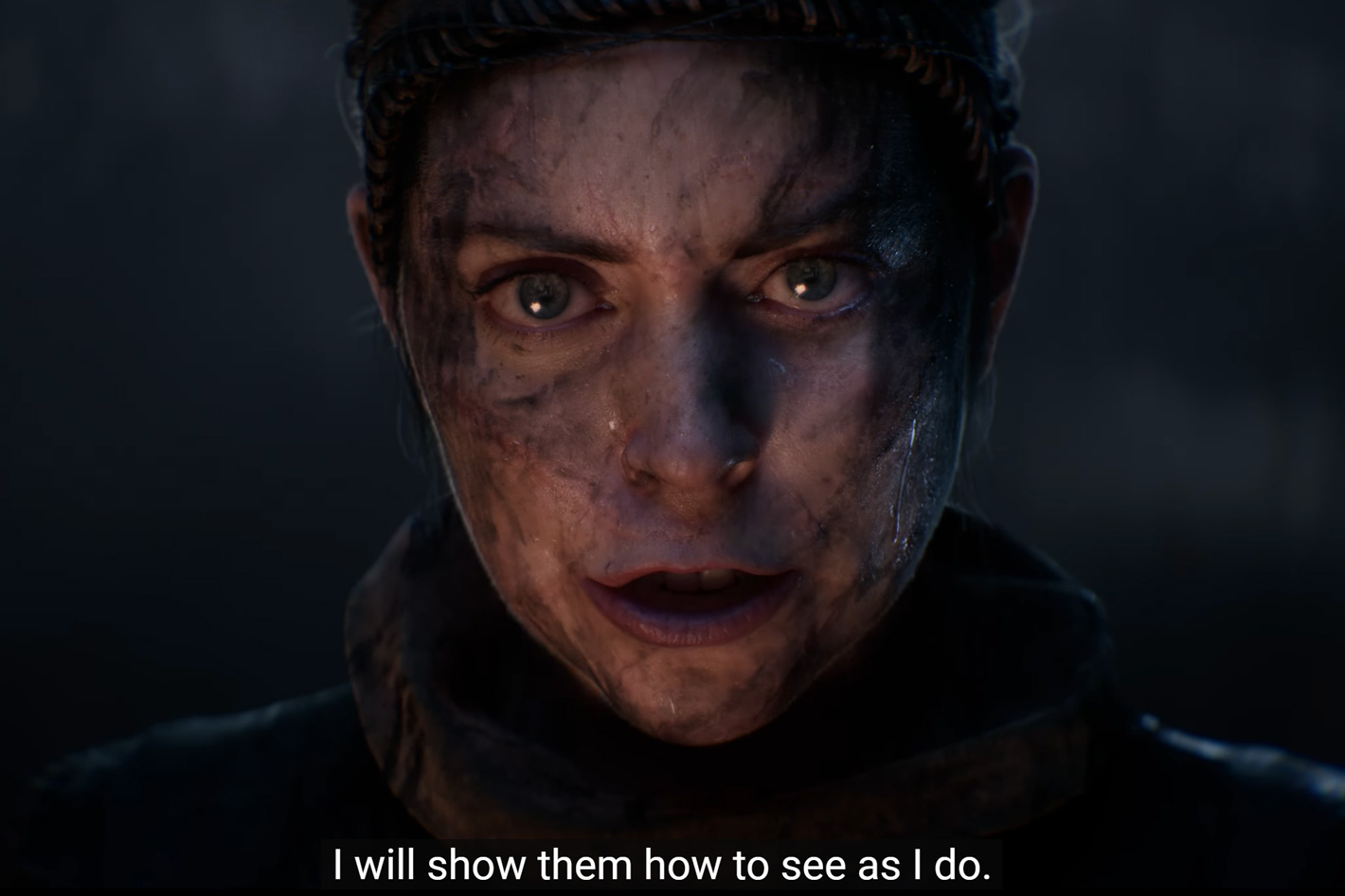

Although we’ve come a long way, existing performance capture solutions still struggle to faithfully recreate every nuance of an actor’s performance on a character. For truly realistic animation, skilled animators often have to painstakingly tweak the results in an arduous and time-consuming process. Epic Games has tried to change that, and its MetaHuman platform has helped move the industry forward. Now the company says that drastic changes are coming.

The next step arrives in the coming months, with summer as the probable launch date for MetaHuman Animator, a new feature set that brings easy high-fidelity performance capture to MetaHumans. Epic Games says that MetaHuman Animator will enable you to use your iPhone or stereo helmet-mounted camera (HMC) to reproduce any facial performance as high-fidelity animation on MetaHuman characters. With it, you’ll be able to capture the individuality, realism, and fidelity of your actor’s performance, and transfer every detail and nuance onto any MetaHuman to bring it to life in Unreal Engine.

According to Epic Games, MetaHuman Animator will produce the quality of facial animation required by AAA game developers and Hollywood filmmakers, while at the same time being accessible to indie studios and even hobbyists. With the iPhone you may already have in your pocket and a standard tripod, you can create believable animation for your MetaHumans that will build emotional connections with your audiences—even if you wouldn’t consider yourself an animator.

Works on iPhone 11 or later

It’s not just for iPhone: according to Epic Games, MetaHuman Animator also works with any professional vertical stereo HMC capture solution, including those from Technoprops, and delivers even higher-quality results with them. If you also have a mocap system for body capture, MetaHuman Animator’s support for timecode means that the facial performance animation can be easily aligned with body motion capture and audio to deliver a full character performance. It can even use the audio to produce convincing tongue animation.

MetaHuman Animator’s processing capabilities will be part of the MetaHuman Plugin for Unreal Engine, which, like the engine itself, is free to download. If you want to use an iPhone (MetaHuman Animator will work with an iPhone 11 or later), you’ll also need the free Live Link Face app for iOS, which will be updated with some additional capture modes to support this workflow.

MetaHuman Animator was announced at the Game Developers Conference 2023 during Epic Games’ State of Unreal presentation, where the company revealed its long-term vision for the future of content creation, distribution, and monetization, including how it is laying the foundations for a connected, open ecosystem and economy that will enable all creators and developers to benefit in the metaverse.

Real-time Electric Dreams

A peek at what’s coming in Unreal Engine 5.2 was also part of the presentation. Unreal Engine is the backbone of the Epic ecosystem. The launch of UE5 last spring put even more creative power in the hands of developers. Since that release, 77% of users have moved over to Unreal Engine 5. Unreal Engine 5.2 offers further refinement and optimizations alongside several new features.

Epic Games shared Electric Dreams, a real-time demonstration that showcases new features and updates coming as part of the 5.2 Preview release, available today via the Epic Games Launcher and GitHub. In the live demo at the State of Unreal, a photorealistic Rivian R1T all-electric truck crawls off-road through a lifelike environment densely populated with trees and lush vegetation built with Quixel Megascans, and past towering craggy rock structures built with Quixel MegaAssemblies.

Epic notes that “The R1T’s distinct exterior comes to life in the demo thanks to the new Substrate shading system, which gives artists the freedom to compose and layer different shading models to achieve levels of fidelity and quality not possible before in real time. Substrate is shipping in 5.2 as Experimental.”

The state-of-the-art R1T showcases the latest technology and vehicle physics, with the truck’s digi-double exhibiting precise tire deformation as it bounds over rocks and roots, and true-to-life independent air suspension that softens as it splashes through mud and puddles with realistic fluid simulation and water rendering.

The opportunities for creators are limitless

In addition, the large open-world environment is built using procedural tools created by artists, which build on top of a small, hand-placed environment where the adventure begins. Shipping as Experimental in 5.2, new in-editor and run-time Procedural Content Generation (PCG) tools enable artists to define rules and parameters to quickly populate expansive, highly detailed spaces that are art-directable and work seamlessly with hand-placed content.

The State of Unreal featured breathtaking demos from Epic partners including Cubit, Kabam, CI Games, and NCSoft that showcased what’s possible right now with Unreal Engine 5 and other Epic tools. Awe-inspiring projects like these are proof that an exciting new era of virtual worlds, games, and experiences is already beginning to dawn.

Epic Games believes that “Armed with powerful tools, a vast marketplace for digital content, unfettered access to a global audience, and a new equitable economy, the opportunities for creators are limitless.”
