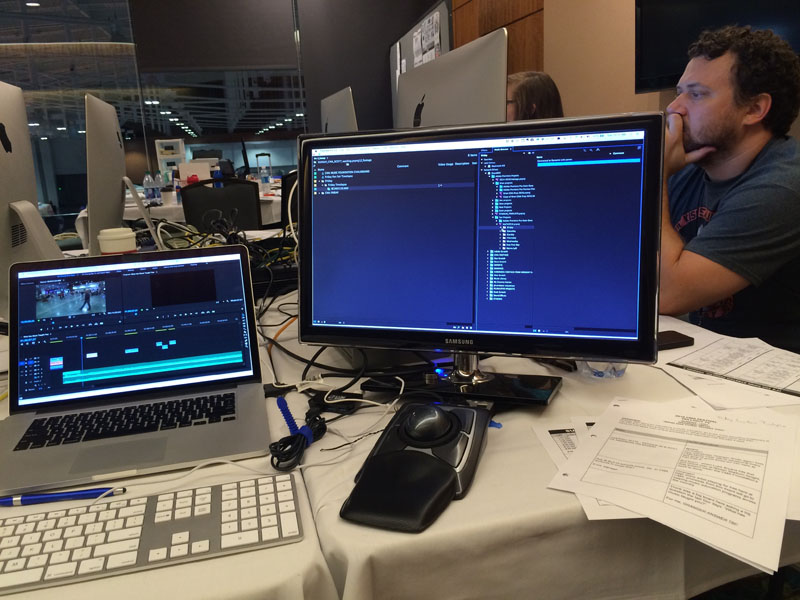

Earlier this summer I was hired to edit same-day content for CMA Music Festival. It’s one of the biggest music festivals around, and they produce a good bit of content that shows nightly at the stadium as well as online. Working with our friends at Taillight TV, we needed five warm bodies around a table for the weeklong job to produce this daily content: three editors and two assistants. Production teams would fan out all over downtown Nashville and capture footage during the day as well as in the evenings. Matt from Taillight wrote up a piece about our experience on LinkedIn. That’s our team below in a cool 360 photograph, if your browser supports it.

CMA Post Ninjas – Spherical Image – RICOH THETA

Taillight would be producing, and we would be editing daily wrap-ups, look-aheads to the next day, and various vignettes and fun video bits featuring talent who were there for CMA Fest. Below is one of the daily wrap-ups we produced during the festival.

https://youtu.be/dQc6694yke0?list=PLSR1UreQHwa3_ylC8GT9cGyUaOIhGNd44

The discussion on how we would pull this off quickly led us to conclude that we needed shared storage to meet our daily deadlines. I thought back to NAB 2016 and my interview with Lumaforge in their workflow suite at the Renaissance Hotel. As I left the suite, Sam Mestman casually mentioned that if I ever had a job where I could make use of the Jellyfish, I should let him know and I could try it out. CMA Fest seemed like the perfect place for desktop shared storage. I’ll admit I know very little about shared storage (most everything I know I owe to the 5 Things series) and even less about networking, but Sam assured me that someone like me is the target market for the Jellyfish. After a few discussions about what we would be shooting, what we would be cutting with and what capacity we might need, a Jellyfish arrived at the Taillight office just in time for CMA Fest.

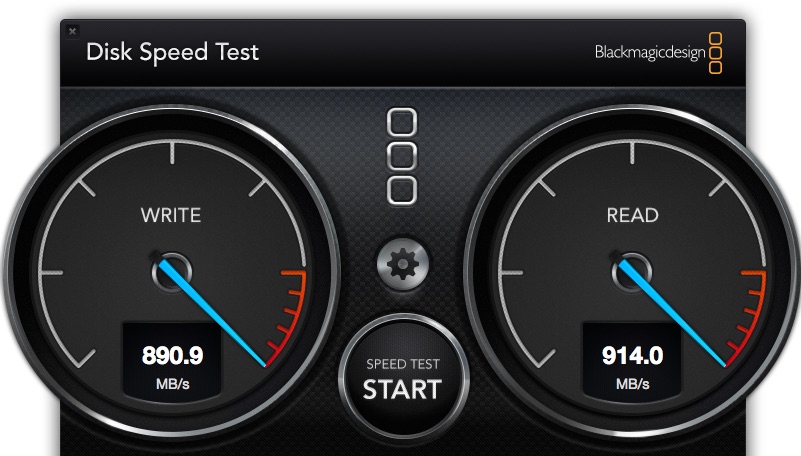

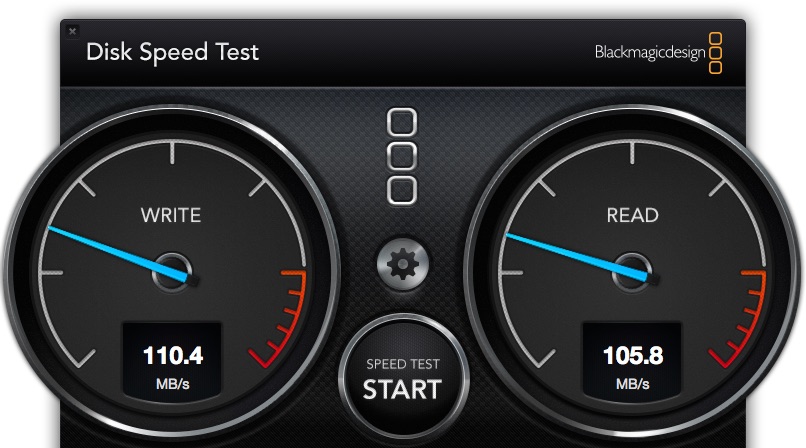

Since we were shooting 1080, we didn’t need the absolute fastest connections for every editor. Shared storage uses networking to connect the computers, so we were all connected via GigE with the exception of one 10GigE connection.

The Jellyfish looks like a computer, which it essentially is. It’s a black box with a bunch of networking ports on the back.

There’s a bunch of hard drives inside, but I never took the side off just to have a look. We did have to pull the side panel off once, but more on that later. There’s a power button on the front and that’s about it. Depending on your needs, for example if you’re cutting 4K, you might want a lot more 10GigE connections than GigE connections. Multiple 4K streams need a lot more throughput than 1080 does. A lot more.
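To put rough numbers on the 1080-versus-4K point, here is a back-of-the-envelope sketch in Python. It assumes nominal line rates of 1,000 Mbps for GigE and 10,000 Mbps for 10GigE, derates them by a hypothetical 70% efficiency to leave headroom for network overhead, and uses placeholder per-stream bitrates (150 Mbps for an HD stream, 600 Mbps for a 4K stream). None of these figures were measured on the Jellyfish; swap in the published data rates for your own codec and frame rate.

```python
# Back-of-the-envelope link capacity check. All rates below are assumptions,
# not measurements from the Jellyfish.
LINK_MBPS = {"GigE": 1_000, "10GigE": 10_000}  # nominal line rates in Mbps

# Hypothetical per-stream bitrates in Mbps; replace with your codec's figures.
HD_STREAM_MBPS = 150.0
UHD_STREAM_MBPS = 600.0


def max_streams(link_mbps: float, stream_mbps: float, efficiency: float = 0.7) -> int:
    """How many streams of stream_mbps fit on a link after derating it by efficiency."""
    if stream_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("stream_mbps must be positive and efficiency in (0, 1]")
    usable_mbps = link_mbps * efficiency  # leave headroom for protocol overhead
    return int(usable_mbps // stream_mbps)


if __name__ == "__main__":
    for link, rate in LINK_MBPS.items():
        hd = max_streams(rate, HD_STREAM_MBPS)
        uhd = max_streams(rate, UHD_STREAM_MBPS)
        print(f"{link}: ~{hd} HD streams or ~{uhd} 4K streams")
```

With these placeholder numbers a single GigE link carries a handful of HD streams but only about one 4K stream, which is why a 4K team would want most seats on 10GigE.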

Our goal was to reach the last encode of the week.

Networking connections?

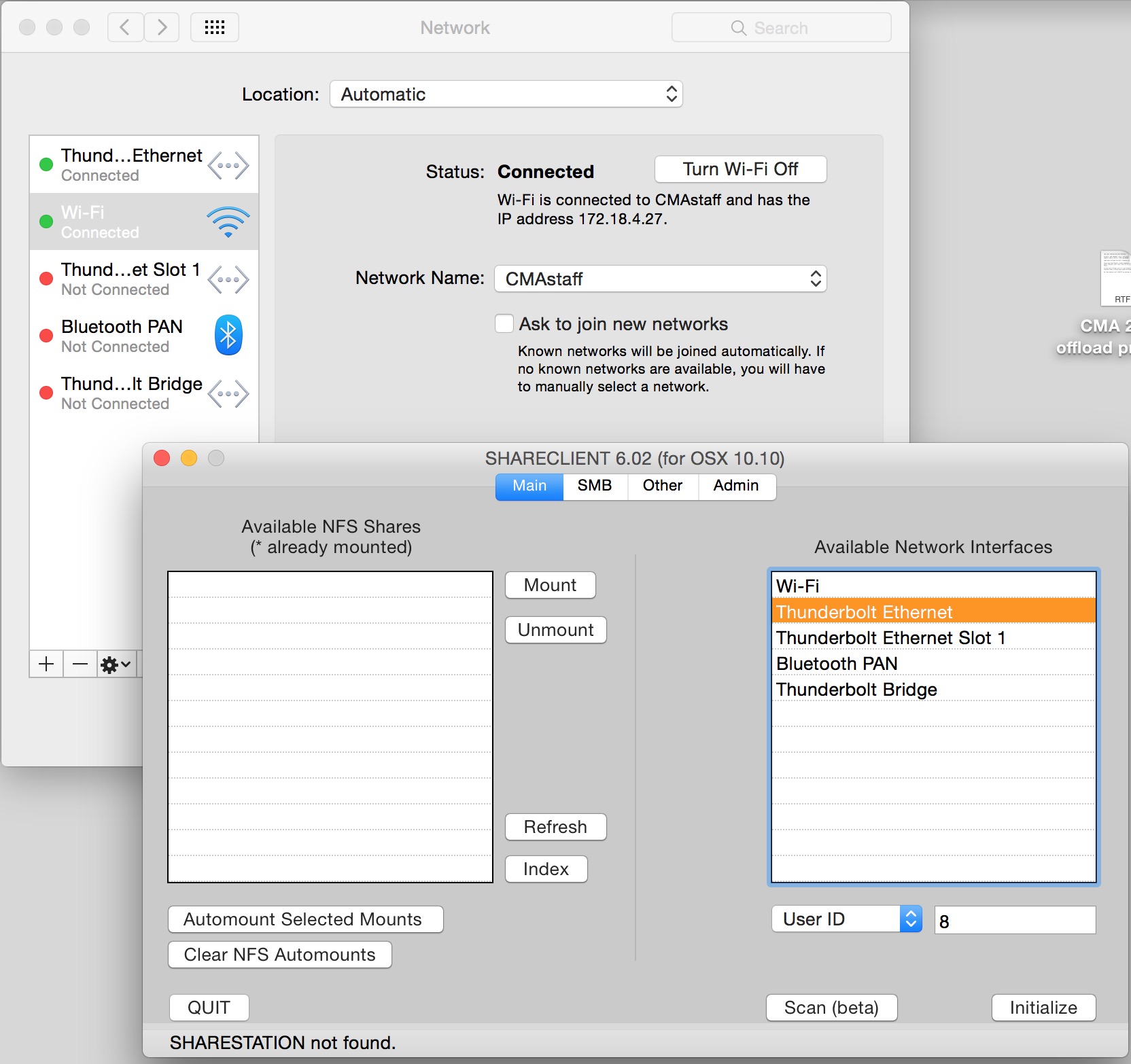

One thing I was worried about was the networking setup, as I don’t think of networking connections as being as easy as the “plug and play” we get with a Thunderbolt or USB 3 connection (of course, those are single-user connections, not shared storage). Lumaforge has, in a sense, solved this with their software: the SHARECLIENT. Every connecting computer has to install the Lumaforge SHARECLIENT. Once a computer is powered on and plugged in, you use the SHARECLIENT software, as that is what talks to the Jellyfish. I’m not sure if “talks” is the right term in networking-speak, but something has to handle the conversation between the computer and the storage. There is a much deeper backend you can dig into, as there is with all shared storage, but the idea is to keep the user away from complex settings and setups.

We were cutting on Adobe Premiere Pro, which may not be what most people associate with Lumaforge, as the company is more closely associated with Final Cut Pro X. Rest assured that the Jellyfish works just fine with Adobe Premiere Pro, and I can only assume it will work just fine with Avid Media Composer as well. Lumaforge even pointed me to a product called MIMIQ that offers “real AVID sharing on any generic SMB”, including bin-locking. The Lumaforge range isn’t just a Mac product either; it is Macintosh, PC and Linux compatible.

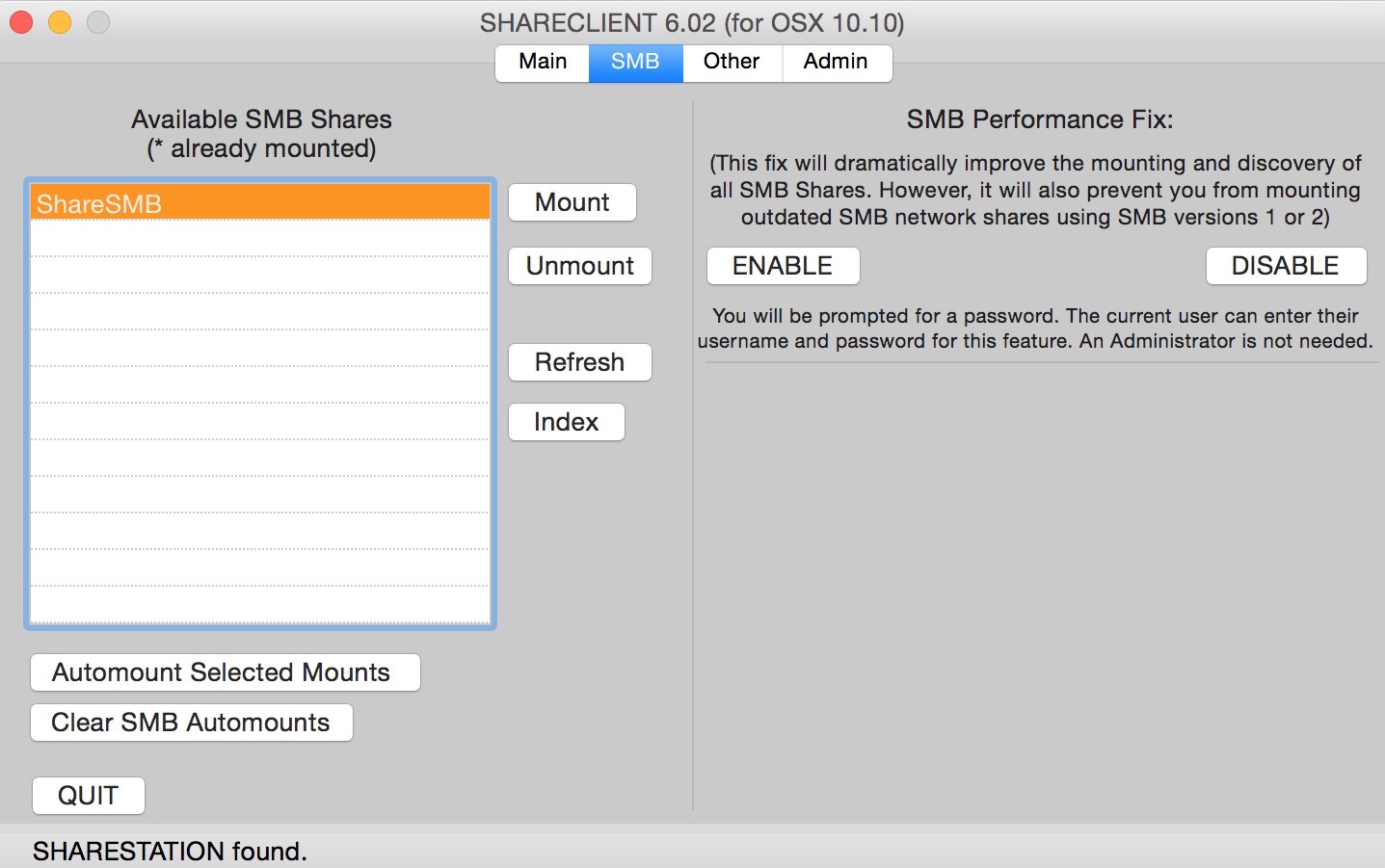

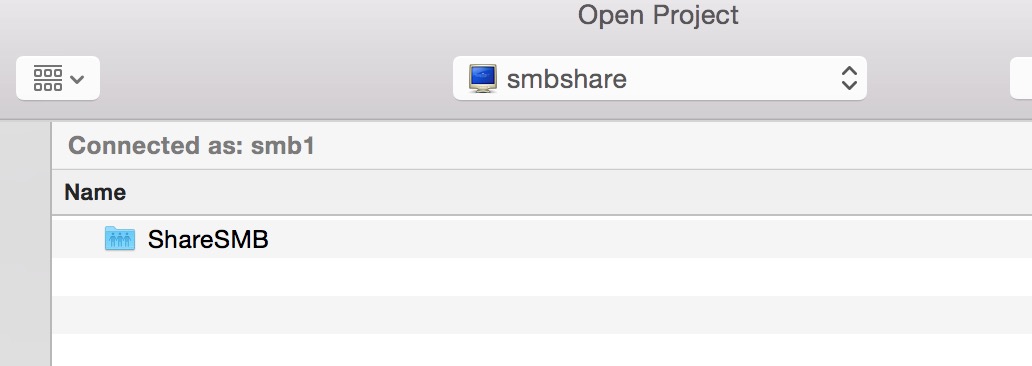

One choice that must be made when connecting to the Jellyfish with the SHARECLIENT software is how that connection is made. Is it by NFS or SMB? [Insert my I don’t want to learn a ton of networking crap in order to edit face here.] We were advised that SMB is the better connection for Adobe Premiere Pro CC, so that is what all five workstations used to connect to the Jellyfish. Each port on the back of the Jellyfish is assigned a number, starting with 1, and to connect, the SHARECLIENT has to know which port you’re connected to. Each of our workstations was an iMac or MacBook Pro, so we connected all of them via Thunderbolt-to-Ethernet adapters, except for the one that connected via a Sonnet 10GbE Twin 10G Thunderbolt adapter. You could also plug directly into the Ethernet port of a Mac Pro tower, a “trashcan” Mac Pro or any other Mac with built-in networking ports. You determine a port’s number by counting the connections, starting at the top. It would be helpful if the ports on the back of the Jellyfish were actually labeled with their numbers (even just a sticker), as that would make troubleshooting easier if you’re having difficulty connecting.

There is a Scan button that will scan the ports looking for the numbered connection. We had mixed success with the scan, which is still in beta, as the button in version 6.02 of the SHARECLIENT indicates.

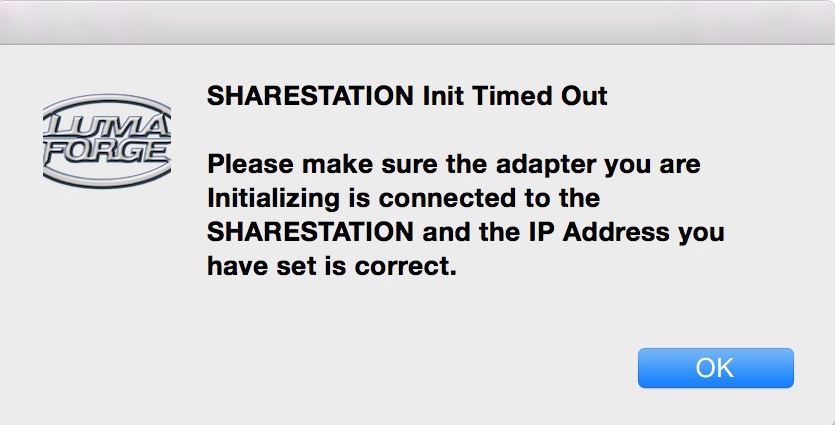

Once everyone had their ports established, connecting was usually easy. When we powered up the machines in the morning, the connection was usually remembered and it took just a couple of seconds to mount the Jellyfish. On a few occasions the 10GigE connection seemed more finicky than the regular GigE connections, and we had to power cycle the machine, or power cycle the Sonnet 10GbE adapter, to get them talking again. We were sent the wrong power supply for the 10GigE adapter, so that may have had something to do with it being finicky.

One big plus about this storage was that, for the most part, once we got it set up and connected, it just worked. We were able to pound away without even thinking about it. I don’t know how we would have made this job happen as seamlessly as it did without shared storage. As we talked through the logistics of working with each editor on local storage, it quickly became apparent that it would be a file-transfer traffic jam.

We gave ourselves two days of setup and prep before the real deadline days began, which was a good thing: during that first setup we had trouble connecting via 10GbE and had to contact Lumaforge support. The Jellyfish shipped in a big box from California to the Taillight TV offices in Nashville. An initial setup happened at the Taillight office before the Jellyfish was packed up and taken to our designated space at Nashville’s brand-new Music City Center convention center.

That’s a lot of moving for what is essentially a desktop computer with lots of spinning hard drives and networking cards seated in a chassis. To make the initial 10GigE connection work, we had to open up the side of the Jelly (we affectionately began calling it The Jelly) and re-seat the card. Once we did that, the connection was established just fine. Lumaforge reached that diagnosis by jacking into one of our assistants’ iMacs and troubleshooting remotely. That kind of support is nice peace of mind for someone who isn’t networking-savvy.

One reason Lumaforge was happy to send this machine out for testing and review is that we were cutting in Adobe Premiere Pro as opposed to Final Cut Pro X. As I mentioned above, Lumaforge and the Jellyfish have more of a connection to Final Cut Pro X; our friends at FCP.co did their own pounding of the Jellyfish on FCPX. I think Lumaforge was eager to have the Jellyfish pounded on by Premiere editors on a real-world job … and that’s what we did.

The Premiere Pro Media Browser was the key to the workflow

As mentioned above, we had three editors cutting content and two assistants ingesting camera cards, stringing out sequences and, time permitting, pulling selects. To make this work, we made extensive use of the Premiere Pro Media Browser. I wasn’t sure how well all of this would go. I was a bit nervous about the Jellyfish, and shared storage in general, because the only shared storage I’d ever used was an Avid Unity/ISIS or a Facilis TerraBlock. Those are much more enterprise-level solutions, with either an IT person on staff to manage them or a service contract with a reseller to help with setup and troubleshooting when things go wrong. With the Jellyfish we were, for the most part, the setup and IT department! Lumaforge does support the Jellyfish, but with no IT person on-site, we had to get on the phone with them when we needed help. They were able to fix every issue that came up easily, either over the phone or by jacking into our assistant’s computer.

The second thing I was a bit nervous about was how well Premiere would behave as we all worked together. The Media Browser is Premiere’s tool for sharing data between projects and editors. I was a bit nervous about it because there have been times when the Media Browser hasn’t performed as quickly or as reliably as one might want. To be fair, the issues I’ve seen in the past had more to do with linking to After Effects projects than with simply looking into another Premiere Pro project and grabbing a sequence. It’s been reliable in that regard, and it worked as expected for us. If it’s good enough for David Fincher and the Coen Brothers, I guess it’s good enough for me.

Protocol was for the assistants to offload camera cards and copy them to the Jellyfish, string those cards out in a sequence, and pull selects matching what the editor was cutting. Each editor and each assistant worked in their own Premiere Pro project. When media was prepped, the assistant alerted the editor that the footage was ready, and we would use the Media Browser to look into the assistant’s project and bring the sequence and media we needed into our own editor projects.

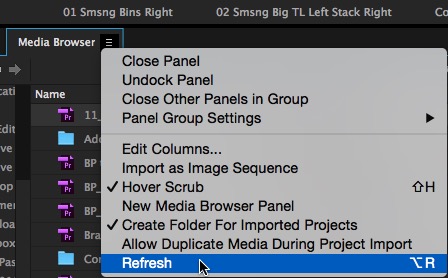

This worked exactly as planned and exactly as designed. What surprised me about this workflow (by far the most extensively I had ever worked with another editor through the Media Browser) was that I could keep the assistant’s project loaded in my Media Browser window, and as the assistant loaded footage and saved their project, I would see the Media Browser update itself, ready for me to grab the media. It wasn’t real-time, but it was darn near real-time enough. The Media Browser has an automatic refresh built in, but it’s worth noting there is also a manual refresh function located under the Media Browser tab menu.

I assigned Refresh to a keyboard shortcut, as I used it quite often during the week. As the job wore on and I was often looking for only a shot or two, I wouldn’t even import the assistant’s selects sequence into my project. I would just open the sequence from the Media Browser in the Source Monitor and edit the shot or two I needed into my timeline. This worked very well and enabled us to deliver all of our videos on time every night … with the exception of the first night, but that was more an issue with writing our final content to XDCAM disc, our required delivery format. On a few occasions near the end of the day, the Media Browser got very sluggish and didn’t want to load. Usually a restart of PPro and/or the computer fixed that sluggishness. Once, about three-quarters of the way through the job, we rebooted the Jellyfish just to give it a fresh start. I also did some Adobe housekeeping once with help from Digital Rebellion, which cleared my caches and pepped everything up.

I chatted with Adobe after the job and got some advice on how to configure the system next time. We had decided to make the Jellyfish the central place for every single bit of data Premiere was going to write. Each editor kept their project file on the Jelly, all the acquired media lived on the Jellyfish, and we set up separate scratch folders for each editor on the Jellyfish. All of the folders in Project Settings, with the exception of the PPro project backups, went to the Jellyfish. That meant any renders were written back to the Jelly. We also had Premiere put the media cache in each editor’s Jellyfish scratch folder, assigned via Preferences. That’s also what I do with the RAIDs in my edit suite. I’ve said many times how I hate Premiere’s default of putting the media cache on your system drive, as those files can get really big really fast. Adobe recommended that, ideally, the media cache not go on the RAID at all but on a fast, separate drive such as an SSD. This is similar to how I’ve heard people recommend setting up Photoshop and After Effects caches. Good advice going forward, and I’ll try it on the next job. It’s great how you learn something new on most every job that you can implement on the next one.
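One way to keep this plan straight across several workstations is to write it down as a per-editor layout. The Python sketch below is only a planning aid, not anything Premiere Pro reads: the volume names (`/Volumes/Jellyfish`, `/Volumes/CacheSSD`) and folder names are hypothetical, and the `cache_on_shared` flag switches between the setup we used (media cache in each editor’s Jellyfish scratch folder) and Adobe’s suggestion (media cache on a fast local SSD). Project backups are left out because they did not go to the Jellyfish.

```python
from pathlib import PurePosixPath

# Hypothetical volume names; substitute whatever your drives mount as.
SHARED = PurePosixPath("/Volumes/Jellyfish")
LOCAL_SSD = PurePosixPath("/Volumes/CacheSSD")


def editor_layout(editor: str, cache_on_shared: bool = False) -> dict[str, PurePosixPath]:
    """Return where each kind of Premiere Pro write goes for one editor."""
    scratch = SHARED / "Scratch" / editor  # per-editor scratch folder on shared storage
    if cache_on_shared:
        # The setup used on this job: media cache inside the shared scratch folder.
        media_cache = scratch / "MediaCache"
    else:
        # Adobe's suggestion: keep the media cache on a fast, separate local drive.
        media_cache = LOCAL_SSD / "MediaCache" / editor
    return {
        "project_file": SHARED / "Projects" / f"{editor}.prproj",
        "media": SHARED / "Media",        # acquired media shared by everyone
        "renders": scratch / "Renders",   # renders written back to shared storage
        "media_cache": media_cache,
    }


if __name__ == "__main__":
    for name in ("editor1", "editor2", "editor3"):
        for kind, path in editor_layout(name).items():
            print(f"{name:8} {kind:12} {path}")
```

Keeping the layout in one place makes it easy to check that every seat points renders at its own scratch folder while only the media cache moves off the shared volume.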

The Jellyfish delivered

There is a whole lineup of ShareStation shared storage products from Lumaforge, ranging from nearly $30,000 for the Studio machine down to a starting price of $7,495 for the Jellyfish. The middle-ground Indie system starts just below $20,000. If you’re familiar with heavy-duty shared storage, these prices might not come as a surprise. But if you’re new to shared storage and used to a desktop RAID, they might seem steep. Big, heavy-duty desktop RAIDs can approach $10K depending on configuration, but they are limited to direct-attached storage, with no networking with other editors. What would be really useful for an editor like me, who doesn’t need shared storage like the Jellyfish on a regular basis, would be a rental model. I don’t know if this would come from Lumaforge directly, but it would be convenient to get desktop shared storage on a short-term basis for certain jobs. Perhaps this could be an investment for the local camera rental house in your town.

Overall, I have to say that the Jellyfish served us well, and I don’t know how we could have pulled off the job without it. There’s no doubt that many teams of editors cut same-day content together without shared storage … I’ve done a fair bit of that myself. But compare a local-storage, sneakernet plan with collaborative editing on good shared storage and there is no contest: the shared storage workflow is far superior. What made it so much easier in our case was how readily we were able to fit the Lumaforge Jellyfish into a multi-editor workflow. Delivering on time and on budget = success. I don’t know how to measure it any better than that.

Do you have same-day editing content that you need produced with a team like this? Give me a shout and we can talk about it or reach out to Taillight and ask for Matt Houser. We would love to get this team together again!