In developing the first episode for season two of 5 THINGS, I did quite a bit of research on HDR. During that time, I came across many incorrect assumptions and myths about HDR. Surprisingly, many were from other tech-minded folks in the industry. In honor of the recent Mythbusters swan song, I’ve decided to bust 3 frequently repeated myths about HDR.

1. HDR and 4K are mutually exclusive. They are either/or.

False.

In fact, folks have been shooting in formats that allow for greater dynamic range for almost 160 years – way before video was even invented. We now call that HDRi – High Dynamic Range Imaging – and it involved taking multiple photographic exposures of the same image, each of a different length, and combining them after the fact. It’s low tech nowadays to be sure, but it certainly produced a viewable image with a much wider range of lightness and darkness than traditional single-exposure film.
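If you like to see the idea in code, here is a minimal sketch of combining bracketed exposures. It assumes a perfectly linear sensor, 8-bit pixel values, and a simple "trust the mid-tones" weighting; the function names and the sample numbers are mine for illustration, not how any particular camera or app does it.

```python
def hat_weight(value, max_value=255):
    """Trust mid-range values most; clipped shadows and highlights get 0."""
    mid = max_value / 2
    return 1.0 - abs(value - mid) / mid


def merge_exposures(exposures):
    """Merge bracketed exposures into relative scene brightness per pixel.

    exposures: list of (pixels, exposure_time_seconds), where pixels is a
    list of 0-255 values. Assumes a linear sensor (an assumption).
    """
    pixel_count = len(exposures[0][0])
    merged = []
    for i in range(pixel_count):
        weighted_sum = 0.0
        weight_total = 0.0
        for pixels, seconds in exposures:
            weight = hat_weight(pixels[i])
            # Dividing by exposure time puts every frame on the same scale.
            weighted_sum += weight * pixels[i] / seconds
            weight_total += weight
        # If every frame is clipped at this pixel, there is nothing to trust.
        merged.append(weighted_sum / weight_total if weight_total else 0.0)
    return merged


# Three frames of the same three-pixel scene: long, medium, short exposure.
brackets = [
    ([10, 100, 255], 1.0),   # brightest pixel is clipped here
    ([1, 10, 100], 0.1),
    ([0, 1, 10], 0.01),      # darkest pixel is crushed here
]
scene = merge_exposures(brackets)
```

No single frame holds all three pixels cleanly, but the merged result covers the whole range, which is the entire point of bracketing.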

Fast-forwarding almost 130 years, HDR with video became a reality, albeit only known to enthusiasts and folks with deep pockets. It followed the same methodology – shooting the same subject at the same time at different exposure levels, and then combining them downwind of the image acquisition.

It was known then that acquisition was possible, but the immediate limitation was the exhibition of said HDR content. That and the exorbitant price tag. And so it became a video tool available in high-end industrial applications and to enthusiasts.

It wasn’t until the era of the Y2K bug that the price tag for sensors that could capture a wider dynamic range began to be somewhat accessible and nearly affordable, and physically small enough to be developed into an affordable acquisition tool.

That puts us squarely into today’s marketplace, which just so happens to be right as UHD/4K acquisition is being pushed (as for who is doing the pushing, we’ll save that for flame wars on forums, I’m sure).

Thus, 4K and HDR happen to converge at a point in consumer technology time…and they just so happen to coexist in relative harmony.

2. I’ll have to develop brand new workflows for HDR!

False.

Well, you might. But the industry already has a good foundation for this. Remember offline/online workflows? I know, it seems so long ago…

In the past ~12 years, post production has enjoyed a digital luxury in the media creation realm: content encoded at the time of recording that could be edited robustly in post production as is, without any transcoding. News organizations enjoyed XDCAM formats, and many cinema-type cameras were designed with post production in mind, shooting more robust, post-friendly formats in camera. Shooting ProRes and DNxHD flavors allowed quality formats that met and even exceeded broadcast and exhibition specifications to be usable throughout post. And for a short time, for a good majority of projects, we enjoyed a homeostasis. Sure, we had the feature film market, which has always worked the offline/online way, and many TV shows that still followed that model, normally because of storage costs…but I digress.

But there was a time. A time…before. A time when folks shot on a physical medium – film! – and had to do an offline edit; that is, edit with a lower quality version of the raw footage, for the sake of ease of use. And with HDR, that’s just what we have to do now.

We’ve been spoiled by the “edit natively” NLE battle cry. Those who edit often are well aware that a smooth edit with high-quality camera originals only happens when all of the post stars (and codecs and CPUs) align. The desire for immediate gratification must be tempered with the reality of technology, coupled with a pleasurable post experience.

Thus, it’s imperative you remember the post days of yore. Shoot – create proxies with a one-light – edit – reconform – grade – export. It’s not immediate joy joy feelings, but you will certainly enhance your calm.
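The reconform step is the one people tend to forget, so here is a rough sketch of what it boils down to: swapping each proxy clip in the cut for its camera original while keeping the in and out points. The `_proxy` filename suffix, the event dictionaries, and the file names are all assumptions I made up for illustration; your NLE does this with its own relink tools and project formats.

```python
from pathlib import PurePosixPath

PROXY_SUFFIX = "_proxy"  # hypothetical naming convention, not a standard


def clip_key(path):
    """Reduce a media path to a name shared by a proxy and its original."""
    stem = PurePosixPath(path).stem
    if stem.endswith(PROXY_SUFFIX):
        stem = stem[: -len(PROXY_SUFFIX)]
    return stem


def reconform(edit_events, original_paths):
    """Point each edit event at its camera original, keeping in/out points.

    Returns (conformed_events, missing_proxy_paths).
    """
    originals = {clip_key(p): p for p in original_paths}
    conformed, missing = [], []
    for event in edit_events:
        key = clip_key(event["clip"])
        if key in originals:
            conformed.append({**event, "clip": originals[key]})
        else:
            missing.append(event["clip"])
    return conformed, missing


offline_cut = [
    {"clip": "proxies/A001_C003_proxy.mov", "in": 120, "out": 240},
    {"clip": "proxies/A001_C007_proxy.mov", "in": 0, "out": 96},
]
camera_originals = ["raw/A001_C003.mxf"]
conformed, missing = reconform(offline_cut, camera_originals)
```

Anything that lands in `missing` is a clip you cut with but cannot find the original for, which is exactly the thing you want to know before the grade, not during it.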

3. All I have to do is put a LUT (Look Up Table) on my HDR image and all will be good, right?

False.

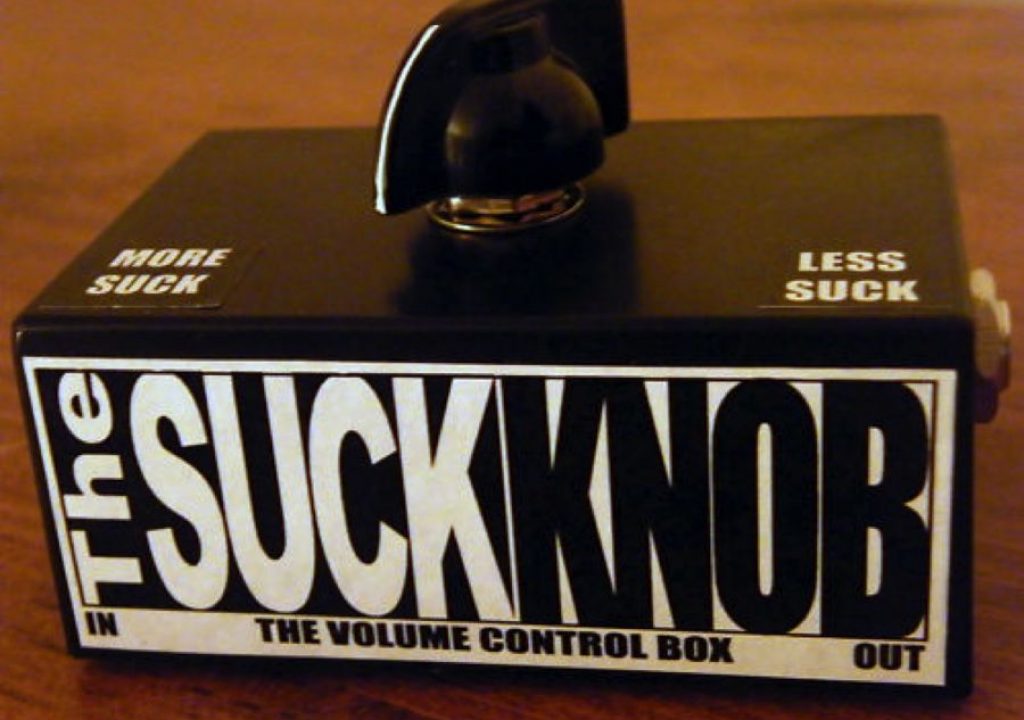

In audio, we have the term “suck knob”. As in, your audio sucks, let me turn down (“fix”) the amount of suckage with this single knob.

Footage with a LUT applied – whether it was shot with HDR in mind or simply shot flat for better color latitude in post – will have just as many color flaws as the footage you used to shoot in a non-flat format. You simply have more latitude now to FIX that color later. It’s not a Staples red button (“That was easy!”). A LUT is not a fix; a LUT is a starting point.
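To make that concrete, here is a toy sketch of a simple 1D LUT: a 256-entry table that stretches flat footage back out to full contrast. The black and white points and the pixel values are made-up numbers for illustration, and real LUTs (especially 3D ones) are more involved, but the behavior is the same: every input value maps to one fixed output value, and the table knows nothing about what is wrong with your particular shot.

```python
def build_contrast_lut(black=32, white=224):
    """Build a 256-entry table that stretches [black, white] to [0, 255]."""
    lut = []
    for code in range(256):
        stretched = (code - black) * 255 / (white - black)
        lut.append(max(0, min(255, round(stretched))))
    return lut


def apply_lut(pixel, lut):
    """Look up each channel of an (R, G, B) pixel in the same table."""
    return tuple(lut[channel] for channel in pixel)


lut = build_contrast_lut()

neutral_gray = (128, 128, 128)   # shot with a correct white balance
blue_cast = (128, 128, 150)      # same gray card, white balance was off

print(apply_lut(neutral_gray, lut))  # channels stay equal to each other
print(apply_lut(blue_cast, lut))     # blue is still higher than red and green
```

The blue cast survives the LUT, with more contrast behind it. Fixing it is still a grading decision you have to make after the LUT is on.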

I’m serving up a whole heapin’ amount of info on HDR in the upcoming episode. Check out Episode One of 5 THINGS on HDR, available on Tuesday, March 15th!
