The Tech Retreat is an annual four-day conference (plus Monday bonus session) for HD / Video / cinema geeks, sponsored by the Hollywood Post Alliance. Day 4 offered a SMPTE update, lens metadata, lightfield cameras, new encoding paradigms, Ethernet AVB and video, upconverting to 4K, and the REDRAY player.

Herewith, my transcribed-as-it-happened notes and screengrabs from today's session; please excuse the typos and the abbreviated explanations. (I'll follow up with demo room stuff and the post-retreat treat later this weekend.)

SMPTE Update (including the economics of standards)

Peter Symes, SMPTE

Very thriving community; more standards work going on. User sessions December 2011, asking “what do you want out of SMPTE?” Led to 48-bit RGB, 48fps timecode. Another session will be held this year.

Technical committee on cinema sound; study of UHDTV; working on processes for software-based standards (not just written-on-paper standards, but dynamically updatable standards); study group on media production system network architecture.

New communications: outcome reports from each meeting block; summarize all in-process projects (139 at present); available to all:

https://www.smpte.org/standards/learn-about-standards-committees/meeting-reports

SMPTE Digital Library: SMPTE Journal from 1916 onwards (free to Professional members); Conference Proceedings and Standards (discounted to members). library.smpte.org. Fully searchable and cross-referenced. We don't necessarily have all the older conference proceedings; if you have a copy of an older doc and would consider giving it to us, let us know.

The economics of standards: Not well understood. “Standards are just for manufacturers; users can't make a difference; expensive.” Not true! Standards reduce repetitive R&D, increase interoperability. For users? Wider choices of vendors and products, ability to mix 'n' match. Value for everyone: manufacturers don't need to build everything, small companies can enter a niche (more competition; products not viable for large manufacturers).

Participation: yes, it's best to be there. But you can participate remotely, too; all SMPTE standards meetings have voice / web remote access.

Why should users participate? Do you think manufacturers understand what you really need? You don't get good standards without user input. Those who don't participate get the standards they deserve!

“Why do we have to pay for the standards we helped to create?” The benefit of participation is better standards; selling access to standards is an important element of SMPTE revenue.

To participate: Professional membership, $125/year, or pay a one-off fee; $250/year extra ($200 until 1 April) to participate in person (keeps the tire-kickers and whiners out). Exemptions for volunteers. VOIP or call in for free.

Contact info: Peter Symes, psymes@smpte.org, or Mauricio Roldan, mroldan@smpte.org.

centennial@smpte.org for suggestions on how to celebrate the 2016 centennial.

New Developments in Camera & Lens Positioning Metadata Capture and Their Applications for Matchmove, CGI and Compositing

Mike Sippel, Director of Engineering, Fletcher Camera

Lens metadata: lens ID including serial #, focal length (and zoom range), focus distance, iris.

Camera positioning metadata: GPS location, pitch/roll/yaw, relative and absolute positions to other references.

Originally written by hand on camera report; not always legible, not always done, not always as detailed as we'd like. Typically only shot-based, not frame-based (doesn't track parameters as things change). Must be transcribed and managed separately from the picture data (this is a BIG problem, often isn't passed along).

Lens metadata: Cooke/i, Arri LDS (Lens Data System), Canon EF. Lens data embedded in raw files. Frame-based, dynamic data. Always on, no user action required. All-internal electronics with no external encoders needed. Negligible additional cost in money and bandwidth.

Sensors inside the lens convert iris, zoom, focus positional data to onboard logic, which translates code values to f-stop, distance, focal length data, fed to camera through lens-mount contacts.
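A minimal sketch of that translation step, assuming the lens logic works from a per-lens calibration table and interpolates between entries. The table values, `FOCUS_TABLE`, and `code_to_value` are all hypothetical, made up for illustration; none of this comes from the Cooke /i, Arri LDS, or Canon EF specifications.

```python
from bisect import bisect_right

# Hypothetical calibration table: (raw encoder count, focus distance in meters).
# A real lens would carry its own factory-measured table; these numbers are invented.
FOCUS_TABLE = [(0, 0.5), (1000, 1.0), (2000, 2.0), (3000, 5.0), (4000, 20.0)]


def code_to_value(code, table):
    """Linearly interpolate a physical value from a raw encoder count."""
    codes = [c for c, _ in table]
    if code <= codes[0]:
        return table[0][1]          # clamp below the table
    if code >= codes[-1]:
        return table[-1][1]         # clamp above the table
    i = bisect_right(codes, code)   # index of the first entry above `code`
    (c0, v0), (c1, v1) = table[i - 1], table[i]
    t = (code - c0) / (c1 - c0)
    return v0 + t * (v1 - v0)


print(code_to_value(1500, FOCUS_TABLE))  # halfway between the 1.0 m and 2.0 m entries
```

The same lookup would presumably be repeated per frame for iris and zoom, each with its own table, which is what makes the data frame-based rather than shot-based.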

Why? VFX. VFX are typically 400-500 shots in a non-special-effects film. In the new “Planet of the Apes”, almost every shot is a VFX shot. Matchmove, CGI, compositing.

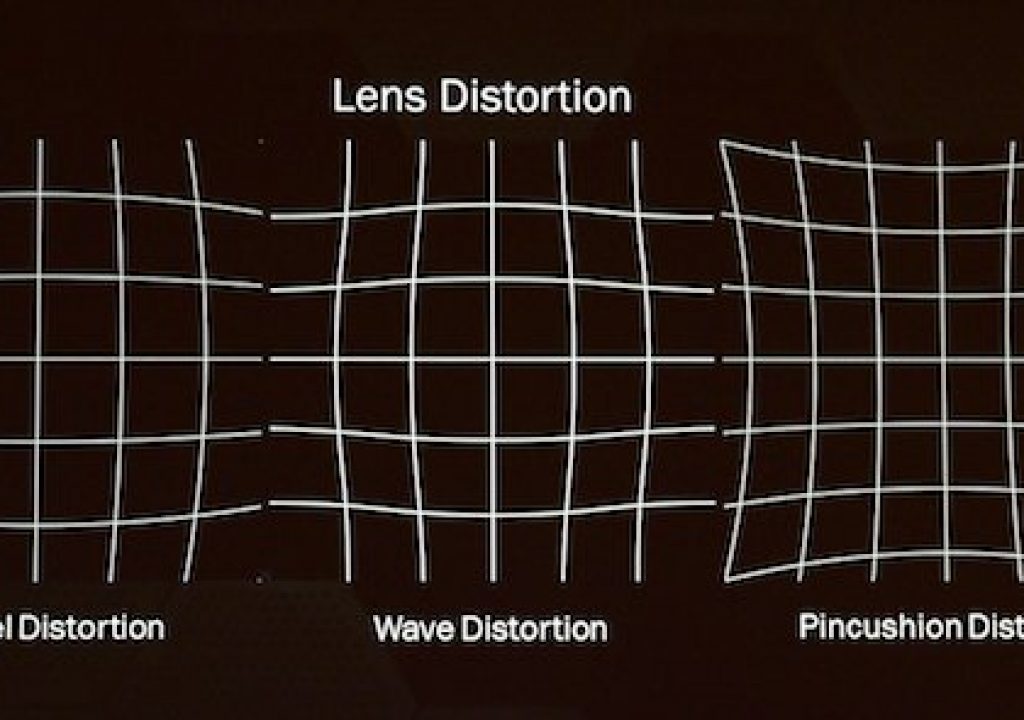

Lens distortion: barrel, pincushion, or wave (mustache) distortion.

And these are just the three simplest ones! Important for VFX: distortions have to match between lens image and composited elements. “Unwarping” software used to undistort source images.

Typically a checkerboard or other distortion chart is shot to characterize a lens.
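To make the distortion types concrete, here is a rough sketch using a two-term radial polynomial, a common textbook model and not necessarily what any particular unwarping package uses. The coefficients `k1` and `k2` are the sort of values a chart shoot would let you estimate; the function names and sample numbers are assumptions for illustration.

```python
def distort(x, y, k1, k2=0.0):
    """Apply a two-term radial polynomial to normalized image coordinates.

    With this sign convention, k1 < 0 pulls points toward the center (barrel),
    k1 > 0 pushes them outward (pincushion), and k1 < 0 with k2 > 0 gives a
    barrel center with pincushion edges (mustache).
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2=0.0, iterations=20):
    """Invert distort() by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


barrel = distort(0.5, 0.0, k1=-0.1)       # x moves inward
pincushion = distort(0.5, 0.0, k1=0.1)    # x moves outward
print(barrel, pincushion)
```

Undistorting the plate, compositing, then calling `distort` again on the result is the round trip that keeps CGI elements matched to the lens image.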

When generating CGI, the artists need to guess at distances, angles, positions unless detailed metadata is available. It can take several passes (rendered every time) to refine these guesses. With metadata, the artist will know the position and angle data; iris and focal distance let 'em render proper focus / bokeh as well, all in the first pass.

Typical matchmove workflow: characterize lens distortion, correct distortion in plate, track scene and solve, composite in CGI elements, re-distort. First three steps greatly aided with lens metadata.

No Panavision on the list yet, but Panavision is working on it.

Summary: the lenses have metadata at no additional cost, the metadata travels in the raw files with no separate conveyance required, CGI geometry is more accurate, and VFX guesswork is reduced. With ACES IIF, metadata can be preserved through the entire post process.

Lens metadata does NOT replace having an on-set VFX data wrangler.

The Design of a Lightfield Camera

Siegfried Foessel, Fraunhofer Institut

Lightfield: each object emits or reflects light, reflection can be specular or diffuse. All light rays combined form a lightfield.

Conventional cameras collect light from one beam of directions. On each point of the focal plane several light rays will be integrated. Aperture determines how many rays will be integrated; if large, there's blur from having more rays.

A pinhole camera can capture the lightfield at a specific point (the pinhole). But the hole can't be infinitely small (intensity loss), and the larger the hole, the less sharp the image.

Microlens array (MLA). Complete lightfield can't be captured by a sparse representation.

Or can use a camera array.

First experiment: one camera with 2-axis positioning system, 17×17 positions.

Tests creating depth maps:

Designed microlens array. What dimensions are needed? Try a multiplicity of 5: each object is imaged 5×5 times on sensor (oversampling). Used a 5 Mpixel industrial camera with array in front of sensor.

Image captured into computer for processing, got 1-3 fps on a 24-core computer for a VGA-sized final image. If you only have a small number of images you get artifacts:

You need a fixed focus, fixed aperture on main lens, otherwise you need to recalibrate everything.

Several challenges: assembling MLA on sensor for absolute defined separation distance; calibration; real-time computation. Needs dense sampling. High-res video a challenge. New strategy: use camera arrays instead of microlens array in the future. Motivation: each camera can capture high-res data. Challenges: need lots of cameras, cameras need to be small (mobile phone cams). Built small test camera using embedded Linux; version 1 at NAB should shoot 1 fps, version 2 will shoot 30-60 fps. Cameras capture images, send to central processing over IP, images get computed and output.

Conclusions: new creative opportunities in post. Microlens cameras are small and compact, but compromise between resolution and flexibility; hard to calibrate, low res depth maps. Multi-camera arrays more promising.

Qs: what about handling the masses of data? Looking at low complexity coding for compression, 4:1 to 8:1. Resolution of depth map? Depends on triangulation from image data (multiple views, 5×5 on our system).

Image res? About 1/3 of the sensor res (on our multiplicity-of-5 array), so if sensor res is 3K, final image map res is 1K.
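On the triangulation point: a minimal sketch of the standard two-view relation for parallel pinhole cameras, depth = focal length × baseline / disparity. This is the textbook formula, not necessarily what Fraunhofer's pipeline does, and the sample numbers are made up.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for two parallel pinhole views.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two viewpoints, in meters
    disparity_px: horizontal shift of the same point between the views
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers: 1000 px focal length, 1 cm between views, 5 px shift.
print(depth_from_disparity(1000, 0.01, 5))  # about 2 meters
```

Since depth varies inversely with disparity, depth resolution depends on how finely disparity can be measured, which is one way to read the low-res depth maps mentioned for the microlens approach.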


New Paradigm for Digital Cinema Encoding

Chad Fogg (presenter) and Keith Slavin, isovideo LLC

[Actually seems to be a discussion of frame-rate conversion. -AJW]

Frame transformations: traditional broadcast codec chain:

Most common input: 1/24 sec frame input converted to 1/30 sec frames; normally map 4 frames to 10 fields, or 3:2 pulldown. Codecs sometimes synthesize frames at cut points to maintain cadence. Another reality: mixed content with progressive, interlaced, and 3:2 content intermixed.
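For reference, the 4-frames-to-10-fields mapping can be sketched as below. The function name and the top/bottom labeling are my own choices for illustration; a real encoder also has to deal with cadence breaks at cuts, which this ignores.

```python
def pulldown_32(frames):
    """Map film frames to interlaced fields with a repeating 2-3 cadence.

    Returns a list of (frame, parity) tuples; parity alternates top/bottom.
    """
    fields = []
    for i, frame in enumerate(frames):
        repeats = 2 if i % 2 == 0 else 3      # 2 fields, then 3, then 2, ...
        for _ in range(repeats):
            parity = "top" if len(fields) % 2 == 0 else "bottom"
            fields.append((frame, parity))
    return fields


fields = pulldown_32(["A", "B", "C", "D"])
print(len(fields))                         # 10
print("".join(f for f, _ in fields))       # AABBBCCDDD
```

Pairing those fields into video frames gives AA, BB, BC, CD, DD: two of every five frames mix two different film frames, which is what a display has to detect and undo when it tries to recover the source.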

HEVC requires all fields or all frames. The display needs to figure out how to reassemble. Back to display preprocessing: no metadata to assist. Display devices attempt to recover source, usually works, sometimes not.

In an ideal universe each coded frame has unique metadata, frames have variable timing, etc.

Motivation: Frame rate downconversion: mobile devices, fixed frame rate services 60-to-50fps, HFR to 24fps, preprocessing to reduce entropy, blur (panic mode for STBs).

Encoding for tablets: needs more blur for lower frame rates. Mobiles lack sophisticated display processing. Proper blurring saves 15% bandwidth.

How to convert: simple frame dropping (leads to motion aliasing). Consider adding motion blur, related to exposure time.
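A toy comparison of the two approaches, assuming a one-dimensional "image" with a dot moving one pixel per frame. Plain frame averaging is used here as a crude stand-in for added motion blur; it is not what isovideo presented (their method presumably uses motion estimation), and the function names are mine.

```python
def drop_frames(frames, factor):
    """Keep every `factor`-th frame; motion becomes jerky (temporal aliasing)."""
    return frames[::factor]


def blend_frames(frames, factor):
    """Average each group of `factor` frames, mimicking a longer exposure."""
    out = []
    for i in range(0, len(frames) - factor + 1, factor):
        group = frames[i:i + factor]
        out.append([sum(px) / factor for px in zip(*group)])
    return out


# Eight frames, eight pixels wide, with a dot at position t in frame t.
frames = [[1.0 if x == t else 0.0 for x in range(8)] for t in range(8)]

print(drop_frames(frames, 2)[1])    # dot jumps straight from pixel 0 to pixel 2
print(blend_frames(frames, 2)[0])   # dot smeared across pixels 0 and 1
```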

[many slides missed due to very fast presentation. I'll just give up, and grab photos. FRC is Frame Rate Conversion, DI is De-Interlacing. -AJW]

Ethernet AVB and Video

Steve Lampen, Belden

Audio is easy on Ethernet AVB (Audio Video Bridging: http://en.wikipedia.org/wiki/Audio_Video_Bridging): GigE = 300+ channels. Video, not so easy: GigE = 1 compressed stream, 10Gig = 6 HD-SDI streams. What about 4K? 8K?
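The video numbers are easy to sanity-check with raw line rates. This sketch uses the nominal HD-SDI rate of 1.485 Gb/s and 3G-SDI at 2.97 Gb/s, and it deliberately ignores Ethernet framing overhead and any AVB bandwidth-reservation limits, so treat it as an upper bound. The function name is mine.

```python
HD_SDI_GBPS = 1.485        # nominal HD-SDI serial rate
THREE_G_SDI_GBPS = 2.97    # nominal 3G-SDI serial rate


def streams_per_link(link_gbps, stream_gbps):
    """Whole uncompressed streams that fit in a link, ignoring all overhead."""
    return int(link_gbps // stream_gbps)


print(streams_per_link(1, HD_SDI_GBPS))     # 0: GigE can't carry uncompressed HD-SDI
print(streams_per_link(10, HD_SDI_GBPS))    # 6
print(4 * THREE_G_SDI_GBPS)                 # quad 3G-SDI 4K payload, in Gb/s
```

Quad 3G-SDI for 4K comes to nearly 12 Gb/s, which already overflows a 10Gig link before any overhead.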

Where is Ethernet going?

10Gig copper shielded or unshielded 625 MHz / pair, 4 pair, 100 meters, PAM16 coding scheme.

40 Gig copper, 40GBaseT, Cat8, study results by 2015. 2 GHz per pair? Overall shield or per-pair shield? Alien crosstalk +20 dB. 4 pair? 30 meters only (supports 90% of data center needs)???

40 Gig fiber, 40GBaseSR4 or LR4, laser-based optical cabling, 8 fiber = 4 lanes each direction, 10 km distances. Re-use 10GB connectors; break out the fibers to multiple 10GB ports.

100 Gig, 100GBaseT, probably beyond the capability of copper. 100G fiber: 100GBaseLR10, 2 GHz per fiber, WDM 4 wavelengths, or 10G fiber x 10 lanes x 2 directions = 20 fibers!

Where is video going?

What could change this would be room-temperature superconductors: infinite bandwidth, infinite distance… but what would it cost?

Bandwidth on copper UTP, based on 3rd harmonic:

“Hmm, 4:2:0. Look familiar?” (much laughter)

Based on clock frequency, maybe 50% more capacity, except for 4K, which stays the same.

How to win an Emmy? Merge networking and video. 256×256 video switchers are commonplace, 128-port Ethernet switch is huge. In video, switch on vertical interval; in Ethernet, how do you find that switching point?

Who wants AVB now? Automotive industry. 2ms latency is only 2.5 inches at 70mph. Airlines: weight savings is huge, simple networking scheme, multiple channel seatback video.
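The automotive figure checks out with simple unit conversion; a quick sketch (function name is mine):

```python
def travel_inches(speed_mph, latency_s):
    """Distance covered during a given latency, in inches."""
    inches_per_second = speed_mph * 5280 * 12 / 3600   # miles/hour -> inches/second
    return inches_per_second * latency_s


print(round(travel_inches(70, 0.002), 2))   # 2.46
```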

Qs: commercial products for video on AVB? No, not yet. Maybe for airlines, nothing you'd want. SMPTE is working on AVB for professional applications. It's not baked yet, still a way to go. Why use copper for short runs if your long runs are optical? Depends on what your boxes support. Fiber has been harder to terminate, it's getting better. If the IT world is moving to fiber, maybe we should also move to fiber? Economies of scale may drive us that way.

Converting SD and HD Content to 4K Resolution: Traditional Up-Conversion Is Not Enough

Jed Deame, Cube Vision Technologies

How do we get legacy content onto 4K displays?

4K interfaces: quad / octal HD-SDI, dual / quad 3G-SDI, HDMI 1.4 a/b (coming soon: HDMI 2.0), HDBaseT, DisplayPort 1.2.

Building a 4K canvas: feed multiple HD images to a 4K multi-viewer.

4K format conversion: input signal, de-interlace, scale, colorspace convert, processing (enhance, NR), output.

Why de-interlace? Progressive displays; odd & even fields not spatially coherent, de-interlace prior to scaling. Non-motion adaptive (bob & weave), motion-adaptive, motion-compensated (preferred).

Motion compensation: still items, take both fields. Moving things, toss one field, interpolate from remaining data. Low-angle interpolation, interpolating (sampling) across diagonals, not just verticals.
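The per-pixel choice described above (keep both fields where nothing moves, interpolate where something does) can be sketched as follows. Strictly speaking this is a simple motion-adaptive de-interlacer, not true motion compensation with motion vectors, and it only interpolates vertically rather than along low angles. The motion test (comparing against the previous same-parity field), the threshold, and all names are assumptions for illustration.

```python
def deinterlace(top, bottom, prev_top, threshold=10):
    """Build a progressive frame from a top field and a bottom field.

    top, bottom, prev_top: lists of rows (lists of pixel values), same size.
    Where the top field changed since prev_top, the pixel is treated as moving
    and the missing line is interpolated from the top field alone; elsewhere
    the bottom field is woven in unchanged.
    """
    height = len(top)
    frame = []
    for j in range(height):
        frame.append(list(top[j]))                        # keep the top-field line
        below = top[j + 1] if j + 1 < height else top[j]  # repeat last line at the edge
        line = []
        for x, woven in enumerate(bottom[j]):
            moving = abs(top[j][x] - prev_top[j][x]) > threshold
            if moving:
                line.append((top[j][x] + below[x]) / 2)   # toss the other field, interpolate
            else:
                line.append(woven)                        # static: take both fields
        frame.append(line)
    return frame


top = [[100, 100], [100, 100]]
prev_top = [[100, 0], [100, 100]]     # pixel (0, 1) changed since the last field
bottom = [[50, 50], [50, 50]]
print(deinterlace(top, bottom, prev_top))
```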

Types of scalers: bi-linear (mostly unused), polyphase FIR filters (1D and 2D), performance depends on # of taps, # of phases, the algorithm.

Image enhancement: get more perceived detail without adding noise. Exaggerate contrast but avoid ringing.

How to implement? ASICs, HDV is EOL, VXP can't support 4K, EOL? Consumer ICs, low performance, cost-focused, no b'cast support.

FPGAs, only practical solution for b'cast/pro market, requires IP, takes years to implement (or license, 3rd party IP available). Xilinx, Altera, Lattice. FPGAs can handle all steps of format conversion process: what used to take a big box can now be done on a chip.

Cost of materials: about $50. Output can go 15ft as-is, 30ft with active equalization. True system on chip.

Qs: Can the FPGAs be reprogrammed for higher resolutions? Yes, but the challenge is bandwidth. Can also add improved NR, enhancement, etc. Why aren't more people using DisplayPort? Good question. Computer folks using DisplayPort, video using HDMI, b'cast using SDI. Downscaling? Frame rate conversion? Yes, we do that, too. But 4K FRC probably won't fit in a single $50 FPGA. Does the order of de-interlace / scale / colorspace / etc. matter? Yes, for example you want to linearize color first, process, then add gamma back in. If the parts cost $50, what does the IP cost? A lot more than $50! But you're saving 5-6 man-years of effort and 2-3 years of risk. Costs much less than doing it yourself.
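Why the linearize-first order matters: a small sketch comparing the average of a black and a white pixel (the simplest possible scaling filter) done on gamma-encoded values versus in linear light. A plain 2.2 power law is assumed here for simplicity; it is not the exact Rec. 709 transfer curve, and the function names are mine.

```python
GAMMA = 2.2   # assumption: simple power law, not the exact Rec. 709 curve


def to_linear(v):
    """Gamma-encoded value (0..1) to linear light."""
    return v ** GAMMA


def to_gamma(v):
    """Linear light (0..1) back to a gamma-encoded value."""
    return v ** (1.0 / GAMMA)


def average_gamma(a, b):
    """Average directly on encoded values (the wrong order)."""
    return (a + b) / 2


def average_linear(a, b):
    """Linearize, average, then add gamma back in."""
    return to_gamma((to_linear(a) + to_linear(b)) / 2)


print(average_gamma(0.0, 1.0))               # 0.5
print(round(average_linear(0.0, 1.0), 3))    # about 0.73
```

Filtering on the encoded values gives a result that is too dark, which shows up as darkened fine detail and edges after scaling.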

REDRAY 4K Digital Cinema Player Project

Stuart English, RED Digital Cinema

This is where we do design and manufacturing:

Cameras: we've deployed 2K, 4K, 5K capture. Raw YCC images. Shoot motion and stills, PL or APS (Canon EF) lenses.

4K color gamuts: television, cinema, print, special events (in most industries gamut is defined, but wider-gamut displays may be used elsewhere)? All different.

Stereo3D: synced 5K raw, lightweight, 24/48fps acquisition. A body of work is saying we need at least 4K per eye for good stereo.

Processing: debayer, grade, monitor, crop & scale, file export (DPX, TIFF, OpenEXR, ACES, h.264, ProRes, DNxHD). All available in REDCINE-X.

What about 4k distro? Limited: no b'cast, no packaged media, some DCI cinema.

Applications for 4K / UHDTV display: consumer, advertising, theatrical, corporate, dailies?

White items are strong points of each system.

20 Mbps data rate originally, new quality profiles, both higher and lower. Very secure. No optical disk; networked.

Scales down to HD, HDMI 1.3. 4K DCI, UHDTV, 12-bit 4:4:4. Also synced quad HD outputs for fused HD panels, or 4 panels in digital signage. Even RS170A, can sync multiple REDRAYs for multichannel output for advertisers. Special events: stereo 4K, HFR up to 60fps (in stereo3D), multi-player sync option.

Ingest: SDHC card: 32 GB holds about 200 minutes. Can play from card or copy to internal store. USB drives can also be mounted. Preferred method: OTT (over-the-top) Internet CDN (content delivery network), non-realtime transfer, also a WiFi app (iOS and Android) to control it.

Storage: 1TB internal HDD, about 100 hours of content. Also external USB-2 and eSATA (FAT32 format) drives can be connected.
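The card and drive figures are consistent with the 20 Mbps data rate mentioned earlier. A back-of-envelope sketch, assuming decimal gigabytes and terabytes and ignoring audio and filesystem overhead (function name is mine):

```python
def playing_time_hours(capacity_bytes, bitrate_bps):
    """Hours of content that fit in a given capacity at a constant bit rate."""
    return capacity_bytes * 8 / bitrate_bps / 3600


RATE_BPS = 20e6   # 20 Mbps

print(round(playing_time_hours(32e9, RATE_BPS) * 60))   # 213 minutes on a 32 GB card
print(round(playing_time_hours(1e12, RATE_BPS)))        # 111 hours on a 1 TB drive
```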

Audio: HDMI 1.3, HDCP. Stereo, 5.1, 7.1. 24 bit 48 kHz.

Security: AES-256. ASIC serial number used to lock it. Secured firmware. Broadcast or conditional DRM. Firmware has some APIs to control it but the firmware itself is locked down. There is no option on the DRM, the box IS rights-managed. But you can use broadcast DRM to allow play-anywhere.

Video: RREncoder, REDCINE-X plugin. Converts DPX, TIFF and R3D raw. Color can be 709, P3, XYZ, sRGB. Audio: 48 kHz WAV, allows trimming. Output 2D or 3D, specify frame rate, quality (high, medium, low), licensing (title UUID, ASIC serial #, play dates allowed, expiration, max # of plays; distributor license verification).

All screenshots are copyrighted by their owners. Some have been cropped / enhanced to improve readability at 640-pixel width.

Disclaimer: I'm attending the Tech Retreat on a press pass, but aside from that I'm paying my way (hotel, travel, food).