I know this post has a clickbait-sounding title, and for that I apologize, but I’m not writing this for click-throughs or ad impressions. This is about what software can do now, and a guess at what it will do in the very, very near future.

Right now, software “reads” articles and emails; this is how Google analyzes and ranks what we write and figures out what to sell us. Software even “writes” articles, more and more every day. It doesn’t write the opinion pieces or the long, interesting New Yorker think-piece. It goes for the easy stuff: financials, sports scores, crime beats. The kind of stuff that feels all boilerplate and perfunctory.

http://blog.ap.org/2014/06/30/a-leap-forward-in-quarterly-earnings-stories/

The architecture of these algos is derived from the intellectual work of millions of human beings over a massive span of time. Of course, none of these writers has been remunerated for this work; it’s simply the aggregate data set used to develop the models. This is the central critique in Jaron Lanier’s book “Who Owns The Future?”

http://www.nytimes.com/2013/05/06/books/who-owns-the-future-by-jaron-lanier.html?_r=0

My thesis here is that what has happened in the world of print will happen in the world of video post-production, specifically in the job of the editor.

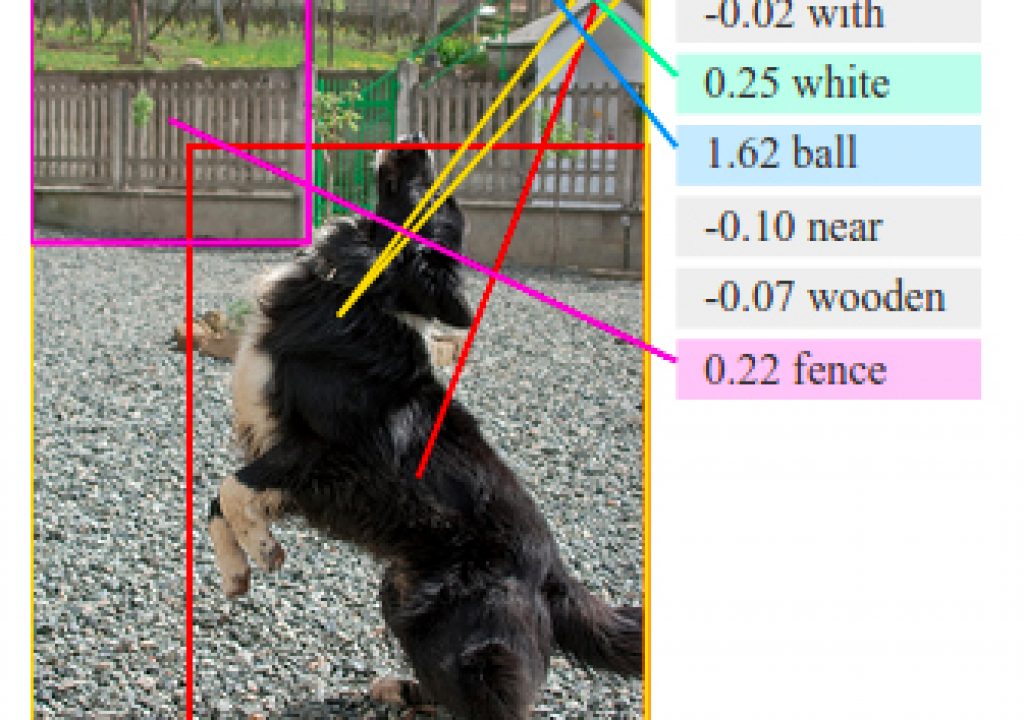

The use of deep learning and neural networks for image recognition is a hot topic in the tech press. The trippy images coming out of Google’s DeepDream project are amusing, alien and unintelligible, but that’s really just the Kai’s Power Tools, pseudo-acid-head, arty front-end of a larger, far more ambitious project: Google Photos.

http://www.imore.com/google-photos-may-be-free-what-personal-cost

In exchange for free, unlimited online storage of photos and videos, you agree to let Google scan your data to identify people, places and things. The platform then tags and organizes everything for you, automatically. This is really handy for me, the user, and amazingly data-rich for Google, the big data mining company.

But what does this have to do with editing?

http://cs.stanford.edu/people/karpathy/deepimagesent/

As far as editorial style goes, there are already millions of movies and television shows online that can serve as an enormous database to teach a deep learning algorithm what editing is and how it has been done. There will be a generation of media creators that will use the digitized and aggregated knowledge of a hundred years of cinema to drive editorial decisions, the same way publishers now rely on Natural Language Generators to create books, articles and blog posts.

Where does this leave professional video editors?

For the professionals working in corporate and industrial video, it will mean a further erosion of the craft towards a deskilled workforce reliant on software to generate “content”. It will push more and more of the workload onto the producers, as it promises a post pipeline with fewer people and a faster turnaround. For the narrative film and television editorial departments, much of the assistant editing work is already handled by digital asset management software, with less and less human input needed. On the indie, no-budget front, having intelligent software do the heavy lifting of media management and edit assembly will be an amazing time and money saver.

Just as there is a generation of editors that has never touched a flatbed or edited in a linear video suite, there will be media creators that aren’t clear on the language of how editing works. In order to edit, it won’t be a requirement to know when or why one would use a medium or a close-up, or how best to juxtapose images in a sequence. An easy-to-use piece of software will do it for you, and do a decent enough job about ninety percent of the time.