A.I. Artificial Intelligence is not just the title of a 2001 Spielberg film, a project that Stanley Kubrick had in mind after acquiring the rights to Brian Aldiss’ story in the early 1970s. AI is everywhere these days, so pervasive that some will say it is a virus that has grown with this generation. In imaging it is used for marketing purposes and, misleadingly, touted as the way to transform your bad photos into works of art.

Artificial Intelligence has its advantages, and we’ve recently shown some of them here at ProVideo Coalition in applications such as RTX Voice or the Krisp app, used to remove your neighbour’s barking dog from your Zoom meetings. AI is now present in a variety of software, helping in ways that would not be possible without it. It’s also a buzzword adopted by marketing, sometimes to describe an app in terms that exceed what it really does.

Sony has now announced that it has developed the first intelligent vision sensors with AI. The company will release two models, which it describes as the first image sensors in the world to be equipped with AI processing functionality (according to Sony research as of the announcement on May 14, 2020). According to the company, “including AI processing functionality on the image sensor itself enables high-speed edge AI processing and extraction of only the necessary data, which, when using cloud services, reduces data transmission latency, minimizes any privacy concerns, and reduces power consumption and communication costs.”

These sensors are not going to be part of your next camera, at least not in the near future, but they are an interesting advance that may well help change future sensor designs. For now, these products expand the opportunities to develop AI-equipped cameras, enabling a diverse range of applications in the retail and industrial equipment industries and contributing to building optimal systems that link with the cloud.

Before we continue and share with ProVideo Coalition readers some more information about the sensors, it may be interesting to travel 30 years back in time and look at the introduction of the Maxxum 7xi 35mm SLR by Minolta in 1991. It was the first camera with “fuzzy logic”, making it the first camera in the world able to “think”, a feature loved by some and hated by others. As soon as the photographer looked through the viewfinder, the microchip brain in the camera would begin to focus and frame, relying on its 16-bit CPU with 20MHz clock speed and the “Expert Intelligence of the world’s best photographers” to create the best picture.

A series of Program-Cards, of which Minolta offered a variety, pre-programmed for things such as Landscape or Portrait, allowed users to easily configure the camera for different needs… as long as they had acquired the cards. This led to some funny situations at the time, with amateurs stating, for example, that they could not take a portrait because they had left the specific card at home. Some of the Program-Cards offered interesting options, like the Customized Function Card xi, which allowed users to configure specific parameters not accessible any other way. Still, the story I mention clearly shows what happens when people depend too much on technology.

This brief look at the past should not be seen as a negative comment about the use of AI, but rather as a sign of the evolution of cameras, and of how Sony, which after all inherited the Minolta technology that made the Maxxum 7xi possible, has now taken us to a new level, beyond fuzzy logic. It’s no longer the camera that has AI, it’s the sensor.

The first two sensors with AI

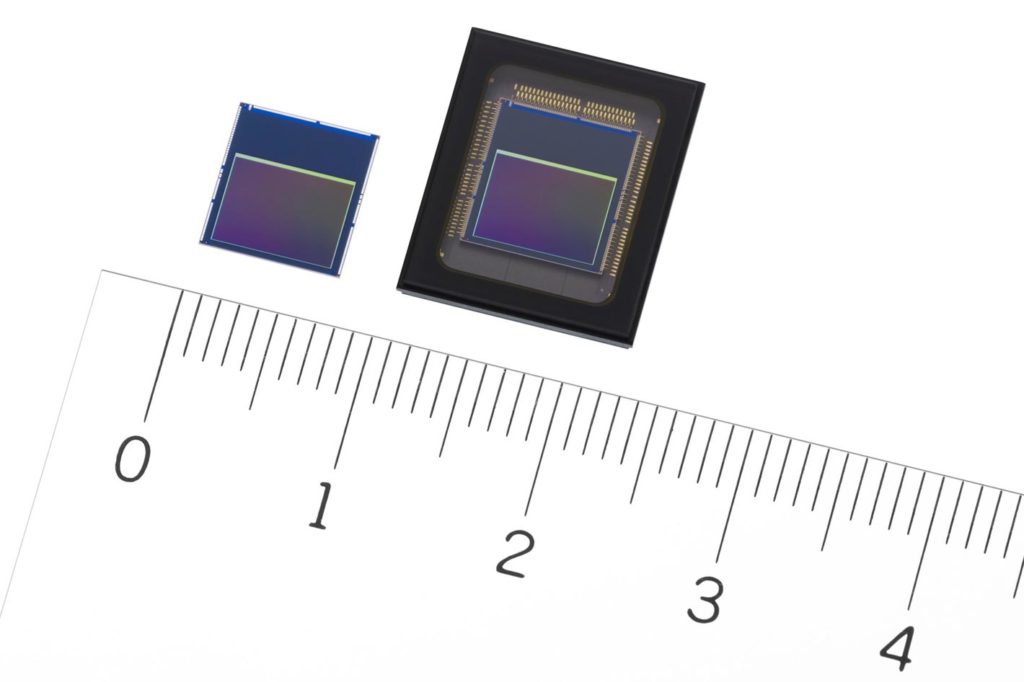

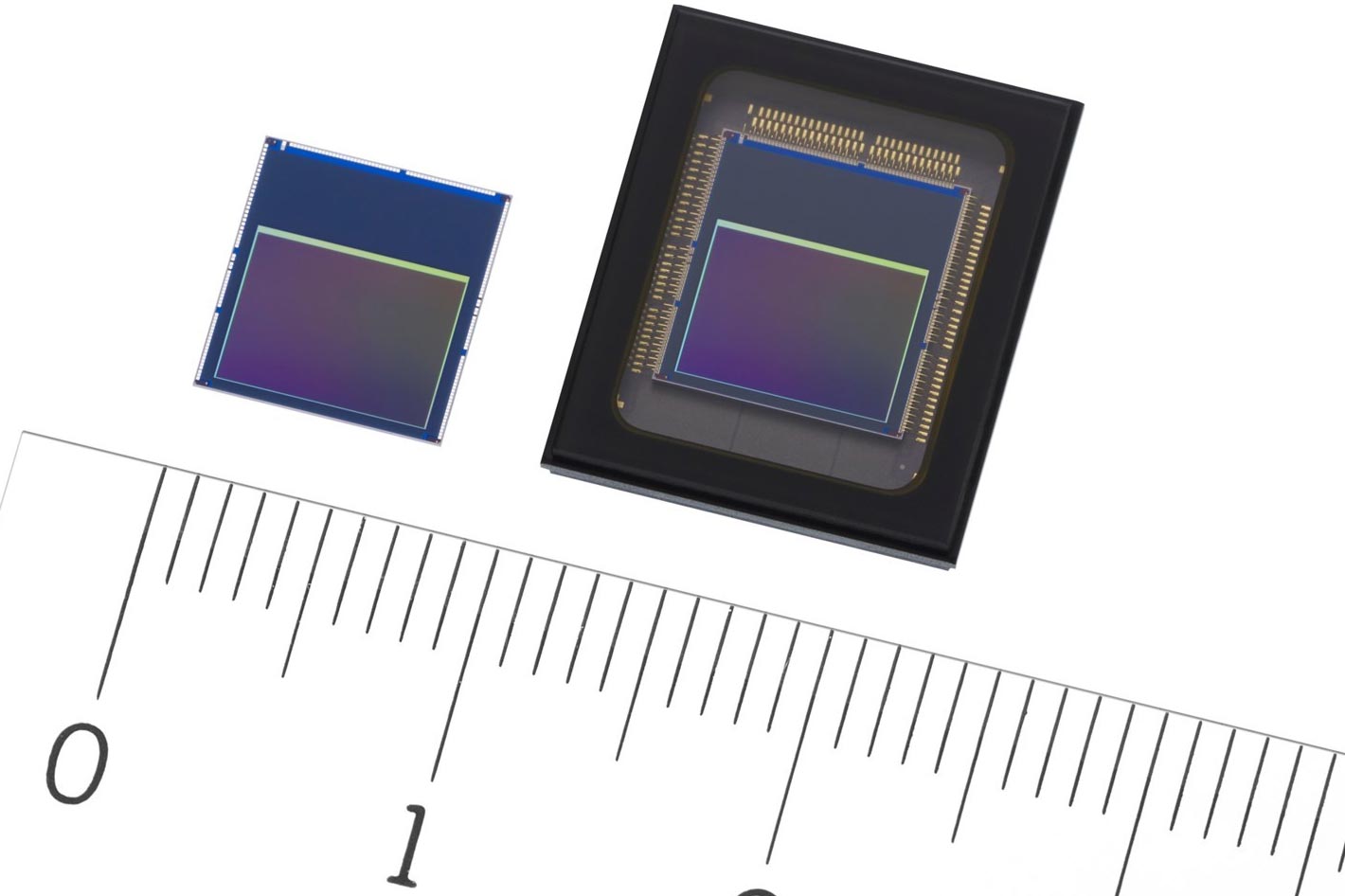

The new AI sensor from Sony comes in two versions: the IMX500, a 1/2.3-type (7.857 mm diagonal) intelligent vision sensor with approx. 12.3 effective megapixels (bare chip product), now available, and the IMX501, a 1/2.3-type (7.857 mm diagonal) intelligent vision sensor with approx. 12.3 effective megapixels (package product), which will be available in June 2020.

The new sensor products feature a stacked configuration consisting of a pixel chip and a logic chip. They are the world’s first image sensors to be equipped with AI image analysis and processing functionality on the logic chip. The signal acquired by the pixel chip is processed via AI on the sensor, which eliminates the need for high-performance processors or external memory and enables the development of edge AI systems.

The sensor outputs metadata (semantic information belonging to image data) instead of image information, making for reduced data volume and minimizing any privacy concerns. Moreover, the AI capability makes it possible to deliver diverse functionality for versatile applications, such as real-time object tracking with high-speed AI processing. Different AI models can also be chosen by rewriting internal memory in accordance with user requirements or the conditions of the location where the system is being used.

Sensors with Selectable AI model

Here are the main features of the IMX500 and IMX501 sensors from Sony:

■ World’s first image sensor equipped with AI processing functionality

The pixel chip is back-illuminated and has approximately 12.3 effective megapixels for capturing information across a wide angle of view. In addition to the conventional image sensor operation circuit, the logic chip is equipped with Sony’s original DSP (Digital Signal Processor) dedicated to AI signal processing, and memory for the AI model. This configuration eliminates the need for high-performance processors or external memory, making it ideal for edge AI systems.

■ Metadata output

Signals acquired by the pixel chip are run through an ISP (Image Signal Processor), AI processing is performed at the processing stage on the logic chip, and the extracted information is output as metadata, reducing the amount of data handled. Ensuring that image information is not output helps to reduce security risks and minimize any privacy concerns. In addition to the image recorded by the conventional image sensor, users can select the data output format according to their needs and uses, including ISP format output images (YUV/RGB) and ROI (Region of Interest) specific area extract images.
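To give a rough sense of why metadata output reduces the amount of data handled, the sketch below compares the size of one uncompressed frame with the size of a small metadata record. Only the 12.3-megapixel figure comes from the article. The 2 bytes per pixel (an 8-bit YUV 4:2:2 layout), the JSON encoding and the detection fields are assumptions made for illustration, and do not describe Sony's actual output format.

```python
import json

# Approx. 12.3 effective megapixels, as quoted in the article.
EFFECTIVE_PIXELS = 12_300_000
# Assumption for illustration: 8-bit YUV 4:2:2, i.e. 2 bytes per pixel.
BYTES_PER_PIXEL = 2


def frame_bytes(pixels=EFFECTIVE_PIXELS, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size in bytes of one uncompressed image frame."""
    return pixels * bytes_per_pixel


def metadata_bytes(detections):
    """Size in bytes of a detection list encoded as compact JSON.

    JSON is a hypothetical stand-in for whatever metadata format
    a real sensor would emit.
    """
    return len(json.dumps(detections, separators=(",", ":")).encode("utf-8"))


# Hypothetical metadata for one frame: two detected people.
detections = [
    {"label": "person", "score": 0.91, "box": [120, 340, 260, 720]},
    {"label": "person", "score": 0.84, "box": [900, 310, 1040, 700]},
]

image_size = frame_bytes()
meta_size = metadata_bytes(detections)
print(f"Uncompressed frame: {image_size / 1e6:.1f} MB")
print(f"Metadata record:    {meta_size} bytes")
print(f"Ratio:              about {image_size // meta_size:,} to 1")
```

Under these assumptions a single frame is tens of megabytes while the metadata is a few hundred bytes at most, which is the gap the article is pointing at.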

■ High-speed AI processing

When a video is recorded using a conventional image sensor, it is necessary to send data for each individual output image frame for AI processing, resulting in increased data transmission and making it difficult to deliver real-time performance. The new sensor products from Sony perform ISP processing and high-speed AI processing (3.1 milliseconds processing for MobileNet V1, an image analysis AI model for object recognition on mobile devices) on the logic chip, completing the entire process in a single video frame. This design makes it possible to deliver high-precision, real-time tracking of objects while recording video.
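The "single video frame" claim can be checked with simple arithmetic: a frame at a given frame rate lasts 1000 / fps milliseconds, and the processing has to fit inside that window. The sketch below uses the 3.1 ms MobileNet V1 figure quoted above; the frame rates and the `other_ms` allowance for ISP and readout time are hypothetical values chosen for illustration, not sensor specifications.

```python
# MobileNet V1 processing time quoted in the article, in milliseconds.
AI_LATENCY_MS = 3.1


def frame_period_ms(fps):
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def fits_in_one_frame(fps, ai_ms=AI_LATENCY_MS, other_ms=0.0):
    """True if AI processing plus any other per-frame work fits in one frame.

    other_ms is a hypothetical allowance for ISP and readout time.
    """
    return ai_ms + other_ms <= frame_period_ms(fps)


# Frame rates below are assumptions used only to illustrate the budget.
for fps in (30, 60, 120):
    period = frame_period_ms(fps)
    headroom = period - AI_LATENCY_MS
    print(f"{fps:>3} fps: frame lasts {period:5.2f} ms, "
          f"headroom after AI {headroom:5.2f} ms, "
          f"fits: {fits_in_one_frame(fps)}")
```

At 30 frames per second, for instance, each frame lasts about 33 ms, so a 3.1 ms inference leaves most of the frame time free for other work.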

■ Selectable AI model

Users can write the AI models of their choice to the embedded memory, and can rewrite and update them according to their requirements or the conditions of the location where the system is being used. For example, when multiple cameras employing this product are installed in a retail location, a single type of camera can be used with versatility across different locations, circumstances, times, or purposes. When installed at the entrance to the facility it can be used to count the number of visitors entering; when installed on a store shelf it can be used to detect stock shortages; when on the ceiling it can be used for heat mapping store visitors (detecting locations where many people gather), and the like. Furthermore, the AI model in a given camera can be rewritten from one used to generate heat maps to one for identifying consumer behavior, and so on.
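The idea of one camera type serving different purposes by swapping its model can be sketched in a few lines. Everything here is hypothetical: `EdgeCamera`, `write_model` and the two toy "models" are illustrative names, not Sony's API, and the frame is simplified to a list of already-labelled objects so the example stays self-contained.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for a frame: a list of labelled objects.
Frame = List[Dict]
Model = Callable[[Frame], Dict]


def count_visitors(frame: Frame) -> Dict:
    """Toy 'model' for an entrance camera: count people in the frame."""
    return {"visitors": sum(1 for obj in frame if obj.get("kind") == "person")}


def detect_stock_shortage(frame: Frame) -> Dict:
    """Toy 'model' for a shelf camera: list shelf slots that are empty."""
    empty = sorted(
        obj["name"]
        for obj in frame
        if obj.get("kind") == "shelf_slot" and obj.get("empty")
    )
    return {"empty_slots": empty}


class EdgeCamera:
    """Hypothetical camera whose on-sensor model can be rewritten."""

    def __init__(self) -> None:
        self.model_name: Optional[str] = None
        self._model: Optional[Model] = None

    def write_model(self, name: str, model: Model) -> None:
        """Replace the current model, like rewriting the embedded memory."""
        self.model_name = name
        self._model = model

    def process(self, frame: Frame) -> Dict:
        """Run the current model and return metadata only, never the frame."""
        if self._model is None:
            raise RuntimeError("no AI model has been written to the camera")
        return {"model": self.model_name, **self._model(frame)}


frame = [
    {"kind": "person"},
    {"kind": "person"},
    {"kind": "shelf_slot", "name": "A3", "empty": True},
    {"kind": "shelf_slot", "name": "A1", "empty": False},
]

camera = EdgeCamera()
camera.write_model("visitor_count", count_visitors)
print(camera.process(frame))

# Same camera, new purpose: rewrite the model and process again.
camera.write_model("stock_shortage", detect_stock_shortage)
print(camera.process(frame))
```

The point of the design is that the hardware and the calling code stay the same; only the model written to the camera changes between the entrance, shelf and ceiling use cases described above.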

It’s evident, from reading the information available, that this type of sensor makes no sense in your next photo or video camera, but some of the technology presented here may well help change the sensors now being used. Aspects such as high-speed AI processing, or the fact that the configuration of these sensors eliminates the need for high-performance processors or external memory, may be adopted in the future, or contribute to sensors in our cameras that are able to “think” as the brain in the Minolta Maxxum 7xi did three decades ago. Despite its flaws, that camera helped move the industry forward.