For decades, the push for resolution in electronic cameras was practically a war, a way of life, an epic Dungeons and Dragons Quest for the Holy Pixel. Entire careers were dedicated to it. It’ll show up in the archaeological record. We really, really wanted TV cameras to be as sharp as movie cameras.

And now they are, and way beyond. We have so much resolution we quite literally don’t know what to do with it. One example – though certainly not the only one – is Blackmagic’s Ursa Mini Pro 12K, which won’t produce HD or 4K ProRes files internally as some of its potential users might have liked.

To be fair, it’s hard to question the technological validity of a raw-only approach. From a purely theoretical standpoint, it doesn’t make much sense to interpolate full RGB images out of the sensor mosaic, then decimate them (often) down to subsampled component video, then compress the result. That’s an inflation followed by two stages of deflation. Raw does make sense, much as it’d be nice to see it standardized.
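To put rough numbers on that inflation and deflation, here is a back-of-envelope sketch. It assumes a mosaic sensor that records one value per photosite, a demosaic that produces three values (R, G, B) per pixel, and 4:2:2 subsampling, which averages two values per pixel. The 4096 × 2160 frame size and the `samples_per_frame` helper are purely illustrative, and the sketch ignores bit depth and compression entirely.

```python
def samples_per_frame(width, height):
    """Count the values stored per frame at each stage (illustrative only)."""
    photosites = width * height
    return {
        "raw mosaic": photosites * 1,       # one value per photosite
        "demosaiced RGB": photosites * 3,   # R, G and B for every pixel
        "4:2:2 component": photosites * 2,  # full luma, half-rate chroma
    }


# Hypothetical frame size, chosen only to make the ratios concrete.
counts = samples_per_frame(4096, 2160)
raw = counts["raw mosaic"]

for stage, n in counts.items():
    print(f"{stage:>16}: {n:>12,} values ({n / raw:.1f}x raw)")
```

Under those assumptions, the demosaic triples the amount of data before subsampling claws back a third of it, and only then does compression start.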

From a rather less theoretical standpoint, there are lots of reasons we might want a more conventionally sized and conventionally encoded picture, from viewfinding and monitoring to compatibility with clients who might not want to deal with raw, no matter how easy Blackmagic manages to make its raw workflow. So why didn’t Blackmagic simply add HD and 4K ProRes options to the 12K, even if just for the avoidance of doubt?

All cameras do have HD and 4K resolution outputs

Well, to an extent, it did. More or less every modern camera must have downscaled viewfinder and monitoring outputs. The real problem is one that goes back all the way to 2008 and Canon with its EOS-5D Mk. II. Among the many drawbacks of that delicious-but-flawed epoch-maker was the terrible moiré, which sent tiny dots dancing all over an otherwise persuasive image. It did that because there were far, far too many photosites on the sensor for the camera to read out all at once at video rates. The only solution was to offload only a scattering of them, creating a sensor that constantly missed things as they fell between the active photosites, which is what causes aliasing.

That certainly isn’t the case with Blackmagic’s camera, but the issue is the same: more pixels than we want or need for HD or 4K images, leading to compromises in other areas. Let’s be clear: everything about the 12K is vastly, vastly better than the miserable aliasing on the 5D Mk. II, but the issue of having more pixels than is convenient for some applications is not a new one.

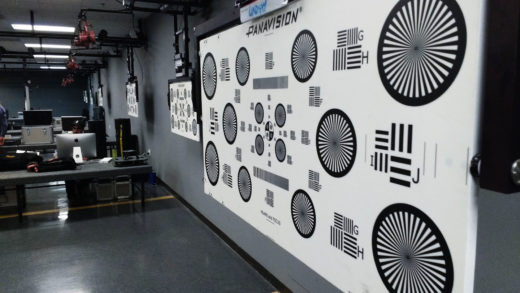

What’s widely misunderstood is that scaling digital images well, without introducing a variety of quality issues, is difficult. Even if a camera like the 12K can offload all of the photosites on the sensor at video rates (which the 5D Mk. II couldn’t), it still has to do a lot of work to generate a viewable image at a lower resolution. Just leaving things out leads to aliasing. The proper technique is to blur (experts will say “filter”) the image, then leave out a lot of the photosites, with the details depending on exactly how the demosaic works. Unfortunately, applying a good-quality blur to a big digital image is a lot of work, and it’s not quite clear how well very high-resolution cameras can do this with their onboard electronics.
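The difference between “just leaving things out” and “blur, then leave things out” is easy to see on a single row of pixels. The sketch below is a toy illustration, not a description of what any particular camera does: the function names are made up for the example, and the blur is a simple box average, which is about the crudest low-pass filter there is. Real scalers generally use better, and more expensive, filter kernels.

```python
def decimate(row, factor, offset=0):
    """Naive downscale: keep every `factor`-th sample, discard the rest."""
    return row[offset::factor]


def box_filter_decimate(row, factor):
    """Average each group of `factor` samples into one output value.

    A box average is a crude low-pass filter, used here only for clarity.
    """
    return [
        sum(row[i:i + factor]) / factor
        for i in range(0, len(row) - factor + 1, factor)
    ]


# Detail too fine for the smaller image: alternating white/black samples.
row = [1.0 if i % 2 == 0 else 0.0 for i in range(16)]

naive = decimate(row, 2)                    # lands only on white samples
naive_shifted = decimate(row, 2, offset=1)  # lands only on black samples
filtered = box_filter_decimate(row, 2)      # averages each white/black pair

print("naive:        ", naive)
print("naive shifted:", naive_shifted)
print("filtered:     ", filtered)
```

The naive version reports solid white or solid black depending on nothing more than where the sampling happens to start, which is exactly the sort of false detail that shows up as aliasing. The filtered version reports an even mid-gray, which is the honest answer when the detail is too fine to survive at the lower resolution. The cost is that every input sample has to be read and summed, rather than most of them being ignored.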

Filters have no render time, no matter the resolution

Doing the same thing with an optical filter is a lot easier because physics does the heavy lifting. The optical low pass filter (OLPF) that most cameras have is, for all practical purposes, a minutely controlled blurring filter. So, one way to consider this problem is that when we want a lower-resolution image from a given sensor, our real issue is that the OLPF isn’t strong enough to prevent aliasing; companies have offered more powerful OLPF replacements for DSLRs for exactly this reason. Some camera manufacturers have made low pass filters interchangeable. That’s a task to be done carefully on the workbench, under relaxed circumstances, not something you’d do on a Magliner as the rain spatters down, but it’s not impossible to imagine interchangeable OLPFs taking at least some of the grunt work out of better low-resolution images on some cameras.

Of course, the camera we’ve been rather unfairly picking on as an example here doesn’t actually have an OLPF, possibly because 12K is such a high resolution that diffraction and other lens limits will come into play before much aliasing does, at least at full resolution. In any case, our purpose here isn’t to criticize Blackmagic in particular; using the highest-resolution cinema camera that’s commonly available was bound to back us into a few corner cases. Perhaps now, though, we can approach camera specs with a greater comprehension of what they might imply, and a better understanding of why it isn’t necessarily that easy just to make pictures smaller.