Let’s talk about some fundamentals, because, well, sometimes people don’t so much grab the wrong end of the stick as superglue the stick to their hands and march around proudly displaying it as if they’re being followed by a military band.

The idea that higher-resolution sensors have poorer noise, sensitivity and dynamic range performance is embedded in the brains of film and TV camera specialists like a carelessly-thrown tomahawk. Like all good logic bombs, it’s true in part, but there’s a deeper story about how real cameras actually work, and how much information about the scene we can wring out of the photons we capture.

Resolution basics

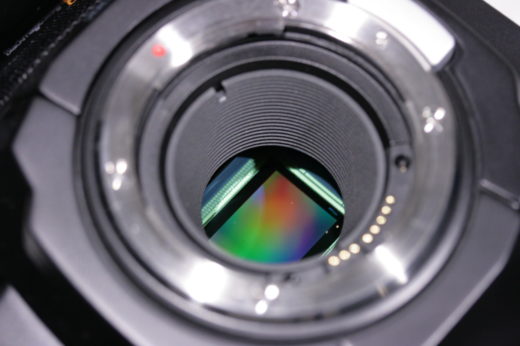

This’ll be a recap for many people, but for clarity, photosites on a sensor convert photons into electrons and collect them in a place that has a maximum capacity. As such, the point at which the sensor clips to white is fixed by its design. The black point is less well-defined; the number of electrons we read will always have a small random variation, which is what causes noise. When the signal becomes unacceptably small compared to the noise – that is, noise becomes unacceptably visible in the dark areas of the image – we decide that’s the black point. Opinion is involved.

If we can reduce noise, we can lower the black point, increasing sensitivity and, assuming the white point is fixed, dynamic range. As new designs have risen through twelve and thirteen stops of dynamic range, sensitivity has doubled and doubled again, which is not a coincidence. The white point doesn’t have to stay where it is, though, which gives us another way to increase dynamic range: we can raise it just by making the photosite itself physically larger, so that it holds more electrons before it clips. That’s a lot easier than somehow managing to engineer a lower-noise sensor.
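To put rough numbers on that, here is a minimal sketch that treats dynamic range as the number of doublings between the noise floor we've chosen as the black point and the capacity at which the photosite clips. The function name and the electron counts are hypothetical, picked only for illustration, and don't describe any real sensor.

```python
import math


def dynamic_range_stops(full_well_e: float, noise_floor_e: float) -> float:
    """Stops (doublings) between the chosen noise floor and the clip point."""
    return math.log2(full_well_e / noise_floor_e)


# Hypothetical figures, in electrons, for illustration only.
baseline = dynamic_range_stops(full_well_e=30_000, noise_floor_e=8.0)
quieter = dynamic_range_stops(full_well_e=30_000, noise_floor_e=4.0)  # halve the noise
bigger = dynamic_range_stops(full_well_e=60_000, noise_floor_e=8.0)   # double the capacity

print(f"baseline:          {baseline:.2f} stops")
print(f"half the noise:    {quieter:.2f} stops")
print(f"twice the capacity: {bigger:.2f} stops")
```

Either change buys one extra stop in this simplified model: halving the noise extends the range downward, while doubling the capacity extends it upward.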

And this is where we get the idea that sensors with bigger photosites are less noisy, and it’s true. Attentive readers will, however, have noticed a subtle contradiction there. In the opening paragraph, we talked about “higher-resolution sensors” having poorer noise performance, and we just talked about “sensors with bigger photosites” being less noisy. Often, those concepts are used interchangeably, but they shouldn’t be; we can have bigger photosites and higher resolution if we make the sensor itself bigger.

Sensor size selection is limited

More often, though, we’re comparing two sensors of similar size where one has more resolution, and thus smaller photosites, perhaps something like an Ursa Mini 12K and the more conventional 4.6K option. In that situation, it’s instinctive to assume that the 12K pictures might have poorer dynamic range, sensitivity and noise performance. And they do – but not nearly as much as we might instinctively assume, at least once we’re viewing the results on the same, non-12K monitor.

The 12K sensor has something like three times the linear photosite count of the 4.6K sensor, so each photosite should have roughly one ninth the area. Shouldn’t the noise performance be terrible? If we take the central 1920 by 1080 HD area from the 4.6K sensor and the same 1920 by 1080 area of the 12K sensor, sure, the 12K camera’s output will look noisier, all else being equal. Each crop has the same number of photosites, but the 12K camera’s are smaller, so they won’t perform as well.

The thing is, that’s not what we’d instinctively do, not least because the HD area of a 12K sensor is so tiny as to demand really, really short lenses to achieve a wide angle. We’d ideally take the whole 12K sensor output and then scale it down later (that’s oversampling). The photosites are still smaller on the higher-resolution camera, but several of them now contribute to each output pixel, so we’re averaging out a huge amount of the resulting noise penalty.
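As a rough sketch of why oversampling claws back so much, the snippet below compares one large photosite, one small photosite viewed on its own (the crop case), and nine small photosites combined into one output pixel. It assumes an idealised model that the article doesn't specify: shot noise that follows Poisson statistics, an identical read noise for every photosite regardless of size, no gaps between photosites, and perfect summing. The `snr` helper and all the numbers are hypothetical.

```python
import math


def snr(signal_e: float, read_noise_e: float, photosites: int = 1) -> float:
    """Signal-to-noise ratio of the summed output of `photosites` photosites.

    signal_e is the total number of electrons collected across all of them.
    Shot noise variance equals the signal; each photosite adds its own read
    noise, and independent noise sources add in quadrature.
    """
    return signal_e / math.sqrt(signal_e + photosites * read_noise_e ** 2)


READ_NOISE_E = 3.0  # assumed read noise per photosite, in electrons
RATIO = 9           # small photosites per large one (three times linear)

for label, electrons in (("highlight", 20_000.0), ("deep shadow", 50.0)):
    large = snr(electrons, READ_NOISE_E)
    crop = snr(electrons / RATIO, READ_NOISE_E)
    combined = snr(electrons, READ_NOISE_E, photosites=RATIO)
    print(f"{label}: large {large:.1f}, one small {crop:.1f}, "
          f"nine small combined {combined:.1f}")
```

Under these assumptions the combined figure sits very close to the large photosite in the highlights, where shot noise dominates, and falls somewhat behind in deep shadow, where nine lots of read noise start to count. With the read noise set to zero the two match exactly.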

Practical considerations

So the higher-resolution sensor, on paper, doesn’t necessarily suffer a noise penalty, assuming we’re interested in a result of a fixed resolution, which we usually are. Of course, in reality we know that such sensors often do suffer a noise penalty – the 12K is about a stop down on its lower-resolution brethren – and that’s likely to be partially down to the gaps between the photosites. More photosites means more gaps, meaning more photons not recorded and a poorer signal-to-noise ratio. We call that a lower fill factor, and that’s not something we can average out.
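For a sense of scale, this small sketch converts a difference in fill factor into stops of lost light, and into the matching drop in signal-to-noise ratio when shot noise dominates. The fill factors are hypothetical and chosen only so that the arithmetic comes out to a round stop; they are not measurements of either camera, and the function names are illustrative.

```python
import math


def light_loss_stops(fill_reference: float, fill_compared: float) -> float:
    """Stops of light lost by the compared sensor relative to the reference."""
    return math.log2(fill_reference / fill_compared)


def shot_limited_snr_ratio(fill_reference: float, fill_compared: float) -> float:
    """Compared SNR divided by reference SNR, assuming shot noise dominates.

    Shot-noise-limited SNR scales with the square root of the light collected.
    """
    return math.sqrt(fill_compared / fill_reference)


# Hypothetical fill factors: the fraction of sensor area that records light.
coarse_fill = 0.90
fine_fill = 0.45

print(f"light lost: {light_loss_stops(coarse_fill, fine_fill):.2f} stops")
print(f"SNR ratio:  {shot_limited_snr_ratio(coarse_fill, fine_fill):.2f}")
```

Unlike the penalty from smaller photosites, this loss applies to the total light collected, so combining photosites afterwards does nothing to recover it.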

There are lots of reasons why sensors as different as those 4.6K and 12K designs differ in performance, and we consider them only as examples. They have different colour filter array layouts and the 12K probably embodies some fundamental advances over the older 4.6K, all of which upset the comparison. Still, while the fundamentals behind all this are fairly well understood, as cameras begin to routinely offer massively more resolution than anyone has a real need to distribute, a better understanding of how all this stuff interacts is going to matter more and more.