After years of wondering what exactly the user matrix does, I think I’ve figured it out. I’m not sure I can explain it, but I’m going to try. Put on your propeller hat and rub your brain with soothing salves, ’cause it’s gonna hurt…

I’ve known for a while that matrices add and subtract color channels from each other, but until now I couldn’t explain the actual effect of changing the numbers in the user matrix. Then I stumbled across this video, courtesy of XDCAM-User.com:

The video spells out how the matrix numbers affect the image in a way I’d never heard before. It was hugely informative, and combined with what I’ve intuited about the matrix I think I can explain something of how it works. (This is my first shot at this, so please hang with me.)

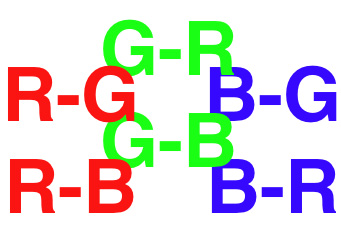

Let’s use matrix setting R-G as an example, where R is the red channel and G is the green channel.

It’s important to realize that the color channels, or signals, don’t actually carry color information. They carry luminance information. Silicon sensors can’t sense color; they can only sense brightness, and it’s up to the camera’s processor to determine what color goes where. When a photosite is struck by light it reports this as an electrical signal, which is digitized, read by the processor, and matched to a color. If the processor knows that a photosite is covered by a red filter, it assigns that brightness value to the red channel. The result is basically a black-and-white signal that will be converted to red and mixed with the other colors later on.
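To make the "brightness only" point concrete, here is a minimal Python sketch of how a single-sensor camera might sort raw photosite readings into channels. It assumes an RGGB Bayer layout and made-up readings; real cameras use various layouts and then interpolate (demosaic) the missing values, which this sketch skips entirely.

```python
# Hypothetical 4x4 raw readings: each number is only the brightness one photosite saw.
RAW = [
    [0.80, 0.30, 0.78, 0.32],
    [0.29, 0.10, 0.31, 0.12],
    [0.82, 0.28, 0.79, 0.30],
    [0.30, 0.11, 0.33, 0.09],
]


def filter_color(row, col):
    """Filter over a photosite, assuming an RGGB Bayer pattern."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def split_channels(raw):
    """Assign each brightness value to the channel named by its filter.

    The values carry no color themselves; only the filter position tells
    the processor which channel a value belongs to.
    """
    channels = {"R": [], "G": [], "B": []}
    for r, row in enumerate(raw):
        for c, value in enumerate(row):
            channels[filter_color(r, c)].append(value)
    return channels


if __name__ == "__main__":
    for name, values in split_channels(RAW).items():
        print(name, values)
```

Each list is just a set of gray levels; nothing in the numbers says "red" until the processor decides which pipe they go into.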

The processor will convert any signal that’s in the red channel into the color red. That doesn’t mean that everything in that signal really IS red: if the red dyes on the photosites pass a little green and a little blue as well, the processor is going to see those tiny bits of extra luminance and make them slightly red too.

We can also add other signals into the red channel. Adding some of the green signal into the red channel tells the processor that green objects contain some red as well, and it will add red to those parts of the image. All the processor knows is that anything in the red channel is supposed to be red, so adding some of the green signal into the red channel “fools” it.

Think of a channel as a “pipe.” The signal is what flows through that pipe, and the camera’s processor turns anything in a color’s pipe into that color in the image. If you mix other signals into that pipe, the processor renders everything in it as that one color, regardless of where the extra signals came from.
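The pipe analogy can be sketched in a few lines of Python. The `render` function below is a hypothetical stand-in for the processor: it only reads what is in each pipe. A yellowish object that really reflects some red, and a green object with half of the green signal mixed into the red pipe, end up with identical pipe contents, so the processor has no way to tell them apart. All values and the mix amount are made up for illustration.

```python
import math


def fill_pipes(red_signal, green_signal, blue_signal, green_into_red=0.0):
    """Return what flows through the (R, G, B) pipes.

    green_into_red is a hypothetical amount of the green signal mixed into
    the red pipe; nothing downstream records that it was added.
    """
    red_pipe = red_signal + green_into_red * green_signal
    return (red_pipe, green_signal, blue_signal)


def render(pipes):
    """Stand-in for the processor: whatever is in a pipe becomes that pipe's color."""
    r, g, b = pipes
    return {"red": r, "green": g, "blue": b}


yellowish = fill_pipes(0.5, 0.8, 0.1)                       # really contains red
green_mixed = fill_pipes(0.1, 0.8, 0.1, green_into_red=0.5)  # green object, "fooled"

same = all(math.isclose(a, b) for a, b in zip(yellowish, green_mixed))
print(render(yellowish))
print(render(green_mixed))
print("indistinguishable:", same)
```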

That’s where the matrix comes in.

This is hugely important: just because a color channel is labeled with a color, that doesn’t mean it is composed of only that color. The dyes on a red photosite (in a single-sensor camera), or the dichroic filters in a prism camera, aren’t perfectly pure, which is to say they don’t pass only red. They pass a little green and a little blue as well. Some of this is necessary, as secondary colors (in the case of red, colors like purple and yellow) are only created where two channels see brightness in the same part of the frame. A big part of color science, however, seems to be figuring out how to keep the color channels separated enough that pure colors can be created. If the channels overlap too much, the camera will never see a pure red, because it’s always sensing some green and blue as well and mixing them all together.
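Here is a small Python sketch of that overlap, assuming a hypothetical 3×3 crosstalk table in which each channel picks up a few percent of the other two colors. The percentages are invented, and real filter responses are curves across the spectrum rather than three numbers. Even so, it shows both sides of the trade-off: pure red light still registers in the green and blue channels, and yellow light (red plus green) registers strongly in both red and green, which is how that secondary color gets resolved.

```python
# Hypothetical crosstalk: each row is a channel; the columns say how much red,
# green and blue light that channel's filter passes. Off-diagonal terms are leaks.
CROSSTALK = [
    [1.00, 0.10, 0.05],  # red channel
    [0.08, 1.00, 0.10],  # green channel
    [0.03, 0.12, 1.00],  # blue channel
]


def sense(light_rgb):
    """Brightness each channel records for light with the given R, G, B content."""
    return tuple(
        sum(weight * amount for weight, amount in zip(row, light_rgb))
        for row in CROSSTALK
    )


pure_red = sense((1.0, 0.0, 0.0))
yellow = sense((1.0, 1.0, 0.0))
print("pure red light ->", [round(v, 3) for v in pure_red])  # [1.0, 0.08, 0.03]
print("yellow light   ->", [round(v, 3) for v in yellow])    # [1.1, 1.08, 0.15]
```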

Normally the color channels have a little bit of overlap to help them resolve secondary colors.

The way this is done is by subtracting color signals from color channels. We subtract signals, not colors themselves: this all happens before the channels are converted into color, so they are essentially black-and-white images. A red object in the scene will reflect red light that passes through the red filter, registering as a bright object in the red channel, while green and blue objects will appear much darker and show up as blacks and dark grays. The same is true for the other color channels: green and blue objects appear bright in their respective channels, while appearing dark in the others.

Here’s what subtracting a color signal from a color channel means. Take a bright area in the green signal and subtract it from the red channel. If the red channel saw a little green in that spot because of a slight green “leak,” the red signal becomes darker in that spot, and the red channel becomes more pure.
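A minimal Python sketch of that subtraction, assuming a hypothetical 10% green leak in the red filter. Subtracting 0.1 units of the green signal from the red channel cancels the leak on a green object and leaves a red object almost untouched. This is a simplification: the sketch treats the green signal as perfectly clean, while in a real camera the green channel has leaks of its own, so the cancellation is only approximate.

```python
GREEN_LEAK = 0.10  # hypothetical fraction of green light the red filter passes


def red_channel(scene_red, scene_green):
    """What the red channel records, including the green leak."""
    return scene_red + GREEN_LEAK * scene_green


def purify_red(red_value, green_signal, amount):
    """Subtract `amount` units of the green signal from the red channel, floored at 0."""
    return max(0.0, red_value - amount * green_signal)


objects = {"green object": (0.0, 0.8), "red object": (0.8, 0.05)}
for name, (r, g) in objects.items():
    before = red_channel(r, g)
    after = purify_red(before, g, amount=GREEN_LEAK)
    print(f"{name}: red channel {before:.3f} -> {after:.3f}")
```

The green object goes dark in the red channel once the leak is removed, which is exactly the "darker in that spot" effect described above.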

Earlier I said we’d be looking at the single matrix setting R-G as an example. That dash is literally a minus sign. Here’s where you have to think like an engineer: if the goal of color science is to selectively subtract colors from each other, then doing that is actually a positive goal. Positive values mean we have successfully subtracted one color from another.

If we make R-G = +99, then the camera’s processor is subtracting 99 “units” of the green signal from the red channel, making red less sensitive to things that have green in them (like the color orange) and making both red and green more pure. The higher the value, the more it does this. (These numbers are arbitrary and don’t relate to anything in the real world, as best I know. What numbers have what degree of effect varies from camera model to camera model and manufacturer to manufacturer.)

Subtracting green’s influence from red makes both colors more pure, because there’s less overlap between them. Secondary colors, though, won’t look so good anymore as the red channel will see less of them.

Going the other way, such as R-G = -99, means we are now adding 99 “units” of the green signal to the red channel, so the processor will add red to objects that are green.

Adding a lot of the green signal to the red channel makes red less saturated while adding red to greens, making them yellow or orange.
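Here is a rough Python sketch of both directions. It assumes the R-G setting is applied as `R' = R + k(R - G)`, with G and B left alone, where `k` is a hypothetical coefficient standing in for the menu value (the mapping from a number like +99 to `k` is camera-specific, so the sketch uses `k` directly). Positive `k` subtracts `k` units of the green signal from the red channel; negative `k` mixes green in. The matching `+k·R` half of the formula is an assumption about how such matrices are commonly normalized so that grays don’t move.

```python
def apply_r_minus_g(rgb, k):
    """Hypothetical R-G matrix term: R' = R + k * (R - G); G and B pass through.

    k > 0 subtracts k units of the green signal from the red channel
    (and adds k units of red back, so neutral grays are unchanged).
    k < 0 mixes green into the red channel instead.
    """
    r, g, b = rgb
    return (r + k * (r - g), g, b)


RED_PATCH = (0.6, 0.2, 0.2)    # made-up reddish patch
GREEN_PATCH = (0.2, 0.6, 0.2)  # made-up greenish patch

for k in (0.25, -0.25):
    red_out = tuple(round(v, 3) for v in apply_r_minus_g(RED_PATCH, k))
    green_out = tuple(round(v, 3) for v in apply_r_minus_g(GREEN_PATCH, k))
    print(f"k={k:+.2f}  red patch -> {red_out}  green patch -> {green_out}")
```

With `k = +0.25` the red patch’s red channel rises to 0.7 and the green patch loses red (0.1). With `k = -0.25` the red patch drops to 0.5, closer to its green and blue values and so less saturated, while the green patch picks up red (0.3) and leans toward yellow.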

Remember how the video said that the color named by the first letter can change only in saturation, while the color named by the second letter can change in both hue and saturation? I’ve just described why:

A positive R-G value means we’re removing green’s influence from red, making red more saturated.

It also makes green more saturated because red won’t overlap with green anymore.

A negative R-G value makes red objects less red because the green signal starts to overwhelm the red signal, and since the green signal carries less information about red objects, the processor makes red things less saturated.

It also makes green objects less green because the green signal in the red channel convinces the processor to add red to green objects, shifting them toward yellow.

The first letter is always the channel that’s affected. The second letter is the color signal that’s being added or subtracted, and its color is the one that changes in both hue and saturation.
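To check the first-letter/second-letter rule numerically, this Python sketch runs two made-up patches through a hypothetical R-G model, `R' = R + k(R - G)` with G and B unchanged, and reports hue and saturation using the standard library’s `colorsys.rgb_to_hsv`. HSV is only a crude stand-in for what a vectorscope shows, but the pattern matches the description: the red patch’s hue stays at 0° while its saturation rises or falls with `k`, and the green patch’s hue swings toward yellow (60°) for negative `k` and away from it for positive `k`.

```python
import colorsys


def apply_r_minus_g(rgb, k):
    """Hypothetical R-G matrix term: R' = R + k * (R - G); G and B unchanged."""
    r, g, b = rgb
    return (r + k * (r - g), g, b)


def hue_sat(rgb):
    """Hue in degrees and HSV saturation for an (R, G, B) triple in 0..1."""
    h, s, _ = colorsys.rgb_to_hsv(*rgb)
    return round(h * 360, 1), round(s, 3)


PATCHES = {"red": (0.6, 0.2, 0.2), "green": (0.2, 0.6, 0.2)}

for k in (-0.25, 0.0, 0.25):
    row = {name: hue_sat(apply_r_minus_g(rgb, k)) for name, rgb in PATCHES.items()}
    print(f"k={k:+.2f}", row)
```

In this toy model the green patch’s hue moves from 120° to about 105° at `k = -0.25` and to about 132° at `k = +0.25`, while the red patch never leaves 0°.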

The trick in all this is that the matrix doesn’t affect white balance at all. You can frame up a gray scale chart, put all sorts of crazy numbers into the matrix, and see no change until you zoom out and get some colors in the frame. Matrix adjustments should be made while looking at an accurate color chart (I prefer a DSC Labs Chroma Du Monde) and a vectorscope, so you can see what’s going on.
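One way to see why a gray scale chart shows no change: assume each matrix setting adds `k × (first channel - second channel)` to the first channel, so R-G contributes `k(R - G)` to red, and likewise for six settings named the same way (R-G, R-B, G-R, G-B, B-R, B-G). That layout and the coefficients below are assumptions for this Python sketch, not any particular camera’s math. On a neutral patch every difference is zero, so no coefficients can move it, while a colored patch shifts.

```python
def apply_user_matrix(rgb, coeffs):
    """Hypothetical six-term user matrix.

    coeffs maps names like "R-G" to a coefficient k; each term adds
    k * (first channel - second channel) to the first channel, using the
    original (pre-matrix) values for every difference.
    """
    values = dict(zip("RGB", rgb))
    out = dict(values)
    for name, k in coeffs.items():
        target, source = name.split("-")
        out[target] += k * (values[target] - values[source])
    return tuple(out[c] for c in "RGB")


# Arbitrary, made-up settings.
ARBITRARY = {"R-G": 0.5, "R-B": -0.3, "G-R": 0.4, "G-B": -0.2, "B-R": 0.3, "B-G": -0.5}

gray = (0.45, 0.45, 0.45)
orange = (0.8, 0.5, 0.2)
print("gray   ->", apply_user_matrix(gray, ARBITRARY))  # (0.45, 0.45, 0.45)
print("orange ->", tuple(round(v, 3) for v in apply_user_matrix(orange, ARBITRARY)))
```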

Does your brain hurt? Me too. I’ll be experimenting with this on a Canon C300 at the DSC Labs booth at NAB. Stop by Monday, Tuesday or Thursday morning if you want to see if I’ve made more sense of this and can explain it better.

Show some love to XDCAM-User. It’s a great site. And thanks very much for such an informative video.

Any errors in this article are strictly mine. If you have a better way of explaining all this, please give it a shot in comments.

Disclosure: I have worked as a paid consultant to DSC Labs, whose charts I will be demoing at NAB.

Art Adams is a DP who will be experimenting with matrices and other things at the DSC Labs booth, C10515, near Sony at NAB. His website is at www.artadamsdp.com.