In the middle of the last century, observational astronomy had hit a wall. Larger telescopes were needed to see farther out into the universe, but massive glass mirrors like the one used in the 200-inch Hale telescope had become technically and financially impractical to build and maintain. The solution to this problem was the multiple mirror telescope, or MMT. The primary reflecting mirror was split into an array of smaller mirrors, and a computer system was used to control the shape and position of each mirror element. The 400-inch Keck telescopes in Hawaii, each built around a primary mirror of 36 elements, are testaments to the success of this approach.

Today, digital photography is quickly approaching a similar wall. Rapid advances in digital image sensor technology and the changes in consumer habits for digital photography in the last several years have begun to outstrip the capabilities of conventional optics.

Consider these facts:

Camera optics are lagging behind electronics

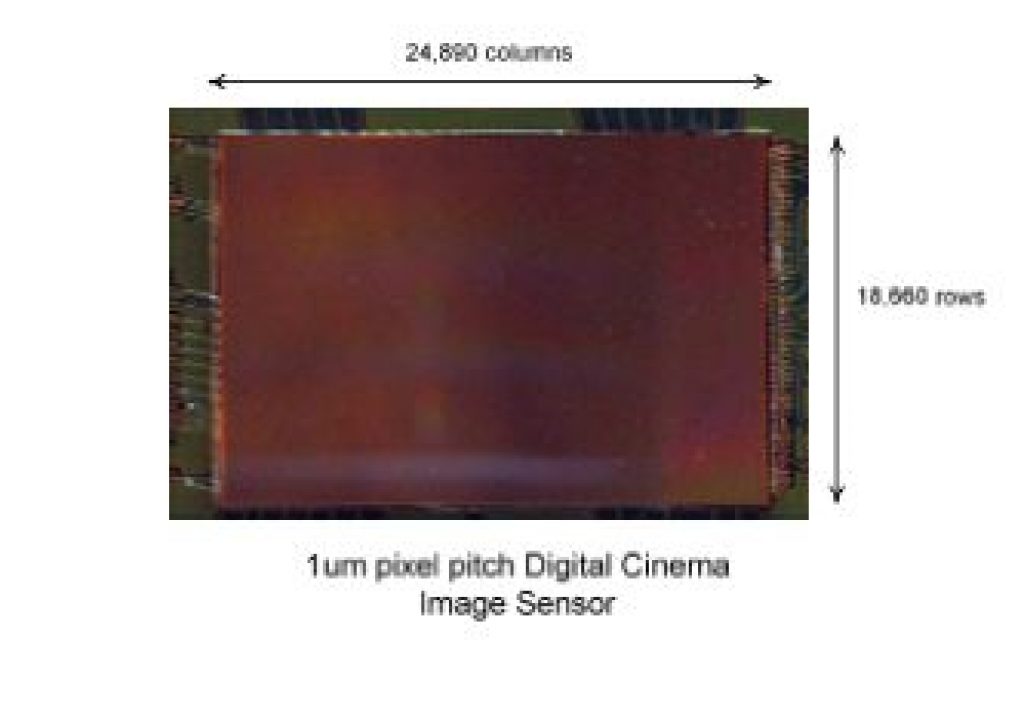

Image sensor manufacturers are now able to produce pixel arrays with an inter-pixel spacing (or pixel pitch) of 1 µm. If someone were to make a true Super 35 (4-perf) image sensor using this pixel pitch, it would be a pixel array of 24,890 (H) × 18,660 (V), or roughly 464 Mpixels, close to half a gigapixel! The spatial resolving power of such a sensor, expressed in 50% MTF values, could far exceed 200 lp/mm. By comparison, most ARRI Prime lenses used in cinematography quote MTF values at 10 lp/mm.
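The arithmetic behind these figures can be checked with a short sketch. It assumes a Super 35 (4-perf) active area of 24.89 mm by 18.66 mm, which is what the pixel counts above imply, and it uses the sampling (Nyquist) limit of one line pair per two pixels as an upper bound on sensor resolution. The function names are illustrative only.

```python
# Sketch: pixel count and sampling limit for a hypothetical Super 35 sensor.
# Assumed active area: 24.89 mm x 18.66 mm; assumed pixel pitch: 1 um.

def pixel_count(width_mm, height_mm, pitch_um):
    """Return (columns, rows, total pixels) for an active area and pixel pitch."""
    cols = round(width_mm * 1000 / pitch_um)
    rows = round(height_mm * 1000 / pitch_um)
    return cols, rows, cols * rows

def nyquist_lp_per_mm(pitch_um):
    """Sampling limit in line pairs per mm: one line pair spans two pixels."""
    return 1000 / (2 * pitch_um)

cols, rows, total = pixel_count(24.89, 18.66, 1.0)
print(f"{cols} x {rows} = {total / 1e6:.0f} Mpixels")
print(f"Nyquist limit: {nyquist_lp_per_mm(1.0):.0f} lp/mm")
```

With these assumptions the array comes to about 464 million pixels and the sampling limit is 500 lp/mm. A real sensor's 50% MTF point falls below the sampling limit, so this is an upper bound rather than a prediction.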

Big Optics are expensive

Purchasing high-end professional camera lenses requires a major financial outlay. Here are some examples from the B&H catalog:

Fujinon 14.5-45mm T2.0 Premier PL Zoom Lens – $99,800

Canon CINE-Servo 50-1000mm T5.0-8.9 (PL mount) – $70,200

By comparison, an ARRI ALEXA camera body, widely considered the current gold standard in digital cinematography, retails for around $45,000, or less than half the cost of the Fujinon lens.

Big lenses are heavy!

Both the Fujinon and the Canon lenses listed above weigh in at over 14 pounds. These are not the kind of lenses you throw in a backpack and tote around all day.

The Multiple Mirror Telescope (MMT) Solution

Maybe it’s time to re-think how we capture photographic images. Instead of trying to make bigger lenses with higher spatial resolving power, why not make groups of smaller lenses and sensors work together to form high resolution aggregate images? This approach has several compelling advantages:

Image sensor manufacturers prefer to produce smaller-sized image sensors

The product category driving most image sensor manufacturers today is the smartphone/mobile device. The production volumes for these far outstrip any other application. These devices tend to use image sensors that have an optical form factor of 1/2 inch or less. Also, the production yields for these smaller sensors are much higher than for large area sensors. Why is that? Simple geometry. Defects are scattered more or less randomly across a wafer, so the odds of getting an image sensor with no defective pixels are much higher for a sensor with a small area array than for one with a larger area array. So a silicon wafer fabricated with 2000 small sensors on it may have 1600 perfect sensors, while the same size wafer with 20 large sensors may only have 10 perfect sensors.
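One way to make the geometry argument concrete is the first-order Poisson yield model, in which the chance of a defect-free die falls off exponentially with die area. This model is a common textbook simplification, not something the figures above were derived from, and the defect density and die areas below are hypothetical values chosen only to show the trend.

```python
import math

def poisson_yield(defects_per_cm2, die_area_cm2):
    """Expected fraction of dies with zero defects under a Poisson model."""
    return math.exp(-defects_per_cm2 * die_area_cm2)

# Hypothetical inputs, for illustration only.
defect_density = 0.05   # defects per cm^2 (assumed)
small_die_area = 0.25   # cm^2, on the order of a small mobile sensor (assumed)
large_die_area = 5.0    # cm^2, on the order of a large-format sensor (assumed)

for name, area in (("small", small_die_area), ("large", large_die_area)):
    fraction = poisson_yield(defect_density, area)
    print(f"{name} die: {fraction:.1%} expected defect-free")
```

Whatever values are plugged in, the larger die always has the lower expected yield at the same defect density, which is the point of the wafer example above.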

Image sensors are getting smarter.

In the early days of digital photography (1990 – 2000), image sensors (CCDs and CMOS) were no more than arrays of photodiodes connected to electronics designed solely to transfer the photodiode signal to external image processing electronics. Now it is not uncommon for image sensors to incorporate complete image processing pipelines, so that the output of the image sensor is not just raw pixels but de-Bayered, color-corrected formatted images. Given the additional capabilities made possible by Moore’s Law in electronics, it is not unreasonable to consider groups of image sensors that can communicate with each other during the image capture process to optimize parameters like spatial resolution, color fidelity or dynamic range.

Small lenses are getting better

When cameras began to be integrated with smartphones and mobile devices, the lenses for these tended to be simple, low-resolution designs with low-cost plastic optical components. As the image sensors for mobile devices have improved, and competition among lens manufacturers has increased, the image quality from these small lenses has increased dramatically. A number of Chinese lens manufacturers like Evetar (www.leadingoptics.com) and Genius (www.gseo.com) are now producing S-mount lenses with characteristics rivaling those of DSLR lenses. In fact, the GoPro Hero cameras (www.gopro.com), arguably the most popular action cameras ever built and a workhorse of cinema and broadcasting, use these types of lenses.

Some Notable Examples

There are already some efforts underway to use multiple lens / multiple sensor designs to achieve the next level in digital imaging performance.

LIGHT

A Silicon Valley startup, “Light” (www.light.co):

“… aims to put a bunch of small lenses, each paired with its own image sensor, into smartphones and other gadgets. They’ll fire simultaneously when you take a photo, and software will automatically combine the images. This way, Light believes, it can fit the quality and zoom of a bulky, expensive DSLR camera into much smaller, cheaper packages – even phones.” (MIT Technology Review, 4/17/2015)

AWARE

On a more ambitious scale, a DARPA-funded project at Duke University, dubbed AWARE (www.disp.duke.edu/projects/AWARE), “focuses on design and manufacturing of microcameras as a platform for scalable supercameras.”

Their first prototype camera produced a staggering 1-gigapixel image based on 98 micro-optic elements and image sensors covering a 120° by 40° field of view. The optical design of this system is something of a hybrid, as it uses a single monocentric objective lens in conjunction with an array of identical secondary lenses along the focal surface of the objective. (See diagram below.)

Challenges and Prospects

If the success of the segmented-optic approach in astronomy is any indication, the future for this technology looks bright. However, the technical challenges facing this approach are not trivial. Some of the issues that need to be addressed before segmented-optics cameras have mainstream success are listed below:

Good image quality from multiple lenses depends on how well individual lenses are matched to each other

This was a lesson learned from 3D stereo camera developers. The process of producing optical elements (glass or plastic), coating them with the appropriate filters and assembling them into useful imaging lenses can lead to significant variations in lens performance characteristics like distortion, MTF and color rendition. Developers of multiple lens camera systems will either need to have tight control over the lenses used in these systems or develop back end processing to correct for differences among lenses.

Segmented lens/sensor cameras require tight opto-mechanical tolerances.

Possibly the most critical design task for these types of cameras is maintaining good and consistent alignment, not just between one lens element and its associated image sensor, but also among groups of lens/image sensor elements. With image sensor pixel spacing approaching 1 micron, a mechanical tolerance of ±0.1 mm means an uncertainty of 100 or more pixels between images. While this uncertainty can be compensated to some extent by back-end processing, the best approach is to reduce the uncertainty on the front end with good opto-mechanical design.
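The tolerance figure is a simple unit conversion, sketched below. The helper names are made up for illustration; the only inputs are the mechanical tolerance and the pixel pitch quoted above.

```python
def misalignment_in_pixels(tolerance_mm, pitch_um):
    """Convert a lateral mechanical tolerance into an offset measured in pixels."""
    return tolerance_mm * 1000 / pitch_um

def tolerance_for_pixels(max_pixels, pitch_um):
    """Mechanical tolerance (mm) needed to keep the offset within max_pixels."""
    return max_pixels * pitch_um / 1000

# 0.1 mm of play at a 1 um pitch is about 100 pixels of uncertainty.
print(misalignment_in_pixels(0.1, 1.0))

# Holding the offset to a single pixel at 1 um pitch would need about 0.001 mm.
print(tolerance_for_pixels(1, 1.0))
```

Read the other way around, the second function shows why purely mechanical alignment to the pixel level is hard at this pitch, and why some back-end registration is likely to remain necessary.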

These cameras require massive image processing

The ability to stitch and blend multiple images together to form a seamless whole has been around since the days of space probes in the 1970s. However, it was not uncommon back then to take several hours (or days) to create a final image. To make a successful commercial product, all of these operations must now happen within seconds (or possibly microseconds). It is no accident that companies like Light are looking for software and algorithm developers rather than optical engineers. It has been said that we are entering the age of “Computational Photography”, where the image processing applied to a raw captured image is now more important than the raw image itself.
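To give a feel for the blending step, here is a minimal sketch of linear feathering across the overlap between two neighboring image rows. It is one common textbook technique, not a description of what Light or any other company actually does, and it assumes the two rows are already registered so that the last samples of one coincide with the first samples of the other. The function name and sample values are hypothetical.

```python
def feather_blend(left, right, overlap):
    """Join two rows of pixel values whose last/first `overlap` samples coincide.

    Inside the overlap, the weight of the right-hand row ramps up linearly,
    so the seam fades from one image into the other instead of jumping.
    """
    if not 0 < overlap <= min(len(left), len(right)):
        raise ValueError("overlap must be positive and fit inside both rows")
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)          # weight given to the right-hand row
        a = left[len(left) - overlap + i]    # sample from the left-hand row
        b = right[i]                         # matching sample from the right-hand row
        blended.append((1 - w) * a + w * b)
    return left[:-overlap] + blended + right[overlap:]

# Two rows with slightly different brightness and a two-sample overlap.
row = feather_blend([10, 10, 10, 10], [20, 20, 20, 20], overlap=2)
print(row)
```

A real pipeline would do this in two dimensions for every pair of neighboring sensors, after correcting for distortion and color differences, which is where the processing load comes from.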

If these obstacles can be overcome, and the MMT analogy gives us reason to believe that they will, we can look forward to a quantum leap in photographic technology that may signal the end of “Big Optics” as a tool for image capture.

About the Author: