I was talking with a client recently about some of the challenges they were experiencing with a new workflow they had implemented. It was a bit of a departure from their existing workflows, in that it relied much more heavily on external data providers. Up until this point, their workflows had been driven primarily by internally created data, for which they had defined strict guidelines that they relied on heavily throughout their workflow systems. This client had expanded their operations into a new area of business, which required them to rely to a much greater extent on outside data suppliers for the quality and consistency of the data entering their systems. Needless to say, these new data streams were experiencing much higher failure rates and, as a result, were stressing their workflow systems in ways they had not experienced before. These failures were causing a “log jam” of media files that would quickly fill up the workflow storage reserves and clog parts of their IT network due to the re-routing and reprocessing of these files. A cascade of completely new workflow challenges had caught the organization off guard and was causing a significant amount of stress on their operations.

While talking through the problems the client was experiencing, the concept of “trust” surfaced in our conversation. Since my background in workflow development has always involved at least some amount of external data integration, “trust” was a concept that I had deeply internalized and always included in my workflow designs. It was this conversation that made me realize that building “trust” into my workflow designs was something that had slowly evolved over time from experience. This client’s existing workflows had made assumptions regarding “trust” that turned out to be no longer valid when applied to the new data they were receiving.

So what do I mean when I refer to “trust” within workflow design? Trust in this context is the level of confidence one has in the quality and consistency of the data used as an input to any component within a workflow system. Trust can be applied to your business partners’ data feeds, your employees’ work quality, a server, the network, software applications, hardware devices or sub-components within your workflow systems. Each link in your workflow chain can be assigned a trust value that you can use to drive your workflow designs and increase the robustness and reliability of your workflow automation. And as the saying goes, “trust should not be just given, it must be earned”.

This was the mistake that my client had made. Their level of confidence that these new data feeds would have the same quality and consistency as their internal data feeds was simply set too high. As a result, their workflow systems were stressed in ways they did not anticipate, and now they needed to re-evaluate how they did things.

So, how does one build the concept of trust into one’s system design? What I have learned to do is to develop profiles for the various components that make up my workflow systems. There are no hard and fast rules as to the level of granularity one must establish for these component profiles. It really just depends on your situation and the level of detail that you want to track, monitor or control. Each of these component profiles may also include data level profiling. The idea is that you assign a trust ranking to each data input component that can be used to programmatically make decisions on how to process the data. For example, if your data is coming from a workflow component that has a high ranking of trust and you receive an update to a previously delivered data record, you might programmatically design your workflow systems to automatically accept that updated data record. If, under the same scenario, the data is coming from a low trust ranking data component, the data update might be further scrutinized by running consistency checks against other data sources or routing it to a manual process for human review. Each component within your workflow is designed to check an input’s trust ranking before processing the data and in doing so, may receive instructions on how to process the data.
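The update scenario above can be sketched in a few lines of Python. This is only an illustration under assumptions of my own: the 0.0 to 1.0 trust scale, the threshold values, and the names `ComponentProfile`, `Action` and `route_update` are all hypothetical, and a real system would define its own profiles and granularity.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Possible ways to handle an update to a previously delivered record."""
    AUTO_ACCEPT = "auto_accept"
    CONSISTENCY_CHECK = "consistency_check"
    MANUAL_REVIEW = "manual_review"


@dataclass
class ComponentProfile:
    """Hypothetical profile for one data input component."""
    name: str
    trust: float  # assumed scale: 0.0 (no trust) to 1.0 (full trust)


def route_update(profile: ComponentProfile,
                 high: float = 0.8,
                 low: float = 0.4) -> Action:
    """Decide how to process an updated record based on its source's trust.

    The thresholds are illustrative defaults, not recommended values.
    """
    if profile.trust >= high:
        # High-trust source: accept the update automatically.
        return Action.AUTO_ACCEPT
    if profile.trust >= low:
        # Middling trust: cross-check against other data sources first.
        return Action.CONSISTENCY_CHECK
    # Low trust: send the update to a person for review.
    return Action.MANUAL_REVIEW


internal_feed = ComponentProfile("internal_feed", trust=0.95)
new_supplier = ComponentProfile("new_supplier", trust=0.2)
print(route_update(internal_feed).value)
print(route_update(new_supplier).value)
```

Keeping the decision in one small function means the thresholds can be tuned, or more actions added, without touching the components that call it.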

This trust ranking is not a bulletproof way to guarantee that your workflow systems will never allow low quality data to slip through. Unanticipated data quality issues will always surface. However, this approach, if designed properly, will enable one to expand the granularity of these data quality checks and decision-making responses over time. And remember, the data quality of your workflow systems is not static. Your data suppliers and workflow components may change over time, and their level of trust may go up or down. This trust ranking mechanism injects a level of intelligence into your individual workflow components. Before a workflow component starts to process the data it receives, it can be designed to quickly check who the supplier of the data is and which other sub-components processed the data before it, and from there be instructed on which data elements should be scrutinized during processing. At the same time, this trust ranking concept should not unnecessarily impede the data flow through your systems. One needs to balance the need for total data quality with the rate at which data must flow through the system. In most workflow systems, it is unacceptable for the output of any workflow component to fall too far behind the data rate of its input.
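Two of the ideas above, trust that moves up or down over time and a check of who has handled the data so far, might look something like the sketch below. The exponential moving average and the “weakest link” rule are my own illustrative choices rather than a prescribed method, and the function names, the weight and the component names are hypothetical.

```python
def update_trust(current: float, passed: bool, weight: float = 0.1) -> float:
    """Nudge a trust ranking after each delivery.

    Uses an exponential moving average (an assumed scheme): a clean
    delivery pulls trust toward 1.0, a failed one pulls it toward 0.0.
    """
    outcome = 1.0 if passed else 0.0
    return (1 - weight) * current + weight * outcome


def effective_trust(provenance: list[str],
                    trust_by_component: dict[str, float],
                    default: float = 0.0) -> float:
    """Trust in a record given everything that has touched it.

    'provenance' lists the supplier and each sub-component that processed
    the record. The record is treated as no more trustworthy than its
    weakest link; unknown components get the (assumed) default of 0.0.
    """
    if not provenance:
        return default
    return min(trust_by_component.get(name, default) for name in provenance)


# Hypothetical rankings for a supplier and two internal components.
rankings = {"supplier_x": 0.3, "transcoder": 0.9, "qc_station": 0.95}

# A record from a low-trust supplier stays low-trust downstream.
print(effective_trust(["supplier_x", "transcoder"], rankings))

# Trust is earned gradually: one clean delivery moves the ranking slightly.
rankings["supplier_x"] = update_trust(rankings["supplier_x"], passed=True)
print(round(rankings["supplier_x"], 2))
```

Both functions are cheap lookups and arithmetic, which matters given the point above about not letting the trust check slow the data flow.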

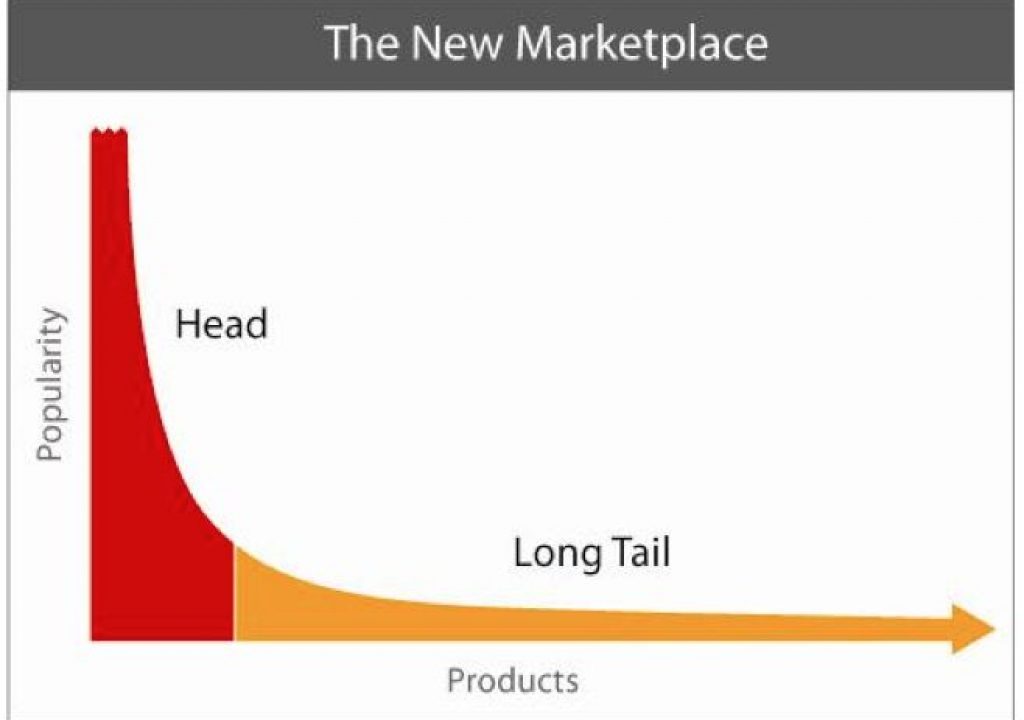

One situation where I found the greatest need for this trust ranking concept was when I was working with systems that mixed data associated with content from opposite ends of the “Long Tail”.

The concept of the “Long Tail” was made popular by Chris Anderson’s book “The Long Tail: Why the Future of Business Is Selling Less of More”. One side effect of the long tail that I noticed in my work was that the quality of the metadata degraded the further down the long tail one went. I don’t remember Chris Anderson discussing this “feature” of the long tail in his book. From my perspective, this was the “dark side” of the long tail that the book failed to mention.

In a typical marketplace, there are a number of parties involved in making content available. Typically there are creators, manufacturers, distributors and retailers. Even in a purely digital environment, these same roles tend to persist. The “dark side” of the long tail is that the further down the long tail one goes, the less incentive one has to spend time on metadata quality and consistency issues. Time is money, as the saying goes. The fewer copies of the content one expects to sell, the less likely one is to earn back the money invested in the content’s metadata. If the creators of the content do not supply high quality metadata with their media, the responsibility of doing so gets passed on to the manufacturer. If the manufacturer does not have the incentive, they will pass the responsibility on to the distributor. If the distributor lacks incentive, the responsibility continues on to the retailer. And if the retailer is not motivated to cleanse the metadata, it will simply get passed on to the consumer.

So, when you are mixing metadata associated with both front tail content and long tail content, the concept of trust plays a very big role in how you design your workflows. Professionally produced content tends to have much higher metadata quality because the suppliers of the content have a vested interest in making sure the content is properly prepared, so it can be easily received and processed by each party within the retail supply chain and passed on to the consumer for purchase. The opposite tends to be true for long tail content, for the simple reason that every minute spent addressing content metadata issues lowers the probability that one will make back the money spent in doing so.

But if you think about it, this same situation exists in almost all content environments, even in your company’s internal content systems (though perhaps not to the same extreme). There will always be high value content and low value content. Too much time and effort spent on the quality and consistency of low value content could result in a net loss to the organization. Your organization probably already internalizes this reality in the way it runs its business, by putting more effort into the quality and consistency of the data surrounding its high value content. And if you think about it a little further, shouldn’t your workflow systems also be able to internalize these same concepts?