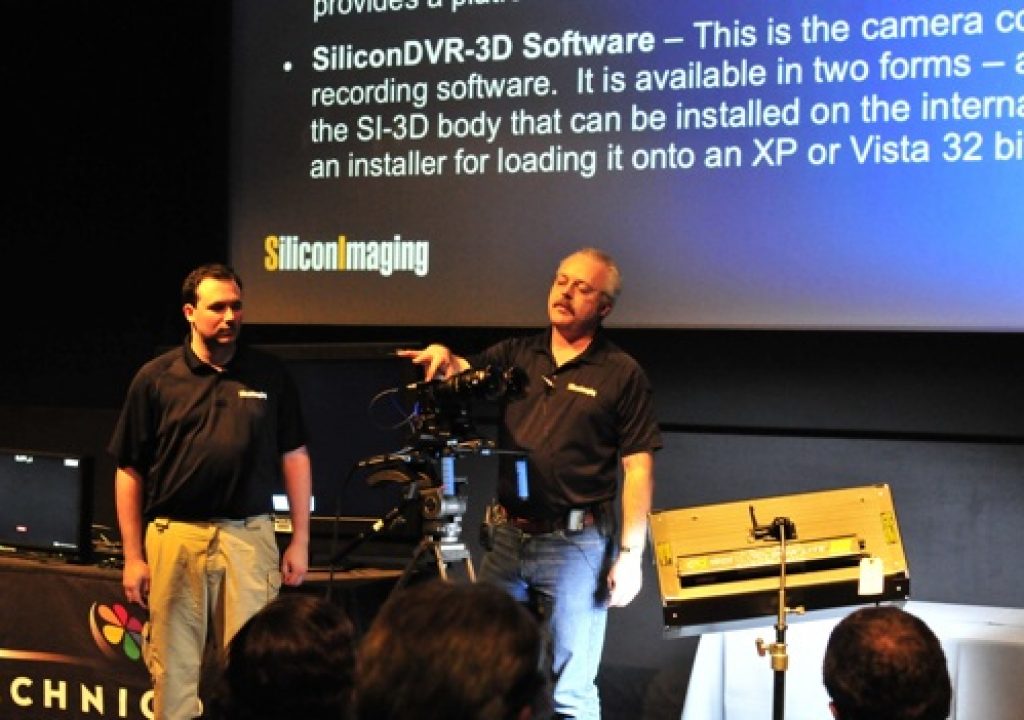

Silicon Imaging opened Band Pro’s 3D day with an impressive demonstration of their progress.

While Red has garnered most of the attention in the last couple of years, Silicon Imaging has been busy making impressive improvements – some of the goodies seen today:

-a comprehensive 3D capture solution, including recording 2 streams (left/right eyes) to one file on one capture system

-a tiny little box (1/3 of shoebox sized) that can capture 12 bit RAW files

-a comprehensive software UI for doing metadata based geometry corrections to fix things like offset, keystoning, etc. in the 3D rig

-further tools to embed nondestructive LUTs (in .look format) for color correction – this metadata stays all through post and works as editable settings in Iridas Speedgrade

-and more, read on after the jump.

Silicon Imaging

Takeaway – because their camera is really a sensor with a computer behind it, they can customize their UI VERY quickly and make it very powerful. The flip side is a lack of realtime quality outputs (since their mantra is based on deferred processing and maximum flexibility). You can record in up to 12 bit RAW, compressed or uncompressed. You can now record two cameras into one computer that writes one file with stereo views – nice. There’s also a tiny little computer, with touchscreen, battery powerable, that can record as well (I _think_ stereo as well, not sure – checking...). The UI allows for a variety of previews, and metadata based corrections – things like offsets, rotations, etc. for the 3D files – as nondestructive metadata based fixes. Color corrections can also be saved as Iridas compatible .look files. This metadata survives with the source material until conforming at the end of the process into Iridas Speedgrade DI, and those settings pop right in – not only has the correction been made, but you can STILL change it, nondestructively.

Here are my detailed notes from the session:

Useful pictures from the talk with their slides and demos here

==============================================

Intro and Silicon Imaging

Band Pro One World Preso

Michael Bravin opens up the event, wondering what would be the big thing of interest – and it quickly looked like 3D was the hot topic that everyone was excited about. This is the fourth year that Band Pro has had their One World events, and this is the third one I’ve been able to attend.

3D – the tech everyone is excited about

commoditization of the 3D tech is the big thing this year

people looking to get into it – a lot of misinformation and people that THINK they know what they are doing, or SAY they know what they are doing, and “only have part of the total information” (I’d count myself as part of that partially informed group)

24 hours before the US premiere of Avatar

“Avatar is going to be a mountain for people to focus on”

Cameron has put in about 10 years of dev work, 2 1/2 years of production, crew called it “Forevertar”

Sinclair Fleming from SI-2K, then Cineform, then Steve Crouch of Iridas US

Chris Burket from Element Technica (3D rigs)

then lunch, then Mark Obario from Technicolor, then a panel discussion

Sinclair Flemming from Silicon Imaging

=====================================

wanting to take the SI-2K system into 3D

2K Mini – PL/B4 mount adaptors w/PS Technik kit, can run Gig-E to a record application, which comes in 2 flavors – a 2D version and a 3D version – the 3D version is new and interesting

Licensing – there’s a license that lights up the 2D to 3D functionality

-camera philosophy – defers processing until later as much as possible.

Can record uncompressed or to Cineform, nothing is baked into the file, that is key – never regret an on set decision is the goal

-anything that is modern and competent (MacBooks are viable, or build your own) can be used as the recording host – commoditized – shouldn’t be locked into proprietary hardware unless it is crucial to solving a particular problem

-for SI-3D – 2 2k-Minis in two SI-2Ks/computers

two 2K-Mini in one SI-3D system

-can run both signals into a single higher horsepower machine to get a task-specific 3D interface, and a gateway into your post workflow designed for 3D

-can drive different kinds of displays (50/50, side/side, anaglyph and wiggle)

-virtual parallax adjustment (flip, x/y shift and rotation)

-new Cineform modes enabled, as well as a way to create a single file, which is a big deal for data wrangling and file management and workflow – is where things needed to be

-timecode is still there, convergence alignment screen guides

-added file recover, file export, focus, exposure tools built into the app

-SiliconDVR running on an XP 32 bit platform is their app to run this – THAT is your bootable UI

UI is touchscreen optimized – which is why the buttons are big

tic-tac-toe pattern – 10 hot spots to select different quick functions

10 1/2 to 11 stops of dynamic range, lots of latitude

since viewfinders aren’t full res, they have an edge detect mode for focus assist
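To make the edge detect idea concrete, here is a minimal sketch of one way a focus assist could flag sharp detail on a low-res preview. This is not SiliconDVR's actual algorithm (the talk didn't say how theirs works); the function names, the forward-difference gradient, and the `threshold` value are all assumptions for illustration.

```python
def edge_map(gray, threshold):
    """Mark pixels with strong local contrast.

    gray: list of rows of luma values.
    threshold: hypothetical cutoff; a real tool would expose this as a knob.
    """
    h, w = len(gray), len(gray[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            # Forward differences to the right and downward neighbours.
            gx = gray[y][x + 1] - gray[y][x]
            gy = gray[y + 1][x] - gray[y][x]
            edges[y][x] = abs(gx) + abs(gy) >= threshold
    return edges


def focus_score(gray, threshold):
    """Fraction of pixels flagged as edges; higher suggests sharper focus."""
    edges = edge_map(gray, threshold)
    flagged = sum(cell for row in edges for cell in row)
    return flagged / (len(gray) * len(gray[0]))


# Same black-to-white transition, once as a hard step and once as a soft ramp.
sharp = [[0, 0, 255, 255] for _ in range(4)]
soft = [[0, 85, 170, 255] for _ in range(4)]
print(focus_score(sharp, 100), focus_score(soft, 100))
```

The hard step scores higher than the soft ramp, which is the cue an operator would look for while pulling focus.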

a live histogram for EACH eye

all of the different preview modes are things that people asked for

3D lookup table – apply color correction – (ASC CDL compliant?) – gives a rough preview of debayering and color correction based on look file you select

(.look files from Iridas format)

none of the looks are baked in – just metadata
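A rough sketch of what "just metadata" can mean in practice: the clip keeps its untouched pixels plus the name of a look, and the 3D lookup table is only applied when building a preview. The LUT here is a hand-built identity table with trilinear interpolation, not a parsed Iridas .look file, and the `clip` / `looks` records are hypothetical.

```python
def make_identity_lut(size):
    """Build a size^3 table mapping each grid point to itself (values 0..1)."""
    step = 1.0 / (size - 1)
    return [[[(r * step, g * step, b * step)
              for b in range(size)]
             for g in range(size)]
            for r in range(size)]


def apply_lut(lut, rgb):
    """Trilinearly interpolate the LUT at an RGB triple with channels in 0..1."""
    size = len(lut)
    idx, frac = [], []
    for c in rgb:
        pos = min(max(c, 0.0), 1.0) * (size - 1)
        lo = min(int(pos), size - 2)  # keep lo + 1 inside the table
        idx.append(lo)
        frac.append(pos - lo)
    out = [0.0, 0.0, 0.0]
    # Blend the 8 surrounding grid points, weighted by distance on each axis.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((frac[0] if dr else 1 - frac[0]) *
                          (frac[1] if dg else 1 - frac[1]) *
                          (frac[2] if db else 1 - frac[2]))
                corner = lut[idx[0] + dr][idx[1] + dg][idx[2] + db]
                for ch in range(3):
                    out[ch] += weight * corner[ch]
    return tuple(out)


# Hypothetical clip record: source pixels plus a look *name*, nothing baked in.
looks = {"identity": make_identity_lut(5)}
clip = {"pixels": [(0.25, 0.5, 0.75), (0.1, 0.2, 0.3)], "look": "identity"}

preview = [apply_lut(looks[clip["look"]], p) for p in clip["pixels"]]
```

Swapping `clip["look"]` for another table changes the preview while `clip["pixels"]` stays exactly as recorded.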

can declutter the UI

the external display, the second viewfinder, can be made more useful now

now a digital slate with metadata, embedded into first frame of the shot

viewfinder hotspot now split left/right

different modes – red/cyan anaglyph, 50/50, wiggle – toggles L/R eyes really fast, side/side for those that can defocus, splitscreen
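For anyone unfamiliar with these preview modes, here is a small sketch of how each one could be composed from a left and right frame. It uses tiny nested lists of RGB tuples rather than real video frames, the function names are mine, and putting red on the left eye is the usual red/cyan convention rather than something confirmed in the talk.

```python
def anaglyph(left, right):
    """Red channel from the left eye, green and blue (cyan) from the right."""
    return [[(l[0], r[1], r[2]) for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]


def blend_50_50(left, right):
    """Average the two eyes so misalignment shows up as ghosting."""
    return [[tuple((a + b) // 2 for a, b in zip(l, r))
             for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]


def side_by_side(left, right):
    """Place the eyes next to each other (full width, no squeeze)."""
    return [lrow + rrow for lrow, rrow in zip(left, right)]


def wiggle(left, right, frame_index):
    """Alternate eyes on successive display frames."""
    return left if frame_index % 2 == 0 else right


# One-pixel "frames" keep the example readable.
left = [[(200, 10, 10)]]
right = [[(20, 100, 220)]]

print(anaglyph(left, right))
print(blend_50_50(left, right))
print(side_by_side(left, right))
```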

if you have a mis-set lens, if you have a vertical shift between the two lenses, can go into the setup as a non-destructive fix live before you hit record

-very few 3D mount rigs are mechanically perfect – you’re going to have to tweak it in post

-to get as close as possible before you hit record, can dial in your rig with alignment modes that do a non-destructive metadata fix

-is there a way to close the loop and calibrate the image offsets? In theory yeah, but practically it isn’t being done yet – there are folks working on it

-“there are no rules in 3D, only guidelines – that book has not been written yet”

You’ve got so many different things that can go wrong, you should try to minimize error at every step along the way – try to catch and fix it early

-beam splitter rigs – can flip if you need to – L for R, top for bottom, etc.

-L and R aren’t hard locked, can flop it around and switch in software to re-sync it up
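The alignment fixes described above (flip, x/y shift, rotation, all as metadata) can be sketched as a small per-eye record that is applied to coordinates at display time. The field names, the order of operations (flip, then rotate about the frame center, then shift), and the example values are assumptions, not the SiliconDVR format.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EyeCorrection:
    """Hypothetical per-eye alignment metadata; the recorded frames are untouched."""
    flip_h: bool = False
    flip_v: bool = False
    shift_x: float = 0.0       # pixels
    shift_y: float = 0.0       # pixels
    rotation_deg: float = 0.0  # about the frame center


def correct_point(corr, x, y, width, height):
    """Map a source pixel coordinate to its corrected display position."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    dx, dy = x - cx, y - cy
    if corr.flip_h:
        dx = -dx
    if corr.flip_v:
        dy = -dy
    a = math.radians(corr.rotation_deg)
    rx = dx * math.cos(a) - dy * math.sin(a)
    ry = dx * math.sin(a) + dy * math.cos(a)
    return (rx + cx + corr.shift_x, ry + cy + corr.shift_y)


# Example: right eye sits 3 px low on the rig; left eye comes off a mirror.
right_fix = EyeCorrection(shift_y=-3.0)
left_fix = EyeCorrection(flip_h=True)

print(correct_point(right_fix, 100, 200, 2048, 1152))
print(correct_point(left_fix, 0, 0, 2048, 1152))
```

Because the fix lives in a small record rather than in the pixels, it can be dialed in before hitting record and still be changed later in post.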

Q: interaxial on these cameras?

A: limit of your PL mount is the limit of how close they can get – somewhere under 3 inches minimum, anything less than that and you are into a beam splitter rig

spot focus meter – can see a zoom-in, no lens identity in the metadata, no focus info in the metadata. There is capability of integrating lens metadata into clip metadata (in theory, limited applicability implied)

can do AVI or QT, Cineform or 12 bit linear / 10 bit log uncompressed, SSDs can record uncompressed 12 bit RAW @ 2K in realtime @ 24p now (how much higher unknown)
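A back-of-envelope check on why SSDs matter here: the sustained data rate for uncompressed 12 bit RAW at 24p. This assumes the 2048×1152 sensor size quoted in these notes, one 12 bit sample per photosite (raw Bayer), tightly packed samples, and no container overhead, none of which was spelled out in the talk.

```python
def uncompressed_rate_mb_per_s(width, height, bits_per_sample, fps, streams=1):
    """Sustained write rate in decimal megabytes per second."""
    bytes_per_frame = width * height * bits_per_sample / 8
    return bytes_per_frame * fps * streams / 1e6


mono = uncompressed_rate_mb_per_s(2048, 1152, 12, 24)
stereo = uncompressed_rate_mb_per_s(2048, 1152, 12, 24, streams=2)
print(f"2D: {mono:.1f} MB/s, 3D: {stereo:.1f} MB/s")
```

Under those assumptions that is roughly 85 MB/s for one eye and about 170 MB/s for a stereo pair, sustained.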

single or dual file for 3D, single file MUCH easier!

3D external display – can do mesh, split, interlaced, side-by-side, etc.

3D alignment guides can be set,

can reduce the complexity – turn off options in the prefs that will display later – only show what you want to show

-sensor aspect ratio – 16:9 – is exactly DCI – 2048×1152, Bayer pattern, effective resolution is scene dependent, (mikenote – usual Bayer rule of thumb is 1.4 – so roughly 1500 x 800ish res at best in THEORY – dunno practice!!!)
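The mikenote arithmetic, spelled out. The 1.4 divisor is only the rule of thumb mentioned above, not a measured figure for this sensor, and the function name is mine.

```python
def effective_resolution(width, height, bayer_factor=1.4):
    """Divide each dimension by a rule-of-thumb Bayer factor."""
    return round(width / bayer_factor), round(height / bayer_factor)


print(effective_resolution(2048, 1152))  # (1463, 823)
```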

Q&A:

——-

Q: always connected by Ethernet, no SDI output?

A: Correct – if connected SDI to record to some other device, would entail realtime debayer and cooking in the look – not what they are into. Always connected to something that is running the SiliconDVR application

Q: menu to set up timecode to sync 2 cameras?

A: Time of Day set by PC’s internal clock, can start from zero for every clip, or slave it to a timecode reader- Ambient, Adrian TC readers, etc. – it’ll sync to whatever that is, and it’ll drop that onto each feed.
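As a sketch of the time-of-day option: turn a clock reading into an HH:MM:SS:FF stamp and drop the same stamp on both feeds so left and right frames can be matched later. This assumes non-drop-frame timecode at an integer frame rate; the function names and the `feeds` dictionary are made up for the example.

```python
def time_of_day_to_timecode(hours, minutes, seconds, fraction, fps=24):
    """Format a clock reading as non-drop-frame timecode.

    fraction: sub-second part of the clock, 0.0 <= fraction < 1.0.
    """
    frames = int(fraction * fps)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def timecode_to_frames(tc, fps=24):
    """Count frames since 00:00:00:00 for a non-drop-frame timecode string."""
    h, m, s, f = (int(part) for part in tc.split(":"))
    return ((h * 60 + m) * 60 + s) * fps + f


# One clock reading stamped onto both eyes keeps them frame-matched.
stamp = time_of_day_to_timecode(14, 5, 9, 0.5)
feeds = {"left": stamp, "right": stamp}
print(feeds, timecode_to_frames(stamp))
```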

Q: one feed split or two separate?

A: depends on whether recording one or two files – old setup had grief about figuring out what was the first frame with two record systems – now isn’t a problem

Q: combined file – howzat work?

A: Cineform can answer that better?

Q: tech requirements for single recording system for 3D:

A: fast disk, fast processor, dual core is fine, something wheeled, weighs 50 pounds, takes 2 folks to drag it along, or use “one of these” – a little bitty CineDeck – a tiny box you put a battery on the back, SSD slots in, touchscreen on front, record time is a function of compression and 2D/3D, frame rate/resolution, etc. – generally speaking in the 30-60 minute record time frame on a commodity sized SSD
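To see how record time falls out of those variables, here is a minimal estimate. The 256 GB capacity is a hypothetical "commodity" SSD size I picked, and the rate constant assumes uncompressed 12 bit RAW at 2048×1152 and 24 fps with tightly packed samples; Cineform compression would stretch these numbers by whatever ratio it achieves, which I don't have.

```python
# Uncompressed single-eye rate in MB/s: width * height * 12 bits / 8 * 24 fps.
RATE_2D_MB_PER_S = 2048 * 1152 * 12 / 8 * 24 / 1e6


def record_minutes(capacity_gb, rate_mb_per_s):
    """Minutes of recording that fit on a drive of the given decimal capacity."""
    return capacity_gb * 1000 / rate_mb_per_s / 60


minutes_2d = record_minutes(256, RATE_2D_MB_PER_S)
minutes_3d = record_minutes(256, 2 * RATE_2D_MB_PER_S)
print(f"2D: {minutes_2d:.0f} min, 3D: {minutes_3d:.0f} min")
```

With these assumed numbers a single eye lands inside the quoted 30-60 minute window, and an uncompressed stereo pair halves it.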

Q: if in the middle of nowhere, how do I monitor?

A: you’re remoting it in some way – SI-2K has an OLED viewfinder, but there is no viewfinder per se – you’d need to send a wire or fiber back with a display

Q: delay?

A: YES – usually less than 2 frames from when captured to displayed. The best displays add slightly less than one frame of delay, so not too much of a delay

Q: a way to record without someone on a computer?

A: Yes, you can single user operate with this, but it depends on what your field setup is – some people put laptops in backpacks with a remote record button, etc.