Last November, RED Digital Cinema Camera Company released an installer adding RED functionality to Apple Final Cut Studio 2:

- RED QuickTime Codec v3.7.0 (read-only REDCODE decoding for QuickTime).

- An FCP Log and Transfer plugin, v1.0.0, to read RED’s compressed raw R3D files and import them as REDCODE-native or transcode them to ProRes422.

- A RED tab in Color’s primary grading room, letting you tweak the RAW parameters of an R3D or MOV file using the REDCODE codec.

- A white paper telling you what to do with all this stuff.

Let’s look at how these additions enable using RED footage in Final Cut Studio, how you set them up, and how they can benefit you.

Why Bother?

FCP already lets you use the QuickTime Proxies made by the camera or by RED Alert, and you can use the RED tools to render REDcode to ProRes422 HQ (and nowadays, in REDrushes and REDline, you can even preserve the audio while doing so [update: REDCine 3.1.8, released 28 Jan 2009, also embeds camera audio in its QuickTimes]).

Arguably, we don’t need anything more. However, consider these points:

- For ProRes clips, the RED FCP plugins do a faster transcoding job (at least on my MacBook Pro) than the standalone RED tools do, for the same level of quality.

- For REDcode-native clips, FCP-imported clips are self-contained movies, so they’re much easier to manage than the reference files written by the camera and various RED tools. Also, FCP sticks ’em into your Capture Scratch directory right away, just like any other FCP-imported clip.

- FCP can apply basic color and exposure corrections upon import, whereas camera-generated QuickTimes are stuck with camera-viewing settings.

- The RED FCP installer is a free download.

What’s not to like?

As for why you’d stay raw when the final product will render out as a 2K ProRes, uncompressed, or DPX sequence:

- Freedom in grading: you don’t “bake” any decoding/debayering decisions into the media at ingest time. When you go to color-correct your clips, you still have the 12-bit, 4:4:4 RGB data to work with, as opposed to a 10-bit, 4:2:2 rendering thereof.

- It’s a lot faster to ingest raw files than to transcode them upon ingest; if you’re doing a rough assembly on a tight schedule, or even just looking at dailies, raw gives you satisfaction sooner, even if you still take a rendering hit down the road.

The RED FCP Installer requires:

- OS X 10.4.11, 10.5.5, or later.

- QuickTime 7.5.5 or later.

- Final Cut Studio 2.

- FCP 6.0.5, Color 1.0.3, Compressor 3.0.5 (contained in the Pro Apps Update 2008-04).

- An Intel Mac with 2 GB RAM.

RED highly recommends a 4-core or 8-core MacPro, and all I can say is: it can’t hurt. In these distressed times, though, not everyone can pony up the scratch for a fire-breathing Octocore, or even a hot-sauce-snorting Quadcore. Right now, I’m using a tea-sipping Dualcore: a 2.33 GHz Core2Duo 15″ MacBook Pro. While you might not choose to cut long-form RED projects on such a machine, it’s very common to use a MacBook Pro as a field ingest station, and more folks than you might imagine have main machines of this class. If your cutting station is blessed with more cores, read on; consider that your performance will only be better.

New Choices in FCP

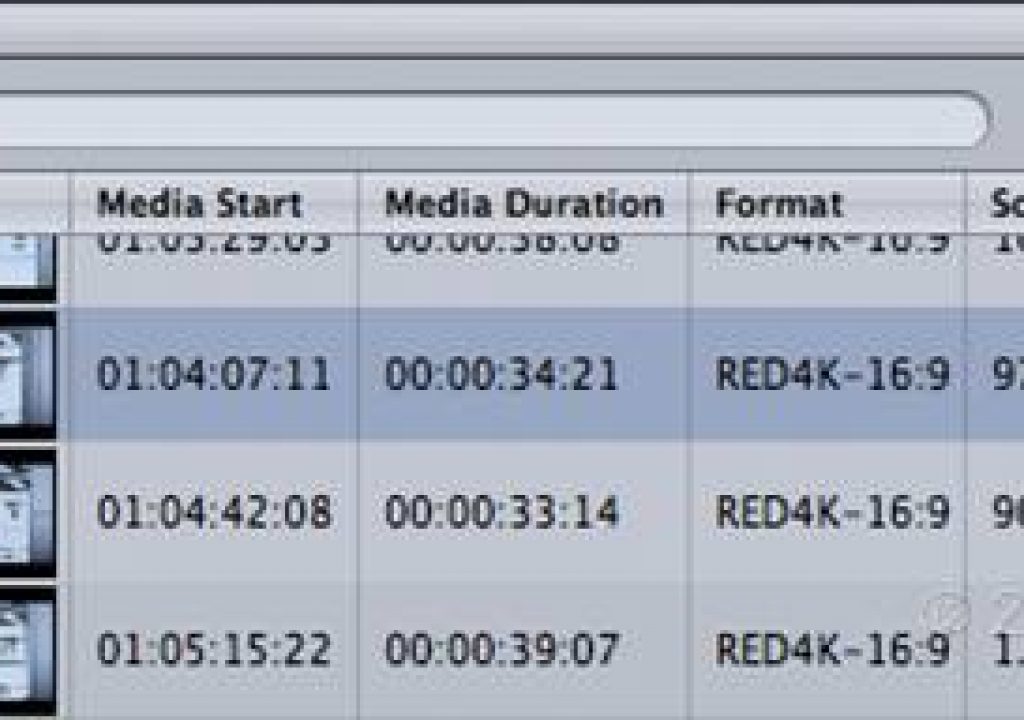

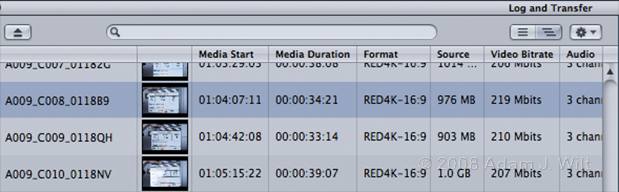

Once you’ve installed the RED add-ons, you’ll see some new options integrated into FCP and Color. In FCP, the Log and Transfer window shows REDcode clips.

FCP’s Log and Transfer window viewing disk dumps of REDcode CF cards.

The Log and Transfer Import Preferences has an entry for the RED FCP Log and Transfer plugin (scroll down in the Import Preferences window to see it, or drag the window a bit larger, as I’ve done in the screenshot):

REDcode preference set for native imports.

You can choose ProRes422, ProRes422HQ, or REDcode-native importing. The ProRes options are just what you’d expect; FCP will read the RED’s R3D files and transcode them to ProRes. The native setting is just that: the R3D file essence—the 12-bit, wavelet-compressed raw data—is rewrapped as a self-contained QuickTime file, and placed in your Capture Scratch directory.

Now how much would you pay? But wait, there’s more… the “gears” dropdown menu has a RED FCP submenu, letting you choose a “look” to apply to the imported footage:

Log and Transfer looks, with “6548K” and “posterize” looks created in RED Alert.

“Native” uses the settings in force when the clip was recorded: the color space, gamma, color temperature, and tint set up for monitoring (some folks on REDuser.net report that clips recorded with “raw” monitoring come across with REDspace gamma and color). The other presets apply different preset color balances; very handy if you shoot, as I prefer, with the camera always set to 5000K, so that the false-color level meter can be relied upon (the purple “overload” color only works for non-raw viewing modes at color temperatures close to 5000K… but I digress).

You can also create a “look” file in RED Alert—with some limitations. The look file is the same .RLX preset you normally save and load in RED Alert (one of RED’s free utilities), but the RED FCP plugin only pays attention to the “ISO” and “Matrix” parameters; any curves you create are ignored, as are color space, gamma, denoise, and OLPF Compensation settings.
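To make the limitation concrete, here is a hypothetical model of which parts of a RED Alert look survive Log and Transfer. The dictionary keys are illustrative placeholders, not the actual .RLX format; the only thing taken from the text is the rule itself: ISO and Matrix are honored, and everything else is ignored.

```python
# Hypothetical model of how the RED FCP Log and Transfer plugin treats a
# RED Alert look (.RLX). Key names are placeholders, NOT the real .RLX format;
# the rule (keep ISO and Matrix, drop the rest) is the behavior described above.

HONORED_KEYS = {"iso", "matrix"}

def effective_look(look: dict) -> tuple[dict, list[str]]:
    """Split a look into the parameters Log and Transfer applies and those it drops."""
    applied = {k: v for k, v in look.items() if k in HONORED_KEYS}
    ignored = sorted(k for k in look if k not in HONORED_KEYS)
    return applied, ignored

look = {
    "iso": 640,
    "matrix": {"kelvin": 6548, "tint": 0.0, "saturation": 1.0},
    "curves": "custom S-curve",      # ignored
    "color_space": "REDspace",       # ignored: the in-camera setting wins
    "gamma": "Rec709",               # ignored: the in-camera setting wins
    "denoise": 1,                    # ignored
    "olpf_compensation": 0,          # ignored
}

applied, ignored = effective_look(look)
print(applied)
print(ignored)
```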

The controls in RED Alert. Only the ISO and Matrix settings are used in Log and Transfer.

That’s important; let me say it again: the color space and gamma you shoot with are what you’ll get using Log and Transfer.

If you’re trying to match Logged and Transferred clips with clips processed in REDCine, REDRushes, RED Alert, or other RED tools, you won’t have any luck in FCP unless you used the same gamma and color space in the RED tools as you did in camera when you shot (though you can change these parameters in Color, you can’t in FCP).

Trust me: If you shoot something in REDspace, Log and Transfer it into FCP, and then try to match it to a REDRushes or REDCine rendering of the same clip transcoded with Rec.709 gamma, you can easily drive yourself mad trying to make the two versions look alike, because they just can’t be matched with simple level, gain, and gamma tweaks. If, on the other hand, the RED-rendered clip uses REDspace just like the camera was set for, you can quickly match one to the other with just a couple of adjustments.

When you Log and Transfer a RED clip, it gets treated differently depending on its size:

- 4K clips import at half size, e.g., 4K 16:9 comes in as 2048×1152.

- 3K clips come in at original size.

- 2K clips come in at original size.

Note, therefore, that if you shoot 4K HD (3840×2160) it’ll import as 1920×1080, ready to drop into a 1080p timeline with no further rescaling needed.
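The sizing rule above can be written as a small function. Classifying a clip as "4K" by a width of 3840 or more is an assumption made for this sketch, and the frame sizes are 16:9 examples.

```python
# Sketch of the Log and Transfer sizing rule described above: 4K clips come
# in at half size, 3K and 2K clips at original size. The ">= 3840 wide means
# 4K" test is an assumption for this example.

def fcp_import_size(width: int, height: int) -> tuple[int, int]:
    if width >= 3840:                  # assumed 4K threshold (4K HD and 4K 16:9)
        return width // 2, height // 2
    return width, height               # 3K and 2K: original size

for src in [(4096, 2304), (3840, 2160), (3072, 1728), (2048, 1152)]:
    print(src, "->", fcp_import_size(*src))
```

Note how 4K HD lands exactly on 1920×1080, while 4K 16:9 lands on 2048×1152 and still needs scaling to fit a 1080p sequence.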


Changes in Color

In Color, you can import RED clips directly from the R3D files, or from Logged & Transferred QuickTimes in Final Cut.

Browsing a RED “folder” in Color.

Note that Color shows a REDcode clip’s timecode using the time-of-day timecode. If you’ve set up the camera to use edge code for the displayed timecode track, FCP’s Log and Transfer shows edge code, but Color still shows time-of-day (regardless of whether the clip is a raw R3D or a Logged and Transferred QuickTime). This display discrepancy doesn’t break sending an FCP project using edge code to Color, but it can be a bit disconcerting.

As in FCP, Color halves the size of 4K files, importing them as 2K. Unlike FCP, Color also halves the size of 3K files, whether loading them directly or through an FCP-logged QuickTime.

This asymmetry of treatment makes 3K RED-native files problematic in an FCP/Color workflow; you might find it useful to work with 3Ks for some room to zoom / motion-stabilize / pan ‘n’ scan, but Color will return you 1.5K rendered versions—probably not what you were hoping for! If 3K is in your plans, you should render it to ProRes, uncompressed, or another format before sending it to Color, and bear in mind that Color can’t work with project resolutions beyond 2K regardless of how the clips come in.
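The difference between the two applications can be sketched as follows. The width thresholds used to classify clips as 4K or 3K are assumptions for the example, and 3072×1728 stands in as a representative 3K 16:9 frame.

```python
# Sketch contrasting FCP's Log and Transfer sizing with Color's, as described
# above: both halve 4K, but only Color also halves 3K. The classification
# thresholds are assumptions for this example.

def classify(width: int) -> str:
    if width >= 3840:
        return "4K"
    if width >= 2880:                  # assumed 3K threshold
        return "3K"
    return "2K"

HALVED = {"FCP": {"4K"}, "Color": {"4K", "3K"}}

def import_size(app: str, width: int, height: int) -> tuple[int, int]:
    if classify(width) in HALVED[app]:
        return width // 2, height // 2
    return width, height

w, h = 3072, 1728                      # representative 3K 16:9 frame
print("FCP:  ", import_size("FCP", w, h))     # full-size 3K
print("Color:", import_size("Color", w, h))   # the "1.5K" version
```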

The installer adds a RED tab in the Primary room:

Fiddling with REDcode raw decoding in Color.

FCP-imported QuickTimes come in with their color temperature, tint, and other parameters intact; if you brought them in with a look created in RED Alert, those settings persist. If you bring a raw R3D file in, you get the look metadata set in the camera during the shoot.

You can tweak the RED tab parameters to affect how the raw data is decoded and deBayered (crucially, you can change the color space and gamma used for the decoding/deBayering in Color, which FCP does not allow you to fiddle with). Since tweaking happens with the full 12-bit raw image, you’ll preserve more quality by making these changes in the RED tab than by making comparable changes “downstream” in the Primary In, Secondaries, and Primary Out rooms.

I only ran into one issue with the FCP-Color roundtrip, and that may be due to my unfamiliarity with the workflow. All my 2048×1152 REDcode clips are shrunk to 93.75% to fit into a 1920×1080 sequence. When I use FCP’s File > Send To > Color, grade my clips in Color, render, and then use Color’s File > Send To > Final Cut Pro, I get a new 1920×1080 sequence with graded 1920×1080 ProRes clips in it… shrunk down to 93.75%! I had to go through manually and reset the clips’ sizes to 100% so they properly filled the screen. (I’m sure that someone will add a comment telling me what I should have done to avoid this problem… please!)
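The 93.75% figure is just the ratio of sequence size to clip size, and because 2048×1152 and 1920×1080 share the same 16:9 aspect ratio, one factor fits both axes. A quick check:

```python
from fractions import Fraction

# Scale factor needed to fit a half-res 4K 16:9 clip into a 1080p sequence.
clip_w, clip_h = 2048, 1152
seq_w, seq_h = 1920, 1080

scale = Fraction(seq_w, clip_w)
assert scale == Fraction(seq_h, clip_h)   # same aspect ratio, so one factor fits both axes
print(f"{float(scale):.2%}")              # 93.75%
```

Color’s rendered clips are already 1920×1080, so they need a scale of 100%; the 93.75% setting only made sense for the original 2048×1152 media.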

Quality

- 2K and 3K files import at full resolution and size, equivalent to choosing “Debayer Quality: Full Res” in REDRushes.

- 4K files come in at half size using “Debayer quality: Half Res (Standard)” by default; you can change this to “Half Res (High)” if you prefer.

If you want to tweak 4K decoding, use the System Preferences REDcode control panel. This requires installing the v.3.5.0 (beta) QuickTime codec to get the control panel—yes, I know it’s an older version than the one that’s in the RED FCS Installer. Quit whining, willya?

The REDcode settings option appears in the “Other” section of System Prefs.

REDcode settings in 10.4.11. Whoops! The SysPrefs window isn’t wide enough for this panel, which was designed for 10.5. (The settings work nonetheless.)

REDcode settings look a lot better in 10.5.5, and work the same way.

You are offered three simple choices:

- Half High: Higher-quality, but slower decoding.

- Half Standard: default; roughly twice as fast as Half High, but slightly less accurate.

- Auto: The codec chooses based on what the app requests: REDcode-native clips are rendered in standard or high quality depending on whether the timeline is set for 8-bit or higher quality rendering. Clips transcoded to ProRes422 during Log and Transfer always seem to transcode as High quality in Auto mode.
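The three settings, plus the observed behavior of Auto, fit in a small decision function. This is a hypothetical model of what I observed, not RED’s code; the function and argument names are made up for illustration.

```python
# Hypothetical model of which half-res debayer quality a 4K REDcode clip gets,
# based only on the behavior observed above. Names are illustrative; this is
# not RED's implementation.

def debayer_quality(pref: str, timeline_8bit: bool, prores_transcode: bool) -> str:
    """Return "High" or "Standard" for a 4K half-res decode."""
    if pref == "Half High":
        return "High"
    if pref == "Half Standard":
        return "Standard"
    if pref == "Auto":
        if prores_transcode:
            # Observed: ProRes transcodes during Log and Transfer always seem to use High.
            return "High"
        # REDcode-native clips follow the sequence's render setting.
        return "Standard" if timeline_8bit else "High"
    raise ValueError(f"unknown REDcode preference: {pref!r}")

print(debayer_quality("Auto", timeline_8bit=True, prores_transcode=False))
```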

The quality of the decode appears to be identical to the standalone RED tools given the same parameters. As discussed above, if the same gamma and color space parameters are used in REDrushes or REDCine as in FCP, the color and tonal scale of the FCP-imported clips will match those of clips from the standalone RED tools, with one exception: FCP-imported clips have darker midtones.

This is the same 1.8 vs. 2.2 gamma shift that has plagued FCP users for years, and no, setting user preferences to import RGB at a 2.20 gamma level won’t fix this one: the gamma shift appears to be hardwired. You can match things after the fact by adding a gamma correction of around 0.82 to the FCP-imported clip, or a 1.22 correction to the RED-transcoded clip, and then adding minor gain and saturation tweaks as needed. Tedious, but workable.
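As a sanity check on the correction values above: to two decimal places, 0.82 and 1.22 are 1.8/2.2 and its reciprocal 2.2/1.8, which is consistent with a 1.8 vs. 2.2 gamma shift. This sketch only checks the arithmetic; whether a given filter brightens or darkens for a value below 1 depends on how that filter defines its gamma parameter.

```python
# Check that the quoted corrections line up with a 1.8 vs. 2.2 gamma shift.
MAC_GAMMA, VIDEO_GAMMA = 1.8, 2.2

fcp_clip_fix = MAC_GAMMA / VIDEO_GAMMA   # ~0.82, applied to the FCP-imported clip
red_clip_fix = VIDEO_GAMMA / MAC_GAMMA   # ~1.22, applied to the RED-transcoded clip
print(round(fcp_clip_fix, 2), round(red_clip_fix, 2))

# As power-law adjustments on a normalized value, the two are inverses:
# applying one and then the other returns the starting value.
mid_gray = 0.5
roundtrip = (mid_gray ** fcp_clip_fix) ** red_clip_fix
print(round(roundtrip, 6))
```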

High quality debayering takes roughly twice as long as Standard quality on my MacBook Pro, but the actual time ratio seems to depend on clip content; I’ve seen time ratios ranging from 1.4:1 to 2.3:1 depending on the clip.

High quality is very slightly better than standard: at first glance, a High quality and a Standard quality clip are identical. It’s only when one looks at pixel-level details that one sees marginal improvements in the High quality images compared to Standard. Even then, one might prefer Standard: it’s a bit coarser, but a bit sharper looking.

For most HD shows, I might not sweat the difference. For film-out, or if I’m feeling really finicky, I would. All the following images are from ProRes422 HQ renders:

An entire frame (4K 16:9 original, REDCODE 36 capture).

Detail: Standard quality half-res decode to 2048×1152.

Detail: High quality half-res decode to 2048×1152.

Of course, there’s just a tiny increment more detail in a full-res 4K decode, which gives the sharpness of Standard but with smoother diagonals and contours:

Detail: REDrushes full-res decode to 2048×1152.

That’s the advantage of supersampling; 4K of data in a 2K frame looks better than 2K of data in a 2K frame.

Then again, a full-res decode takes about four times as long as a half-res decode, whether using REDCine or REDrushes; whether the slight increase in detail is worth the time hit is something you’ll need to decide for yourself (but then, if you’re on a time-sensitive schedule, you’re probably using, or should be using, an Octocore MacPro, or several of them, to crunch your data for final output).
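The roughly fourfold time hit matches the pixel counts, assuming decode time scales more or less with the number of pixels debayered (a simplification): halving each dimension leaves a quarter of the pixels.

```python
# Pixel counts for a full-res vs. half-res decode of a 4K 16:9 frame.
full = 4096 * 2304    # full-res 4K decode
half = 2048 * 1152    # half-res decode
print(full, half, full / half)
```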

Don’t worry if the differences don’t leap out at you; they’re subtle. You may see them more easily by downloading the images, enlarging them 2x or 3x, and flipping between them. Arguably, if you have to go through this much work to see the differences, then “half standard” is good enough for real-world work, or “half high” if you’re really picky; practically speaking, there’s probably not a lot to be gained from a slow, full-res decode of a 4K image at 2K size.

For resizing the 4Ks, the standalone RED tools let you choose the resampling filter, while the Log and Transfer plugin does not. For what it’s worth, I normally use Lanczos filtering in REDrushes for downsampling, and the Logged and Transferred clips look identical in the fine details. I haven’t done any side-by-side tests with other REDrushes resampling filters yet, but I haven’t found anything to complain about with Lanczos (which, like sinc, is frequently recommended for downsizing images).
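For the curious, here is a minimal 1D sketch of what a Lanczos 2:1 downsample involves, the same reduction as going from 4096 to 2048 pixels across. It is a generic textbook Lanczos filter (a = 3, edges clamped), not the filter used inside REDrushes or the Log and Transfer plugin; an image resizer would run it along rows and then along columns.

```python
import math

def lanczos(x: float, a: int = 3) -> float:
    """Lanczos kernel: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def downsample_2x(samples: list[float], a: int = 3) -> list[float]:
    """Halve a row of samples with a Lanczos filter widened by the scale factor."""
    scale = 2.0
    out = []
    for i in range(len(samples) // 2):
        center = (i + 0.5) * scale - 0.5          # output pixel center in input coordinates
        lo = math.floor(center - a * scale) + 1   # first input sample inside the kernel
        hi = math.floor(center + a * scale)       # last input sample inside the kernel
        total = weight_sum = 0.0
        for j in range(lo, hi + 1):
            w = lanczos((j - center) / scale, a)
            k = min(max(j, 0), len(samples) - 1)  # clamp reads at the edges
            total += w * samples[k]
            weight_sum += w
        out.append(total / weight_sum)            # normalize so flat areas stay flat
    return out

print(downsample_2x([0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0]))
```

Widening the kernel by the scale factor is what lets every input pixel contribute to the output, which is the supersampling benefit described above.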

Performance

I measured performance using a couple of half-minute clips, and also by processing all 24 minutes from a recent fake commercial shoot. I saw a fair amount of variance in performance using the standalone RED tools, with some tests running about 30% faster at some times than at others. Generally, the faster times were seen on the single clips, with the slower times on the entire show, but sometimes I’d see variance even on the same clip. Given this, and the nature of my setup, take my performance numbers as rough indicators only, not firm measurements.

All my performance measurements were done on my dual-core 2.33 GHz MacBook Pro, reading and writing to a two-drive eSATA RAID 1 (using OS X’s software RAID, so writes require twice the data transfer that reads do). I used 4K 16:9 (4096×2304) REDcode 36 originals, which were converted to 2K 16:9 (2048×1152) clips in all the methods I tried.

Importing REDcode into FCP is much like importing DVCPRO HD from a P2 card: you’re simply rewrapping the native essence in a QuickTime wrapper. It’s a lot faster than transcoding, taking about 1.6:1 to 2:1 to transfer, or from about 60% longer than real time to twice real time. That’s mostly I/O time; the lights on my eSATA card were almost constantly illuminated.

Importing as ProRes422 HQ at standard quality ran at about 8:1, or eight times real time. That’s not fun, but consider that the same transcode done in REDrushes took about three times longer (23:1 to 27:1), and REDCine, transcoding at “standard” quality, took two to three times as long (16:1 to 26:1).
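The ratios read as processing time to footage duration, so 8:1 means eight minutes of processing per minute of footage, and dividing one ratio by another gives the relative speed of two methods. Using the standard-quality numbers above:

```python
# Relative speed of the standalone tools vs. Log and Transfer for
# standard-quality ProRes422 HQ transcodes (ratios from the measurements above).

def times_as_long(other: float, baseline: float) -> float:
    """How many times longer a method with ratio `other` takes than `baseline`."""
    return other / baseline

LOG_AND_TRANSFER = 8
measured = {"REDrushes": (23, 27), "REDCine": (16, 26)}

for tool, (best, worst) in measured.items():
    lo = times_as_long(best, LOG_AND_TRANSFER)
    hi = times_as_long(worst, LOG_AND_TRANSFER)
    print(f"{tool}: {lo:.1f}x to {hi:.1f}x as long as Log and Transfer")
```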

Clearly, on a dual-core machine, Log and Transfer is a much quicker way to generate ProRes 2K clips than the standalone tools. (Posts on reduser.net imply that 4-core and 8-core machines fare considerably better with the standalone tools, using all cores, while Log and Transfer does not scale quite so well across more than two cores. Once I get my hands on an 8-core machine, I’ll test the tradeoff myself.)

Bumping up to high quality led to an increase in ProRes transcoding times: Log and Transfer ran at about 18:1, while REDrushes took 37:1, or twice as long as Log and Transfer.

For comparison, a REDrushes export at full, 4K debayering quality took 128:1, while REDCine’s full-quality transcode took 69:1.

While Log and Transfer is the clear winner here, I think it’s safe to say that regardless of transcode or transfer methods, you probably don’t want to crunch all the data for your feature, or even a half-hour TV show, on a solitary MacBook Pro!
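To put that in wall-clock terms, here is a rough estimate for the 24 minutes of footage from my shoot, assuming each measured ratio held steady across the whole show. Since I saw variance of up to 30%, treat the results as ballpark figures only.

```python
# Rough wall-clock estimate for 24 minutes of footage at each measured ratio
# (processing time : footage duration). Assumes the ratios stay constant,
# which the measurements above suggest is only approximately true.
FOOTAGE_MIN = 24

ratios = {
    "L&T REDcode-native (rewrap)": 2,
    "L&T ProRes, standard": 8,
    "L&T ProRes, high": 18,
    "REDrushes ProRes, high": 37,
    "REDCine full quality": 69,
    "REDrushes full 4K debayer": 128,
}

def hours(ratio: float, footage_min: float = FOOTAGE_MIN) -> float:
    """Processing time in hours for `footage_min` minutes of footage."""
    return ratio * footage_min / 60

for method, r in ratios.items():
    print(f"{method:30s} {hours(r):5.1f} h")
```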

As far as playback goes, I was pleasantly surprised. When I dropped my 2048×1152 REDcode-native clips into a 1920×1080 sequence, and turned on Unlimited RT with dynamic quality and dynamic frame rate, I got a solid 6 fps update rate and continuous sound, and that’s with a second display showing Digital Cinema Desktop output (Video Scopes, however, refused to play in real time with either REDcode-native or ProRes 2K files). During playback, image resolution dropped by perhaps half, but the picture was still fully usable, and it sharpened up as soon as I paused the playhead.

If I selected high playback video quality, I still saw 3-4 fps updating, with a pin-sharp display.

Setting REDcode preferences to “Half High” slowed high-quality playback to roughly 1 fps, but didn’t affect dynamic-quality playback speed.

(By comparison, ProRes-coded 2K clips play back at what appears to be around 12fps in dynamic quality, or about 8fps at high quality. The slow playback appears to be due to the resizing needed to fit a 2K clip into a 1080p timeline, since 1920×1080 ProRes clips play at high quality at a full 24fps.)

If I left playback quality at dynamic but set playback frame rate to Full, I was able to get 10-12 fps from the REDcode clips (after turning off “Report dropped frames during playback”), and 24fps from the ProRes clips, even as they were being resized to fit the 1080p timeline.

Turning on both full frame rate and high quality proved to me that quality wins for REDcode footage: sharp, 3-4fps playback, or 1fps with REDcode prefs set to “Half High”. But I don’t recommend this; it made the Mac cranky. I’d sometimes see a brief spinning pizza of death when I started playback, as the MacBook Pro futilely tried to preload enough frames for full-frame-rate work, and its responsiveness to mouse clicks and keypresses slowed down. High speed or high quality is the way to go on a MacBook Pro: I found that the blurrier 12fps playback with “full” frame rate was surprisingly usable.

Overall, performance, even on a MacBook Pro, is adequate for ingesting and editing short projects, like the :30 spoof spot I’m currently cutting. ProRes transcodes complete in an acceptable amount of time, and even REDcode-native playback at 10-12 fps is tolerable for rough cutting, when I choose to preserve the raw files through my editing process. I can cut long (or at least choose my selects), export the project to Color for grading, and then finalize my edit using the ProRes clips output from Color.

Still, I’d want more cores to throw at the problem for longer-form work, especially if there’s a client looking over my shoulder instead of simply reviewing exported versions.

The whole process feels very much like working on DV25 footage in the early days: I had to set my Canvas to 25% on my 233 MHz Wallstreet G3 PowerBook to get full-frame-rate playback in FCP 1, and onscreen playback was always fuzzy (the G3 just didn’t have the oomph to fully decode DV25 in real time). The DV25 editing experience got better; the RED cutting experience will grow better, too.

Now, where can I find an Octocore MacPro (or five) on the cheap, eh? 🙂