At the Tech retreat today: technical aspects of 4K capture, grading colorspaces, ACES 1.0, and… wait for it… drones for dummies!

[Update 19:15 PST: Final again (save for any additional corrections); added missing Josh Pines preso and “Snowflakes to Standards” panel; added closing presos and panels; fixed many scary typos.]

The HPA Tech Retreat is an annual gathering of some of the sharpest minds in post, production, broadcast, and distribution. It’s an intensive look at the challenges facing the industry today and in the near future, more relentlessly real-world than a SMPTE conference, less commercial than an NAB or an IBC. The participants are people in the trenches: DPs, editors, colorists, studio heads, post-house heads, academics, and engineers. There’s no better place to take the pulse of the industry and get a glimpse of where things are headed; the stuff under discussion here and the tech seen in the demo room tend to surface in the wider world over the course of the following year or two, at least if past years are any guide.

I’m here on a press pass, frantically trying to transcribe as much as I can. I’m posting my notes pretty much as-is, with minor editing and probably with horrendous typos; the intent is to give you the essence of the event as it happens. Please excuse the grammatical and spelling infelicities that follow.

Note: I’ll use “Q:” for a question from the audience. Sometimes the question isn’t a question, but a statement or clarification. I still label the speaker as “Q”.

(More coverage: Day 1, Day 2, Day 4.)

Understanding the New Acquisition

Sensor-Lens Options for 4K Acquisition – Mark Schubin

Common HD field camera & lens; 3-chip prism, B4 lens mount. Scale it up?

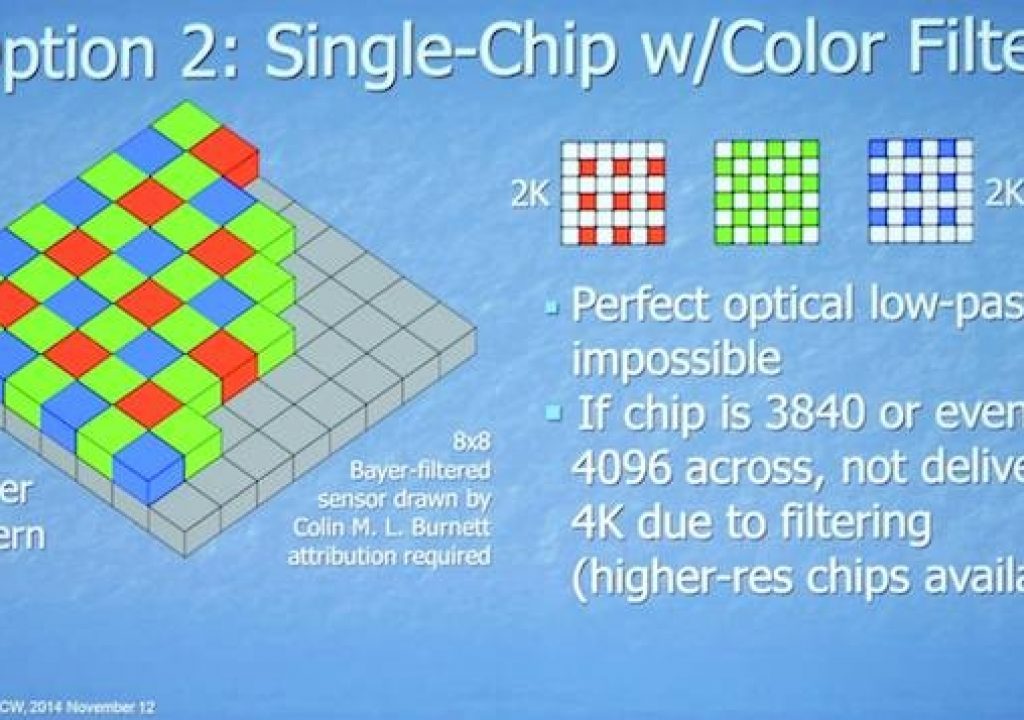

Or go to Bayer-mask single sensor:

No PL-mount long zooms yet. Adapters? Lose light; and b’cast lenses resolve for HD, not UHD. Shrink the sensors? Photon noise, etc. Pixel-offset? Hitachi is doing that in their 2/3” 4K camera. Or upconvert HD, as GV is doing.

Solar Activity and Lit/Stuck/Dead Pixels – Joel Ordesky, Court Five Productions

Why discuss lit pixels? You get them, that’s why. A client had six cameras, all with lit pixels. How did that happen? A big solar flare, one of the largest seen at the peak of an 11-year solar cycle. Nice theory; where’s the proof?

Myths: imager aging, manufacturer defects, too much heat cause lit pixels.

Truth: cosmic rays (high-energy particles) damage pixels. There’s more exposure when flying, so camera vendors ship by sea. There’s more gamma-ray exposure in Denver than in LA, and more the closer you get to the poles.

Sources: pulsars / collapsing stars 400 light-years away; lightning bursts (secondary emission off the top of thunderheads); large solar flares 9 light-minutes away. You can’t block this (practically speaking).

What is a pixel? A photodiode and photon well. A gamma particle shorts the path between photodiode and well: it stays lit. A stuck pixel is one that shows up at high gain / long exposure; dead pixels are shorted to ground. These last two can be fixed by automated pixel masking (often invoked by black-balancing). Or you can fix it in post!

Solar weather happens; lightning happens. So black-balance frequently: morning, noon, and night.

Design Challenges of Long-Range Zoom Lenses for 4K S35 Digital Cameras – Larry Thorpe, Canon

What can you get for 35mm-format long zooms? Up to 300mm, maybe 600mm. In 2/3”, more choice, 40:1 and 100:1 zooms. The 35mm equivalent would be 1500mm. For 4K S35 sensors, need longer lenses for sports, nature work. Can use B4-PL optical adapters, works, but no good in low light.

People need to shoot something 4-5 feet high from 100 meters away, 1.5x extender, wide apertures. For S35mm you need 1000mm.
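As a rough sanity check on that 1000mm figure, here is a minimal sketch using the far-subject approximation (image size ≈ focal length × subject size / distance). The 13.8mm image height and the 4.5-foot subject are my assumptions for illustration, not numbers from Larry's slides, and the function name is made up.

```python
FEET_TO_M = 0.3048

def focal_length_mm(subject_height_m, distance_m, image_height_mm):
    """Focal length (mm) at which a distant subject fills image_height_mm.

    Thin-lens, far-subject approximation: magnification ~ focal length / distance.
    """
    magnification = image_height_mm / (subject_height_m * 1000.0)
    return magnification * distance_m * 1000.0

# Assumed: a 4.5 ft subject, 100 m away, filling a 13.8 mm tall S35 frame.
needed = focal_length_mm(4.5 * FEET_TO_M, 100.0, 13.8)
print(f"{needed:.0f} mm")
```

Under those assumptions the answer lands close to the 1000mm quoted in the talk; a 1.5x extender then tightens the framing further.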

Design challenge: need ease of focusing, servo drives, wide aperture, 4K-capable, aberration control, constrained size and weight for portability: < 15 pounds, < 16”. By comparison, a normal S35mm 12:1 is much heavier/longer.

Just scaling up a 2/3” lens makes for a HUGE lens. So months and months of design work to get this:

14.5 pounds, 15.9” long.

Newly developed inner focus; large aspherics, new extender design. T5.0-8.9 to keep front diameter down to 136mm; a T2.8 would be 400mm in diameter. T5.0-8.9 is OK on a high-ISO camera, even at dusk and at night.
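The link between aperture and front diameter follows from the usual relation: entrance pupil diameter = focal length / f-number. A minimal sketch, assuming the T-stop can stand in for the f-stop (it ignores transmission loss) and that the slow end of the T5.0-8.9 range applies at the 1000mm long end; both are my assumptions, and the function name is made up.

```python
def entrance_pupil_mm(focal_length_mm, stop):
    """Entrance pupil diameter needed for a given focal length and stop."""
    return focal_length_mm / stop

# Assumed long end of 1000 mm, treating T-stops as f-stops.
for stop in (8.9, 5.0, 2.8):
    print(f"T{stop}: {entrance_pupil_mm(1000.0, stop):.0f} mm pupil")
```

The front element has to be at least as large as the pupil, so a T2.8 long end needs several hundred millimeters of glass, the same order as the 400mm quoted, while T8.9 fits comfortably inside 136mm.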

Covers 13.8mm image circle, resolves 80 line pairs / mm, very sharp at 1/2 Nyquist:

MTF holds well through zoom range, too.

Aberrations: [a massive list of possible aberrations and how they’re dealt with, too fast to keep track of or record! -AJW] Example, in a 36-element lens, might be 20 different types of glass to control chromatic aberrations. Hundreds of millions of parameters to simulate in lens design, can take months.

Optimizing use at near focus: Focus rotation 180º to trade off speed and precision. Trading off spherical aberration and MTF (they work against each other). Adding an extender increases aberrations by square of the extender factor, so adds more challenges.

Video/D-Cinema Camera/Sensor Noise – Charles Poynton

Photon noise: how does noise change as pixels get smaller? As Larry showed, big sensors need big lenses, so can we make ‘em smaller?

What if there were noise in the light as it hits the lens? At the size of pixels we’re using, you can count the # of photons that arrive at each pixel, maybe in the 1000s to tens of 1000s. The discrete nature of that optical signal becomes the dominant source of picture noise. Noise measurement today happens with a capped camera (read noise), but we need to measure photon noise (a.k.a. shot noise) as well. Key sensor parameter becomes “full well capacity”.

5 micrometer-wide pixels are typical in modern sensors (by comparison, thin compostable garbage bags are 16 micrometers thick). Cameras tend to be 12 to 14 bits. Read noise forms the noise floor, but not a constant SNR through the scale; concentrated at the bottom.

Consider popcorn: as you can hear, kernels pop discretely, though the average rate is constant. So on a photosite in 1/60 sec, you’ll have an average exposure but it’s more a Gaussian distribution (Poisson distribution). On a pixel, the full-well capacity might be 30,000 photons, so with only tens of photons the distribution might look like this:

The more light the better:

The more photons, the less variation (or noise). State of the art is 1000 photons or electrons / square micrometer; so we get around 45dB SNR real-world performance with light coming in, not with the lens capped!
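The arithmetic behind that 45dB can be sketched as follows. For Poisson-distributed arrivals with mean N, the standard deviation is sqrt(N), so the shot-noise-limited SNR is sqrt(N). The function names are mine, and this ignores read noise and everything else downstream of the photosite.

```python
import math

def full_well_electrons(pixel_pitch_um, electrons_per_um2=1000.0):
    """Full-well capacity for a square pixel at a given charge density."""
    return pixel_pitch_um ** 2 * electrons_per_um2

def shot_noise_snr_db(count):
    """Shot-noise-limited SNR in dB: signal N over noise sqrt(N)."""
    return 20.0 * math.log10(math.sqrt(count))

well = full_well_electrons(5.0)   # 5 um pixel at 1000 e-/um^2
print(well, round(shot_noise_snr_db(well), 1))
print(round(shot_noise_snr_db(30.0), 1))  # only tens of photons
```

A 5 micrometer pixel at 1000 electrons per square micrometer holds about 25,000 electrons, which gives roughly 44dB at full well; with only tens of photons the SNR drops to around 15dB.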

Role of Non-Linear Coding of the Television Image – Masayuki Sugawara, NHK Science and Technology Research Laboratories

As in gamma encoding. Should we redefine these codings? New standards and proposals for HDR coding, such as ST2084. Current system:

It is usual that this 709 signal is modified while being monitored, to adjust highlights, for example. Monitors tweaked using brightness/contrast settings, which will differ between studio and consumer settings.

The role of EOTF (electro-optical transfer function). Rec.1886 specifies this:

Gamma compensation not needed any more to compensate for CRT physics, but by happy coincidence it improves noise performance, adjusts quantization steps so quantization errors are less visible, also perceptual linearity (allows use of WFMs for QC and general image examination due to straight-line representation of visually-linear ramps); and an end-to-end gamma of 1.2 is well suited for TV color appearance.
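For reference, the Rec.1886 (ITU-R BT.1886) reference EOTF can be sketched like this. The formula is the one in the recommendation as I understand it; the default white and black luminances (100 and 0.1 nits) are illustrative assumptions, and the function name is mine.

```python
def bt1886_eotf(signal, white=100.0, black=0.1, gamma=2.4):
    """Map a normalized video signal (0..1) to screen luminance in nits.

    L = a * max(V + b, 0) ** gamma, with a and b chosen so that
    V = 0 gives the display's black level and V = 1 gives its white level.
    """
    root_white = white ** (1.0 / gamma)
    root_black = black ** (1.0 / gamma)
    a = (root_white - root_black) ** gamma
    b = root_black / (root_white - root_black)
    return a * max(signal + b, 0.0) ** gamma

for v in (0.0, 0.5, 1.0):
    print(v, round(bt1886_eotf(v), 2))
```

Note that white and black are properties of the particular display, which is the "relative" approach discussed in this talk; an absolute coding fixes the luminance each code value stands for instead.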

We should think carefully how changing the nonlinear coding affects working practice; a move from relative approaches to absolute approaches. We need to discuss this high-level concept and how it applies to HDR.

4K, HDR, and Imagers – Peter Centen, Grass Valley

Sensitivity: 2000 lux, 89.9%, 3200K, f/11 gives you, per pixel, about 3000 photons for green & red, 1100 for blue (about 2/3 that for electrons produced). How many bits are enough? Given this discrete sampling of light, 15,000 electrons, 14 bits needed. More than that, you’ll capture read noise more accurately, but not more of the light. Modern CMOS cameras are about 100 times cleaner compared to CCD cameras from 1987.
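The "14 bits" figure falls out of counting electrons: if the well holds 15,000 of them, you need enough code values to give each electron its own step. A minimal sketch, assuming one code value per electron (the function name is mine):

```python
import math

def bits_needed(full_well_electrons):
    """Smallest bit depth with at least one code value per electron."""
    return math.ceil(math.log2(full_well_electrons))

print(bits_needed(15000))
```

Since 2^13 = 8192 is too few and 2^14 = 16384 is enough, 14 bits covers a 15,000-electron well; finer steps than one electron only digitize noise.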

A CCD has 1 or 2 outputs, pixels processed 7-14 nsec. CMOS processing lasts a full line time per pixel, or 16 msec, 100x better, so CMOS makes HFR easier, lower noise, better sharpness, HDR possible.

Why 4K in 2/3” (for sports, motion)? Customers have B4 100x zooms, want same sensitivity and depth of field, CA corrections.

Resolution = MTF and aliasing. Half-pixel offset doesn’t affect MTF in itself, but it reduces aliasing, so you can enhance MTF without boosting aliasing.

How to suppress aliasing? No brickwall filters optically; Nyquist will always be violated in real-world designs. Half-pixel offsets between images cause alias cancellation.

Solid lines are MTF, dotted are aliasing. That secondary aliasing at 40% is quite visible.
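Here is an idealized one-dimensional sketch of why a half-pixel offset helps. It is my own illustration, not Peter's math: two sample grids offset by half a pixel are simply interleaved, which doubles the sampling density, so detail above the single-grid Nyquist limit no longer folds down to a false frequency. Real cameras apply the offset between color channels, so the cancellation is only partial. All names here are made up.

```python
import math

def sample_cosine(freq_cycles_per_pixel, positions):
    """Sample a cosine of the given spatial frequency at pixel positions."""
    return [math.cos(2.0 * math.pi * freq_cycles_per_pixel * x) for x in positions]

def dominant_frequency(samples, pitch_pixels):
    """Strongest frequency (cycles/pixel) via a brute-force DFT."""
    n = len(samples)
    best_bin, best_mag = 0, -1.0
    for k in range(n // 2 + 1):
        re = sum(s * math.cos(2.0 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2.0 * math.pi * k * i / n) for i, s in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return best_bin / (n * pitch_pixels)

DETAIL = 0.75  # cycles/pixel, above the 0.5 Nyquist limit of a single grid
grid_a = [float(i) for i in range(64)]   # first imager
grid_b = [i + 0.5 for i in range(64)]    # second imager, offset half a pixel
combined = sorted(grid_a + grid_b)       # interleaved, 0.5-pixel pitch

single = dominant_frequency(sample_cosine(DETAIL, grid_a), 1.0)
both = dominant_frequency(sample_cosine(DETAIL, combined), 0.5)
print(single, both)
```

With one grid, the 0.75 cycles/pixel detail shows up as a 0.25 cycles/pixel alias; with the two offset grids combined, it is recovered at its true frequency.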

Wide-gamut broadcast camera: either you match at 709 primaries, and you won’t get out of that triangle, or you match 2020 primaries, and you’ll get a color between 709 and 2020.

ACR: pixel with built-in knee; starts linear, then goes logarithmic. Biggest hurdle is the inflection point between linear/logarithmic parts (for maintaining white point).

HDR in 10 bits: range is huge, 120-160dB. Mapping in 10 bit SDI is about 85dB. But with Dolby PQ curve you can use 10 bit and maintain this full range.
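For the curious, the PQ curve (standardized as SMPTE ST2084) can be sketched in a few lines. The constants are the ST2084 values as I recall them; the function names are mine, and this covers only the normalized 0..1 signal, not the mapping onto SDI code values.

```python
# PQ constants (SMPTE ST2084), expressed as the exact rational values.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0

def pq_encode(nits):
    """Absolute luminance (nits) to normalized PQ signal (0..1)."""
    y = max(nits, 0.0) / PEAK_NITS
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2

def pq_decode(signal):
    """Normalized PQ signal (0..1) back to absolute luminance (nits)."""
    ep = signal ** (1.0 / M2)
    return PEAK_NITS * (max(ep - C1, 0.0) / (C2 - C3 * ep)) ** (1.0 / M1)

for nits in (0.1, 1.0, 100.0, 1000.0, 10000.0):
    print(nits, round(pq_encode(nits), 4))
```

The curve spends about half of the signal range on everything up to 100 nits and the other half on the two decades above that, which is how 10 bits can span such a wide range without visible steps.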

“How much time do you need in post?” No worries: it’s live! Life is so much easier for the shader with PQ coding; image quality is almost insensitive to f-stop, and the shaders on these cameras have almost nothing to do.

Knee and 709 conversions set per situation and often not reversible.

HDR-live: set 100% at code level 512 (100 nits); black level set by trial and error. There is already legacy wiring for 3D in the studio; use one “eye” for the SDR feed and the other “eye” for the HDR feed, and you can handle both streams simultaneously.

Questions for anyone in the previous talks:

Q: For Dr. Sugawara: nice to see happy coincidences explained. As we move into HDR, gamma function can’t efficiently represent the range. HDR may not have happy coincidences; can’t we create intelligent designs for new EOTFs, and shouldn’t rendering at home be referenced to the creative intent, or just left to consumer? Dr. Sugawara: in current operation we still need happy coincidences, when introducing new transfer function, we have to address alternative measure to get them.

Q: with solar flares, why doesn’t satellite imagery crap out? A: NASA has seen it; sat imagers need to compensate for bad pixels.

Do You See What I See? – Josh Pines, Technicolor

A little color science never hurt anyone… Metameric failure. Look at these spectra:

But cameras and eyes and film don’t always see spectra the same way. Spiky spectra are seen differently; AMPAS Solid State Lighting report (download it from the AMPAS website [oscars.org, I think? -AJW]), iPad app to simulate how different lights are rendered. [Josh running through slides too fast to capture -AJW] Different spectral distributions; different observers. Rec.2020 primaries are very spiky.

From Snowflakes to Standards: Maintaining Creative Intent in Evolutionary Times

ACES Version 1.0 – Andy Maltz, AMPAS

Infrastructures evolve: At first, nothing works, pioneers get arrows in back. In the end, it all works. In motion pictures, the container and metadata are described by standards. So are supporting things like reel core dimensions. Tech specs of the images like spectral curves, film development times, etc. supplied by film manufacturers. In digital, pretty good shape on standards, but we lost some standardized infrastructure. Started off with standard flows: film, process digitally, film out or DCP. Now it’s all digital (mostly), but no standard containers & metadata & image specs.

But box office has never been higher. We’re great at “making things work”. Next-gen: HDR, wide gamut, etc. DR is growing. Still no archiving standards.

ACES is the infrastructure replacement for the old film pathway from acquisition through archiving. Perceived need for interchange standards back in 2004 when development began. Color management, future-proof design. Field trials, first SMPTE standards 2012, 2013. Now being used in actual production.

We learned: 16-bit half-float was the right choice. Simpler output transforms are better; makes things easier. Input device transforms really took off: report of pre-grade efficiency of 90% when matching cameras. ACES allows small facilities to “punch above their weight.”

Open architecture: you know it’s successful when institutions grow to support it. Independent training events grew up; textbooks with ACES chapters; wikipedia articles; applications in medical and broadcast.

ACES 1.0 developer kit in Dec 2014. Industry-wide adoption. Finalized core encoding and transform specs. Standardized file formats (SMPTE standards), Color Transformation Language, reference image library.

And more: new working spaces for CC and VFX; improved on-set support; HDR support; look and feel improvements; a versioning system; OpenColorIO for VFX; user experience guidelines.

ACES2065 color space; new AP1 working space a bit bigger than Rec.2020 but less aggressive than 2065.

Improved on-set: ACESproxy, same primaries, ASC-CDL grading space definition. Dim-surround output device transforms, new ODTs for on-set. Common LUT format. Clip-level metadata container ACESclip XML, lightweight. Look Modification Transforms (where to insert global look transform), with common LUT format. HDR support with new ODTs for 1K, 2K, 4K nits; let’s see what the industry learns.

Versioning system: there will be a new ACES version, but not for a while. Enables vendor and end-user contributions.

OpenColorIO geared towards VFX, OpenColorIO.org.

User experience guidelines: common terminology, software guidelines for consistent intuitive presentation of ACES features.

Calibration: just do it.

Everything available from oscars.org/aces (for free!)

The M word: we need to tell people about ACES 1.0: ACES Universe. academy and developers, product partners, facilities and active end users, networks and studios and archivists. ACES logo program. Ask your vendors when they’ll have ACES-logoed products!

ACES will improve with wider adoption.

Why Grading Colorspace Matters – Peter Postma, FilmLight

What colorspace should you grade in? Many people don’t understand the impact of colorspaces and how they affect the image.

History: film negative into the printer, printer lights, film positive. Most choices made in front of the lens. For TV: print into telecine, lift/gamma/gain, display; more creative options. For DI: scanner, lift/gamma/gain, print film emulation for display, then output to film. Just digital: camera, lift/gamma/gain, display, but that’s not great for log images, so then we added LUTs between lift/gamma/gain and the display.

One of the problems is the multiple color spaces on the capture and the display sides; you can’t match between ‘em all with just lift/gamma/gain and saturation. Do you need a new LUT for each new camera and display?

ACES provides a complete color management system. In BaseLight we’ve put in all the IDTs and ODTs we can find. Tag an incoming image with its color space, set the working space, and what the output color space is. Then, just using IDT & ODT won’t always give you a pleasing image, so there’s also a display rendering transform to account for viewing conditions. You wind up with the “color space journey” showing the chain of color transforms:

[Demo of BaseLight, showing lift/gamma/gain transforms vs. an ACES-based approach. Makes grading disparate cameras more consistent. Boosting exposure on Arri LogC footage lifts blacks in LogC, but ACEScc color space allows easier exposure boosting without elevating blacks; ACEScc is log all the way, where LogC and other camera log curves typically have a linear portion in the shadows for noise control. -AJW]

Efficient for multiple inputs & outputs; you focus on creative grading, not fixing technical issues.

Panel: The 101 on Implementing ACES 1.0

Moderator: Carolyn Giardina, The Hollywood Reporter

Bill Baggelaar, Sony Pictures Entertainment

Bobby Maruvada – Digital Imaging Technician and Colorist

Theo van de Sande, ASC, Director of Photography

Carolyn: Sharing experiences using ACES. Theo used ACES for “Deliverance Creek”, what did it enable you to do?

Theo: I got control of the image back. This gave us a more efficient way of showing the image to everyone on set, so the confidence becomes higher. ACES gave me a base look quickly so I could spend my time in creative grading.

Carolyn: Bobby, talk about putting together the ACES workflow.

Bobby: On set I first need to talk to DP, what’s the intent. ACES removes all the background noise of camera and output differences. We’re working on creative stuff, not just matching cameras. In terms of the color pipeline, everyone needs to know. You need to show it to them, and they get on board.

Carolyn: Bill, when do you get involved? How is it used in post?

Bill: From the beginning, we want post involved. ACES provides a common language. Get everyone involved. Here we go, here are the cameras, then another camera comes along; ACES handles it, allows a common language.

Carolyn: Biggest advantage?

Theo: The confidence that the director can see the image as intended, immediately. The final result and the DI work isn’t that far from what we grade on set. It’s about moods, if you can’t show moods, you’re in trouble.

Bobby: Preservation of director’s intent through the entire process. Saves money, too, since you’ve made decisions on set, so final color, DI happens faster and simpler.

Bill: How do we use this stuff later on; how do you get original intent when outputting to TV or streaming or whatever. The confidence that the system allows you to translate the original intent, that you have the original image. It’s efficient; that common language.

Carolyn: Misconceptions?

Bobby: ACES is not “a look”, it’s a container or workflow. The workflow allows us to get there quicker. ACES doesn’t rope you into a corner or constrain you.

Bill: in early days there were some constraints, but those are gone.

Theo: This film was done in ACES 0.2.2, wasn’t as stable. It’s not complicated; seems that way because it’s enormous, but working with it is very simple.

Bill: The technical talk was because it was early. Now it’s more about the creative language.

Bobby: From a colorist’s perspective it’s more fun since you get right into creative work.

Carolyn: Wish list? Stuff you need?

Bill: Nothing so far; 1.0 is pretty complete. You’ll see the toolsets become more robust now that 1.0 is out.

Theo: 1.0 gives us much more control over DI. Very important that you bring more color correction to the set; a good DIT will help the product, so in DI you’re dealing with DI issues, not color correction.

Bobby: In 2235 I want people to see what we see today. I’m really happy with ACES 1.0. We do need more education about ACES: DITs, colorists, director & producers.

Bill: DPs and DIT are critical; they need education.

Theo: there’s a learning curve; on set the original system reacted so strongly that it took time to get used to it. 1.0 is less aggressive.

Bobby: My dream is the colorist is on set…

Theo: … even in previz. ACES is fantastic for that.

Carolyn: Archiving?

Bill: At Sony Pictures we’ve pushed ACES partly for archiving, what will we have in the vault, how do we repurpose it? ACES gives us that container and metadata; we have high confidence we can get back to original intent. We have a lot of flexibility to do things we didn’t know we could do.

Questions:

Q: You can tweak so much it starts to look like CGI. A: But it’s not.

Q: Archiving: what do you need to archive from ACES? A: The final EXR files; VFX pulls from the VFX facility; pulls from the original conform; on the TV side an uncolored version that’s conformed, and a colored version. And all the metadata. Easy for us to discover which RRT was used as there are only 4 or 5 we use.

Q: Perceptions from those who don’t use it, who bake in a look, will ACES pull apart the look? Are there guidelines for IDTs going into under/overexposure issues, for customized IDTs? A: If you’re baking things in at the wrong point all bets are off. If you lock into a look when you shouldn’t be… that’s not a fault of the system. IDTs: published procedure for them.

Drones for Dummies – Tom Hallman, Pictorvision

Formerly WESCAM, name change to Pictorvision. We have an FAA special operating permit for commercial drones.

What is a drone? Predators, multirotors, nanobots? The rules for a 3000 lb aircraft and a 3 ounce aircraft need to be different.

Under 55 lbs, under 400 ft, in line of sight for movie applications. Must be electric (to stay under 55 lbs), flight times 7-15 minutes. RED Epic, Panasonic GH4 are most common aerial cameras, but we can fly anything we want. Short lenses, mostly. FIZ drives are coming; it’s a power-to-weight ratio issue, trade off flight time for weight. HD downlinks. Gimbals are normally the Movi and the Zenmuse from DJI.

Legal restrictions: manned A/C are much easier: 3000 lbs of jet fuel just overhead? No problem! Unmanned: Private pilot’s license, visual observer, under 50 knots, no night flying, no ops from a moving vehicle, 500 feet away from civilians (on set, not an issue, but in downtown LA? Problematic!), 500 feet from clouds.
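As a memory aid, the numeric limits Tom listed can be written down as a simple pre-flight check. This is purely my own illustrative sketch of the notes above, not an FAA tool and not legal guidance; the class, field names, and messages are made up, and it leaves out the pilot's license, visual observer, and cloud-clearance items.

```python
from dataclasses import dataclass

@dataclass
class FlightPlan:
    weight_lb: float
    altitude_ft: float
    speed_kt: float
    night: bool
    from_moving_vehicle: bool
    in_line_of_sight: bool
    civilian_distance_ft: float

def violations(plan):
    """Return a message for every limit from the talk that the plan breaks."""
    checks = [
        (plan.weight_lb < 55, "aircraft must weigh under 55 lbs"),
        (plan.altitude_ft < 400, "must stay under 400 ft"),
        (plan.speed_kt < 50, "must stay under 50 knots"),
        (not plan.night, "no night flying"),
        (not plan.from_moving_vehicle, "no ops from a moving vehicle"),
        (plan.in_line_of_sight, "must stay in line of sight"),
        (plan.civilian_distance_ft >= 500, "must stay 500 ft from civilians"),
    ]
    return [message for ok, message in checks if not ok]

dusk_shot = FlightPlan(40, 450, 30, True, False, True, 800)
print(violations(dusk_shot))
```

The example plan trips two checks: it is too high and it flies at night.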

What are they good for? Low-altitude shots. Difficult locations, small spaces, close range (but not too close), inside (sometimes), shots between those from a crane and those from a full-sized helicopter.

[Nice reel of GH4 aerial footage]

Mostly short primes, but zooms are coming.

Not good for: high altitude, really long runs, high speed, inclement weather, large cameras/lenses, over crowds or developed areas (for now), when recording sound.

Are drones cheaper? Often, not always. 4 man crews, serious FAA maintenance records (just like manned helis), extra steps needed for flight permissions, slower to reposition via ground than flying.

Steps: use an exempt production company, all the normal film permits, all the normal permissions, all the normal safety personnel. UAV company: have a section 333 exemption, get Certificate of Authorization for the specific location (may take months, but good for 2 years), 3 days prior file flight plan, 48-72 hours before file a NOTAM (notice to airmen), get agreements with local airports, coordinate with any military operations in area.

Future opportunities: commercial-grade gear, smaller dedicated cameras and lighter optics, better batteries, complete aerial systems, more efficient designs, safer designs.

Last week we got two new drones approved: PV-HL1, an 8-arm, 8-motor heli, flies an Epic; PV-HL2, the one you see here with 4 arms, 8 rotors:

Q: I see you have a GPS antenna; can you record a path and replay it? A: Not yet: GPS for safety, so it’ll return to home if signal is lost. Not precise enough for MoCo, not quite yet.

Q: Autonomous flight? A: FAA recommended we strike any mention of “autonomous” from our application. Needs to be controlled by a human at all times.

Q: We shot going off over a cliff, so probably violates the 400 ft rule? A: Depends on how you define above ground…

Q: Frequencies? A: We’re using non-licensed band equipment right now. Waiting for something small and light enough.

Q: Safety provisions stricter than for model A/C? A: Yes. Remember, this is an exemption, a test. FAA can shut us all down at any time. We file reports every month to tell ‘em what we’re doing.

Q: DJI’s drones are programmed to land or avoid no-fly zones; that’s autonomous, right? A: It will prevent you from flying into such a zone, but it’s still a human-controlled flight.

Q: DJI is a non-commercial application; make that distinction. That’s part of the difference between a hobbyist and this usage.

DPP and IMF panel

Moderator: Debra Kaufman, industry analyst

Sieg Heep, Modern VideoFilm

John Hurst, CineCert

Bruce Devlin, Dalet

Stan Moote, IABM

Debra: Poll: who has had problems with received files? (everyone) A common problem (yes). Talk about DPP and IMF, attempts to enable interoperability.

Bruce: DPP, Digital Production Partnership. BBC, BSkyB, Channel 4, etc. suffering a plague of formats. No consistent interchange format. Over lunch (there might have been alcohol involved), someone said, “this is nuts! Why can’t we have a common delivery format, like a tape format was?” Due to the plague of formats, there was a mistrust of file-based formats. The DPP file format standard was only part of it, educating the community that a file-based workflow was trustworthy and workable was just as big.

Stan: Everyone got involved with MXF and remembers the pain of it. Over the years people got confused about MXF (which MXF variant?) MXF is a very open wrapper. DPP defines what UK b’casters want, and defines the metadata fields specifically.

Sieg: DPP impacts our work; we made some DPP test files, it’s just another deliverable. We have ProRes, XDCAM, transport streams, even a lot of tapes still. But it helps us understand what we’re making. DPP helps producers define what they want from us, a boilerplate for deliverables helps define it.

Debra: Avid has DPP in Media Composer, will that affect things?

Sieg: It depends.

Stan: from a production house viewpoint, it’s just another chargeable deliverable, but at least it’s very, very consistent.

John: MXF is as helpful as XML: too wide to be specific. Need app-specific implementation and constraint. DPP is one of the many steps towards how MXF can solve these problems.

Sieg: IMF and DPP comparison. IMF oriented to studios, DPP towards b’casters. Both are MXF wrappers. DPP uses AMWA AS-11, similar to AMWA AS-03.

IMF uses JPEG 2000 or MPEG-4. DPP uses AVC-Intra 100Mb/sec, and MPEG-2 for SD. DPP requires 50i while IMF has flexible timebases.

DPP has 4 or 16 audio channels, and uses separate closed caption files as specified by b’caster.

DPP program material may be segments with specified head-build and run-out, countdown clocks, IN and OUT metadata, audio loudness and photo-sensitive epilepsy PSE data (required by UK).

IMF program material uses metadata markers for commercial breaks etc., cuts-only EDLs, add more languages when they become available.

(All sorts of challenges to OFCOM on the PSE rule; hard to QC since different decoders may cause different PSE triggering levels.)

John: When trying to understand MXF applications, good to know the context. DPP is a single file (except for timed text) for playout delivery. A channel servicing workflow needs masters with separate files to allow shuffling and reassembling of components. Tradeoffs: cost, flexibility, interoperability: choose any two!

Stan: Just starting to be used. The nice thing about DPP is there’s a metadata app to quickly get things into a DPP delivery format.

Bruce: Key thing is that encoders & decoders had ability to be certified and approved. In 2 years after first test there were 30 companies interoperating at the BBC. We then tried to define a conformance procedure; difficult. We thought we’d be testing just the MXF levels, but we wound up testing AVC libraries for interoperability; same for audio coders/decoders. Example: run a DPP player, things are in sync. If you grab the playhead and scrub back and forth, will stuff still be in sync?

Stan: Everything is not 100% checked; can’t ever be. It’s a practical verification, but you might still have a few problems.

Bruce: we won’t fail you if you misspell “programme” but we will if you lose lock or fail to play.

John: We’re working the same issues in IMF. Regular meetings and plugfests. Socializing the idea of componentized workflows. Interoperability is coming along; we still have stuff to sort out. Benefits: if you’re in a channel servicing workflow and want to be efficient and preserve intent, IMF doesn’t require media replication.

Sieg: JTFFMI, the Joint Task Force on File Formats and Media Interoperability. 132 user stories, 27 current practices, requests for metadata. EBU Core metadata spec, based on Dublin Core spec. Some consideration for a DPP for North America.

Stan: Also discussion of a DPP for Europe.

Debra: Who is going to pay for all this?

Stan: It’s not what you’re paying, it’s what you’re saving.

Bruce: It seems odd for a vendor to support DPP because that’s fewer formats to sell, but it’s fewer to support, too.

John: The film festival standoff: DCPs don’t always work across projectors! Helps reduce the incompatibility.

Bruce: One of the IMF advantages is it’s based on DCP, where DPP is invented from scratch.

Sieg: Avoiding the unexpected: even if you do a good job, things come up. A consistent way is a good thing.

John: Avoid the desire to add features; less is much much more!

Sieg: Choose the right format for what you need to do.

Debra: Real-world examples?

Bruce: BT Sport is based on AVC-Intra and very DPP-like, so it’s easy to rewrap as DPP. “Coronation Street”, oldest soap opera, each episode goes to 100s of destinations, rolled DPP-like formats all the way back to the cameras so the DPP delivery becomes easier.

John: Sony Pictures using IMF, Netflix interested in IMF as a deliverable. Componentized workflows at Fox and Warner. Uptake at the top end. Can save costs for anybody with multi-format delivery.

Debra: How many are planning to use DPP or IMF this year? (not many hands go up)

Q: Codec same as P2? A: based on it.

Q: With HDR, wide gamut, how will we master? A: It can all be accommodated in the same basic framework. On the DPP side, the metadata fields hold that info.

Q: The importance of compliance testing is essential.

Q: One aspect with DPP is same AVC codec. J2K is a heavy codec in IMF. A: You can use a different codec in production, no one has requested a lighter-weight codec for delivery.

Q: The success of DPP seems to be that b’casters got together in a bar agreeing to make it better. I’m willing to buy those drinks here tonight!

Bruce: It was economically interesting because enough people were interested.

Not Your Mama’s Post

Moderator: Kari Grubin, NSS Labs

Loren Nielsen, Entertainment Technology Consultants

Patricia Keighley, IMAX

Christy King, Levels Beyond

Loren: film distribution formats:

But there are so many different formats for digital delivery. 135,000 digital projection systems worldwide, not so much film. DI delivery with huge control, but a proliferation of formats. In this world of differentiation, we have the challenge that mastering for an idealized delivery isn’t viable any more, because any master we make will be shown on thousands of different displays of different gamuts, dynamic ranges, frame rates, sizes, primaries, etc.

Patricia: Over a billion people have seen an IMAX film. Not one thing: resolution, digital remastering, high light levels, powerful sound, custom theaters. That’s what we’re all going for. Started 1967, 1970 premiere, 1971 first permanent theater, 1972 IMAX Post, 1997 brought everything together. 50 person team, 400 films over 4 decades. We specialize in helping filmmakers make films for IMAX. Started with 2D 20-minute nature docs. Evergreen film moving from theater to theater. Now we have 2D and 3D live-action; $100,000 for a feature-length print. We consume content so fast the show might only be in theater a week. DCP is only $200. 1983 dome theater, 1986 live-action 3D film.

Late 1990s shift from film to digital, lots of learning. In 2000, “Fantasia 2000” repurposed for IMAX, film recorders for this. “Apollo 13” remastered with DMR, “Matrix: Revolutions”. IMAX Digital, film post adapted for digital. IMAX film print can be 94 reels. 2008, “The Dark Knight”, 27 minutes in IMAX. “Avatar” followed. $243 million in IMAX revenues. 2010 film in China; international expansion. Sound is carried separately so you don’t have separate IMAX prints for different languages (not an issue with DCPs). The magic of digital is we can send a hard drive to Kuwait, get censor list, we can simply email the edit list and the DCP will play as the censored version.

Big business with KDMs, especially for trailers. 2012, R&D into next-gen lasers. 72 minutes of “Inception” is IMAX. 2013 first laser agreement with Smithsonian; IMAX private theaters. 2014 $50M original film fund for docs; electronic DCP distribution. 2015 large laser deployment. Maintenance and monitoring is key; we remotely monitor lamp brightness and sound and warn operators of problems. We invite comments; we always respond and take comments seriously. “Game of Thrones” experiment; IMAX on a cruise ship. We’re always trying new stuff for IMAX audiences.

Christy: How we help content owners manage distribution. The data associated with material, and the outflow, what info can come back? The big light bulb in 1994 was when military customers needed training; I figured out how to use Avid, bought a web server, put training videos online, teachers on bases loved it. So much easier than trying to ship tapes! Now at Levels Beyond in asset management. Instead of getting a phone call back from a military base, I can now see play counts, did the sound work, did people use closed-captions, when did people buy things during the program. How do we know when to stop delivering some of these formats? The only way to know is to measure using audience-driven data.

Kari: How much do you think what you’re doing is impacted by creatives, or consumers, or device manufacturers?

Loren: much of the tech is driven by technologists, but it takes creative pull to get it into the marketplace. Without powerful content it won’t reach an audience. But audience gets the final vote. So we’ve seen some studio responses, surveys, but we don’t have votes yet. Some things will just happen; doesn’t make any sense to make 2K panels when 4K panels are just as cheap.

Patricia: We decided from a tech perspective to go to digital. We took all our procedures for film and applied them to digital. The audience is voting with their ticket buying. It’s a business; if you don’t have the content to pull those buyers…

Loren: Average ticket for premium content is $17-18. Content perceived as premium can command more money.

Christy: Sports tickets are $90. But you expect WiFi, a Jumbotron, cushy seats, an in-box video feed. We still don’t have a lot of tech to measure the impact of these things. The sports world throws another layer of stuff into the experience. Can we do that in the theater?

Patricia: China is soon going to be the biggest market for us.

Christy: And the more we expanded overseas the more our stuff was stolen!

Kari: Will we see a return of the 20-minute model? YouTube stars in the theater? Nolan reinvigorated film!

Loren: It delayed the inevitable; how many more years?

Patricia: Film for a couple more years at a time, but we don’t know about the future. Oils rather than watercolors. It’s not easy to make films these days, extra work, but you can’t do it for every program.

Christy: Archives: it’s rare you pull 1990s material out and use as-is. People remixing and modifying old content for new uses. Archives as asset management, what else can you do with this material?

Patricia: 11.5 times more expensive to archive digital than film. We’ve brought out VHS tapes of an IMAX show. We can go back to IMAX with all the resolution to pull anything new out again.

Loren: Mastering: concept of mastering is to keep original pixels as long as possible, as untouched as possible. Find a way for just-in-time delivery, derive the proper distribution format. We don’t have a way to deliver the look of the image to all these different devices consistently.

Kari: As we have these new formats and color spaces, how are you helping people make that transition?

Loren: Seeing is believing, doing is even more powerful. It’s not hard. They need to take their own content and play with it; HDR has such a wide palette. Fear it’ll look like video, like Telemundo. Do they want big ol’ grain, bleach-bypass? Yes, you can recreate that look. We can re-create super-soft looks. With HFR, the soft-shutter work we’ve seen,…

Q: SMPTE 2086 defines mastering environment. Looking at scene-dependent metadata to convey the artistic intent to the consumer.

Q: 3D is different with large screens. What’s your perception of shallow focus for 2D on a big IMAX screen vs a small screen?

Patricia: We take our cues from the filmmakers. They’re often surprised seeing it on the big screen, but it’s about becoming familiar with the environment. If they know they’re going for IMAX they can tune their design for it.

Panel: Cloud Demystified: Understanding the Coming Transformation from File- to Network-Based Workflows

Moderator: Erik Weaver, Entertainment Technology Center, USC

Guillaume Aubuchon, DigitalFilm Tree

Christine Thomas, Dolby Laboratories

Erik: I run Project Cloud. We’ve gone from film to tape to file, now to network. It’s like mainframe timesharing on crack. Cloud has become such a marketing term, but there’s a lot behind it: almost everything you do. NIST definition; Frank’s definition “somebody else’s computer”. Private, hybrid, and public clouds. Cloud defines: IaaS, PaaS, SaaS.

4 principles: people are bad, automate everything; switch from CapEx to OpEx; federation (who has access to what); data gravity (the files you create are so big, you bring the assets to the data, not vice versa).

Christine: my group was a few people who knew transport was a problem. Vendors, technologists. How do we bring tech to the media industry, it’s a heavy lift. We’re burdened by legacy workflows.

But the tech exists, already being used. Internet is shockingly physical; fiber everywhere. In the metro area the cloud providers co-located. Right now, to connect to each other, takes 100s of people. 60K emails sent on Hobbit 1, only regarding file transfer. How do we get around this?

Today we use cross-connects at data centers. Post service providers have 30-40 of these cross-connects. We want to go to one cross-connect. Too often we’re limited by corporate decisions about service providers, so we pull a tape, send it across town. If we have a common high-speed, low-latency (under 1 msec) connection between companies… Doesn’t compromise security because it’s just a circuit. Very excited about SohoNet’s announcement this week. But what if my company didn’t choose SohoNet? If we’re all connected to the media exchange it’s not a problem.

Guillaume: Universal cloud project on a USC student film. Our plan is camera to cloud. DigitalFilm Tree started on our OpenStack platform in 2012.

The pillars of cloud: accessibility, instantaneous (expected if you’re under 40; if over 40, this is frightening), logged, encrypted. The centralization of metadata: technical metadata, audio, video, subjective metadata. Multi-vendor support: On student film “Luna” we had SyncOnSet, Scenechronize, Pix, and Critique all on OpenStack. Working on an open model with ETC: 3rd party API, open database, open API. Workflow: shoot, ingest, checksum verification and proxy generation, metadata association, access granted to material, data and efficiency analysis; a.k.a. shoot / dailies / metadata / access / analysis.

Universal ID: a SHA-256 hash that travels through the process, so you have continuity, from Pomfort Silverstack Onset, through AAF and EDLs, through VFX, to archiving.
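As a rough illustration of the idea (not the project's actual code), a content-derived ID can be computed with Python's standard `hashlib`. The function names `universal_id` and `verify_copy` and the 1 MiB chunk size are assumptions made for this sketch; the talk only said that a SHA-256 hash follows the material through the pipeline.

```python
import hashlib
from pathlib import Path


def universal_id(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file's contents.

    The file is read in chunks so that large camera originals
    do not have to fit in memory. (Hypothetical helper name.)
    """
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(original: Path, copy: Path) -> bool:
    """Checksum verification: a copy is good if both digests match."""
    return universal_id(original) == universal_id(copy)
```

Because the ID depends only on the bytes, the same file gets the same ID at ingest, in editorial, and in the archive, regardless of filename or vendor tool. Any re-encode (a proxy, for instance) yields a different hash, so a real system would presumably need a separate table linking derived files back to the original's ID.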

Search engine analytics: SEO, production metadata, analysis results. You can measure how much material was shot, how much was usable, how much makes it into the cut.

Bandwidth still a major concern. There are co-los all over the world, just takes a bit of work to find ‘em. Many software vendors aren’t ready to contribute metadata. Personnel: takes a bit of training. Processing power; getting compute power to the footage. Another issue: metadata duplication. There’s too much replication of common data, like vendor-specific versions.

Q: Connectivity readiness, I don’t want to write new APIs… who do we have to take to lunch to get the API standardized? A: the standard should be “you just need to have an API.” Doesn’t have to be standardized. It’s irrelevant, largely. There’s tech to take unstructured data and make it structured (translation layers); our APIs don’t have to match. You can just give me a data dump and I can make sense of it: full-text search!

Q: Post can involve a lot of different apps. Can you convince those apps to run under virtualization? A: I run a lot under virtualization, in violation of the EULA. It’s a licensing and legal issue; the tech exists. Avid Interplay is great, but it’s not scalable. Virtualization in OpenStack is a great way to run Interplay. Adobe is a little more progressive.

Q: Standards are for the weak and the slow [laughter], talk to anyone who uses MXF, structural vs descriptive metadata. A: We separate it by “metadata created by computer” and “metadata created by humans”. We trust the former, need to validate the latter.
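One way to picture that split is to tag every metadata field with its origin and route only the human-entered ones to a review queue. This is a minimal sketch under my own assumptions: the `MetadataField` class, the `"machine"`/`"human"` labels, and the sample keys are all hypothetical, not anything shown in the panel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetadataField:
    key: str
    value: str
    source: str  # hypothetical labels: "machine" or "human"


def needs_validation(field: MetadataField) -> bool:
    """Trust machine-generated values; anything else gets reviewed."""
    return field.source != "machine"


def partition(fields):
    """Split fields into (trusted, to_review) lists, preserving order."""
    trusted = [f for f in fields if not needs_validation(f)]
    to_review = [f for f in fields if needs_validation(f)]
    return trusted, to_review


# Example: technical metadata from the camera vs. a note typed on set.
fields = [
    MetadataField("frame_rate", "23.976", "machine"),
    MetadataField("scene", "12A", "human"),
    MetadataField("codec", "ProRes 4444", "machine"),
]
trusted, to_review = partition(fields)
```

Treating unknown sources as untrusted (anything that is not explicitly `"machine"`) is a deliberate choice in this sketch, so a missing or misspelled label errs toward review.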

Q: We had content without a UMID, none for PDFs, iPhone footage. The way to deal with it is just to fingerprint it all.

Q: How long before you can emulate quantum computing as in the speed of simultaneous rendering? A: We went to a virtual platform so our hardware was always at 95% capacity. In a virtual world we don’t scale facility in individual services but on aggregate work. All CPUs and GPUs can be tasked with whatever task is at hand.

Q: There still must be a prediction model for monetization? A: I’ll be honest and say that in my agile business, I use gut instinct! As we get further on, we’ll develop those algorithms.

Q: What about Kim Jong Un? A: There are companies better than us at security. We rely on Rackspace for security; they’re getting hit every day. Don’t go it alone, reach out to those with the experience.

Q: What did you learn? A: Don’t work with students! [laughter] We have to have partnerships in place with telcos and colos and such. We learned how to train creatives so they realize how the tech supports their vision.

Erik: The whole purpose was to see what worked and what doesn’t. We’ll do another shoot this summer, see what falls down. It’s a hybrid cloud, with OpenStack in house and also stuff at Rackspace. We’ll be demoing federated access with multiple cloud providers.

Q: Why no students? A: We trained their crew in this workflow, then 80% didn’t participate in the project. That’s a student dynamic that made it more challenging than it needed to be. Also never try networking a set. Easier to use old-style on-set working procedures and then enter metadata afterwards.

Erik: What are those issues: security, metadata, long-term storage, frameworks. This project is open to participation. We’re running a 35-speaker series across the lifecycle at YouTube studios, recorded for the ETC YouTube channel in advance of NAB.

IP or Not IP: What Is the Question? – Eric Pohl, National TeleConsultants

Context: we all know that there’s a lot of diversity: news, sports, entertainment; very different. Thus different perspectives, technical solutions. My context regards large TV facilities: production, acquisition, playback, networks, editing, graphics. Large common infrastructure, large investment; keep that in mind.

Why such a silly title? In the long term we’ll all be using IP (Internet protocol). It’s not new to us, but at high bitrates for live productions, it’s newer. We’re at an inflection point; all the major manufacturers are working on next-gen routing products. There will be a Fox truck that’ll be IP-based, not router-based.

Other interpretation: what’s the problem that IP solves? What are we expecting from this migration? Lower cost; IP = commodity = cheap. Better cabling options. Bidirectionality and multi-function support. UHD. Multi-resolution capable. Simplicity; zero configuration. Does IP provide this, or only enable it?

Can and should this transition be about more? A reason to embrace new developments in IP space? We must not build the same thing with merely a new physical layer. How do we do this?

What do we want from infrastructure? We need to solve current problems, also problems for the next 5-10 years. We can’t afford more forklift upgrades. An opportunity to re-examine infrastructure; see what other industries are doing for clues. We’ll get different solutions, but the motivations are similar.

We must define a control architecture independent of transport layers.

Future infrastructure needs: multiple formats, signal deliveries with different characteristics and costs, require “controlled agility”. Entire layers of routing are now used for control-room monitor walls, rivaling traditional routing cores. The complexity of this is going up.

The importance of software-defined networking is that it can’t be done at command-line interfaces. A separation of the data plane from the control plane; OpenFlow. Their challenges aren’t very different from our challenges, perhaps our solution should be similar. I’m not saying adopt SDN, but apply our expertise to the abstract nature of our problems, so we can manage them.

New design concepts: question existing design patterns, no normalization to a single format, different classes of signal access for different needs (e.g., monitoring signal vs. monetizable signals), explore centralized vs. decentralized models; this all mandates a new level of “controlled agility”.

Conceptualize the multi-format infrastructure. The core should provide sources, destinations, and transport networks with capability (constraints). Provision for an activity using the API for a facility. Decide what we want for our “industry specific control plane”. Enable “programmatic control of the infrastructure.”
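To make "provision for an activity using the API" a little more concrete, here is a toy sketch of a control-plane call that picks a transport by capability and cost without the caller knowing what the transport physically is. Everything here is hypothetical: the `Transport` and `Facility` classes, the `provision` method, and the bitrate and cost figures are illustrations of the concept, not any vendor's or standard's API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Transport:
    name: str
    max_mbps: int  # capability (constraint) the transport advertises
    cost: int      # relative cost; lower is cheaper


@dataclass
class Facility:
    transports: list = field(default_factory=list)

    def provision(self, source: str, destination: str,
                  required_mbps: int) -> Optional[dict]:
        """Pick the cheapest transport that satisfies the request.

        Returns a description of the route, or None if no transport
        in the facility can carry the requested bitrate.
        """
        candidates = [t for t in self.transports
                      if t.max_mbps >= required_mbps]
        if not candidates:
            return None
        chosen = min(candidates, key=lambda t: t.cost)
        return {"source": source, "destination": destination,
                "transport": chosen.name}


# A monitoring-grade path and a production-grade path, per the
# "different classes of signal access" idea in the talk.
facility = Facility([
    Transport("compressed-monitoring", max_mbps=100, cost=1),
    Transport("uncompressed-production", max_mbps=12000, cost=5),
])
monitor_route = facility.provision("cam1", "multiviewer", 50)
program_route = facility.provision("cam1", "program-out", 3000)
```

The point of the sketch is the separation: the caller states a need (endpoints and bitrate), and the control layer decides which data-plane path serves it, so the underlying transports can change without touching the callers.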

All this requires intelligent end devices, either new ones or adapters/encapsulators with embedded IP stacks. Close to a forklift upgrade? Only an acceptable strategy if we’re working to a better vision. But now, even the simplest devices have systems-on-chip with IP stacks and an embedded Linux kernel.

Example of an edit system. Either the edit system or the hardware talks to the infrastructure to get the material the human being needs. And that’s just the beginning. If we’ve abstracted it right, we can hide the complexity from the users.

So in the long term we can create a framework that allows a new class of equipment that can talk directly to our infrastructure.

Conclusions: Use the transition to IP to work towards the goal of “abstracted multi-format infrastructure”. Be active in standards organizations with a clear vision of what we’re working towards. As we plan and design for the future and work with vendors… we measure our steps in terms of moving towards this vision. Turn impending chaos into “controlled agility”.

Q: A few more motivations: cloud-friendly, SDN-friendly. Control plane vs data plane, indigenous way of thinking in the IT world. So many IETF standards for the control plane, we should leverage it. Not much invention required, a lot of assembly required.

Q: Perhaps most important thing is getting vendors to expose APIs. As we move to IP, class structures, stuff we’ve dealt with in automation for years. Native exposure of APIs would be a terrific step.

What Just Happened? – A Review of the Day by Jerry Pierce & Leon Silverman

What did we learn? Seven-letter acronym: JTFMMI (OK, six letters).

Great panel on ACES. Now they have a logo, we’ll get T-shirts, and Josh’s will be calibrated. What did we learn about ACES? ACES is not a 4-letter word. It’s ready, try it!

Nice examples of ACES workflow. Only 2 days to time 90 minutes of content!

How many formats did we deal with in film? Not many. How many think that film distro will end soon? (Half think it’ll be 2-3 years.)

If you Google something, you can tell a producer that all his problems are caused by gamma rays.

Standards are for the weak and the slow! They take too long.

If you want a standard, you have to work on it yourself. For the engineers, we could use help (in SMPTE). If you say someone else will solve it, you get the standards you deserve!

Business will dictate the adoption of standards. We have a wider toolset (OpenStack, etc.) available. Use all this stuff, you can just get it done.

How fast is fast? SIGs and ad-hoc standards; not everyone respects the standards in our industry.

The ACES model worked well: get the group together, develop and test the standards, then present it. SMPTE is good at publishing unambiguous standards. There’s a difference between something vendors develop to make some gear interoperate for a few months, and things like MXF and ACES that will last a long time.

How we communicate to displays, what should pictures look like? There are no standards at all for this.

What’s HDR? No good definition. “I’m nuts for nits.” Like “what’s HD”: it’s a moving target.

A new definition of propellerhead: someone with a drone. How many have drones? [Tens of people.]

Sieg lives in a world with lots of tape. How many here deliver on tape? [A few deliver on tape, none deliver more tape than files.] How many archive to tape? Disk? Film? [Various hands go up.]

There’s the CALM act. Will there be an eye-CALM act to keep HDR ads from using too many nits?

It’s hard to believe that we can all see what Josh Pines sees without chemical or other aids!

Disclosure: I’m attending the Tech Retreat on a press pass, which gets me free entrance into the event. I’m paying for my own travel, local transport, hotel, and meals (other than those on-site as part of the event). There is no material relationship between me and the Hollywood Post Alliance or any of the companies mentioned, and no one has provided me with payments, bribes, blandishments, or other considerations for a favorable mention… aside from the press pass, that is.