Continuing my notes from the Future of 3D panels at FantasticFest: Sean Fairburn (familiar if you read the Cinematographer’s Mailing List) gave an excellent talk on the basics of, and advice on, shooting stereoscopic (aka 3D) movies. My full, raw, unedited, as-I-typed’em notes are below.

EDIT: here are pics of his slides on a Flickr set

Sean Fairburn is next up to speak

3D Stereolab is up after that, on capturing guerrilla 3D content

Sean Fairburn: how to shoot it. Just take two cameras? Not quite that easy!

At least get the terminology right

“3D” is a weird, nebulous term; it’s like saying something is digital. What does that actually mean?

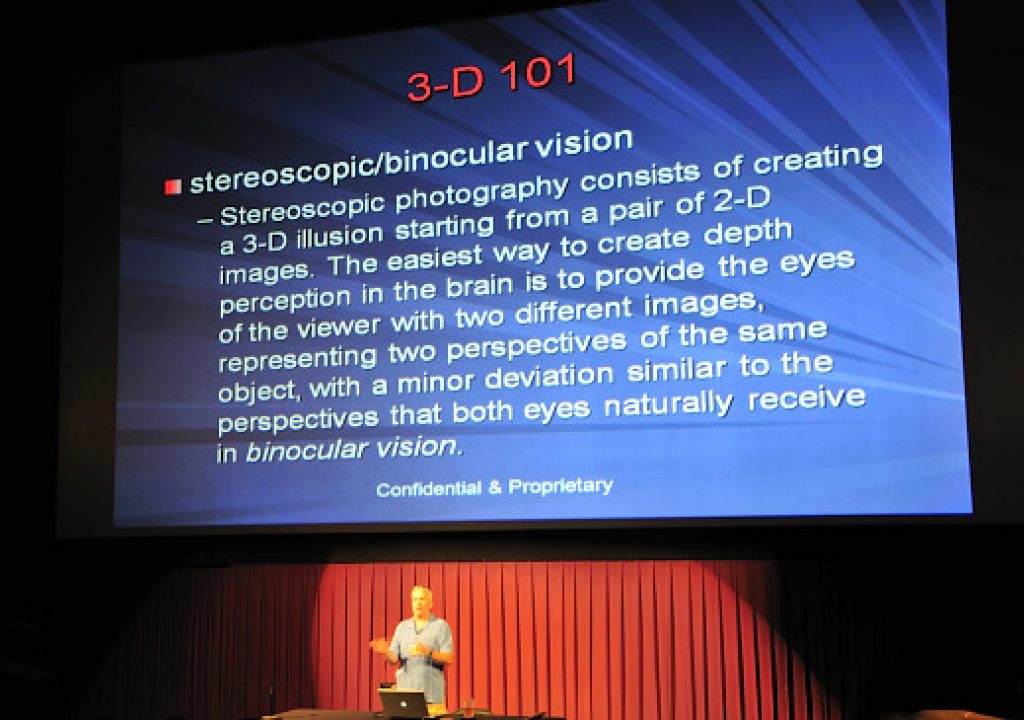

When discussing 3D, you need some foundational groundwork for what’s going on.

There are a number of different philosophies; the main thing is to figure out what works for you. Post-production is a large part of all this. How it ends up being screened is not that big a deal.

One of the main things to understand: the concepts of interocular and convergence.

Interocular (interaxial is the proper term; interocular is what’s commonly used): for people, the distance between the two eyeballs; for a rig, the distance between the centers of the two lenses.

Stereoscope.com has a gallery going back to the ’20s of cameras with different interaxial distances.

The average is 65-70 mm (roughly 2 1/2 to 2 3/4 inches), but it varies between people; there’s a bell curve.

Those cameras have varying interocular distances.

Pick 10 friends, measure them, and average the result.
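If you want to try the “average ten friends” exercise, the arithmetic is just a mean plus a millimeter-to-inch conversion. A minimal Python sketch; the measurements are made-up illustrative values, not data from the talk, and the function names are hypothetical.

```python
from statistics import mean, stdev

MM_PER_INCH = 25.4


def average_interocular_mm(measurements_mm):
    """Mean interocular distance, in millimeters, of a group of people."""
    if not measurements_mm:
        raise ValueError("need at least one measurement")
    return mean(measurements_mm)


def mm_to_inches(mm):
    """Convert millimeters to inches."""
    return mm / MM_PER_INCH


# Hypothetical measurements (mm) for ten friends; substitute your own.
friends_mm = [62, 64, 65, 66, 66, 67, 68, 69, 70, 73]

avg = average_interocular_mm(friends_mm)
print(f"average {avg:.1f} mm = {mm_to_inches(avg):.2f} in, "
      f"spread (std dev) {stdev(friends_mm):.1f} mm")
```

The 65-70 mm range quoted in the talk works out to about 2.56-2.76 inches, which lines up with the 2 1/2 to 2 3/4 inch figures.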

If you’re working with little cameras you can do it, but with big cameras the lenses are too big to get them close enough together.

We need to get 4708; that’s pushing things too close together.

A smaller-than-normal interocular makes the scale more appropriate for folks watching on a larger screen.

The interocular used for a small screen is different from the one used for a big screen.

There isn’t a comfortable single setting that works for both small and big screens.

CONVERGENCE: the point where the projected centers (optical axes) of the two lenses intersect. On the depth scale running from the camera to the screen, the convergence point is the screen: anything in front of the point of convergence appears in front of the screen, and anything beyond it appears behind the screen.
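The geometry behind that definition is simple trigonometry. A sketch assuming a symmetric, toed-in (converged) rig with the subject on the center line; the function names and the 1 mm tolerance are illustrative choices, not from any rig’s software.

```python
import math


def toe_in_degrees(interaxial_mm, convergence_mm):
    """Inward rotation of EACH camera (symmetric toe-in) so the two optical
    axes cross on the center line at convergence_mm."""
    if convergence_mm <= 0:
        raise ValueError("convergence distance must be positive")
    return math.degrees(math.atan((interaxial_mm / 2.0) / convergence_mm))


def depth_zone(object_mm, convergence_mm, tolerance_mm=1.0):
    """Where an object on the center line appears relative to the screen."""
    if abs(object_mm - convergence_mm) <= tolerance_mm:
        return "on screen"
    if object_mm < convergence_mm:
        return "in front of screen"
    return "behind screen"


# Example: 65 mm interaxial converged at 3 m.
print(f"toe-in per camera: {toe_in_degrees(65, 3000):.3f} deg")
for d in (2000, 3000, 6000):
    print(d, "mm ->", depth_zone(d, 3000))
```

The tiny angle (well under a degree per camera at 3 m) is one reason convergence needs fine mechanical control on a rig.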

To a very large degree the screen is transparent, but when shooting, where you put convergence decides where that screen sits in the scene.

Peter Anderson has been his 3D guru for the last 8 years. Rule: NEVER put the point of convergence beyond the backmost object; never converge on the far side of the wall. The entire 3D space would be thrust out into the audience and create DISPARITY. This is a bad, bad thing.

Q: Do 3D cameras allow for a dynamic or a static screen plane?

A: Some do, some don’t. He highly recommends a rig that gives you dynamic convergence as well as dynamic interocular capability; there will be times when you need it.

Divergence: on the far side of convergence, the images separate into divergence.

If your divergence exceeds about 5% of screen size, you’re creating a problem for the audience: their ability to understand and visually comprehend the image will be challenged.
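Because the limit is expressed as a fraction of the screen, you can check it on the frame itself by measuring how far apart the left- and right-eye copies of the farthest background detail are. A rough sketch, assuming “screen size” means image width and that offsets are measured in pixels on the full frame; the names are hypothetical.

```python
DIVERGENCE_LIMIT = 0.05  # "about 5% of screen size", read here as screen width


def max_background_parallax(image_width_px, limit=DIVERGENCE_LIMIT):
    """Largest positive (behind-screen) parallax the rule of thumb allows."""
    return image_width_px * limit


def divergence_ok(parallax_px, image_width_px, limit=DIVERGENCE_LIMIT):
    """True if an offset stays within the limit.

    parallax_px is right-eye x minus left-eye x for the same point;
    positive values sit behind the screen, negative values in front.
    """
    return parallax_px <= max_background_parallax(image_width_px, limit)


# On a 1920-pixel-wide frame the budget is about 96 px of background offset.
print(max_background_parallax(1920))
print(divergence_ok(90, 1920), divergence_ok(120, 1920))
```

Since it is a percentage, the same pixel budget holds however big the frame is eventually projected.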

You don’t have to share the load between both cameras: fix one, and let the other one move to handle the interocular and convergence.

It helps to be able to control that on the fly; things can be happening dynamically, and you may want to change settings while the shot is happening live.

-Instead of shooting toward the short side of a room, find something that plays better spatially. Let the subject, and the people in the scene, work in and out of convergence and in and out of that space. Locking focus and convergence is simple, but it limits how you’d otherwise play the depth of the scene.

If the action is coming toward you, rather than moving convergence with them, letting the action pass from behind the screen through to in front of the screen is often more interesting. Don’t trombone convergence to hold them there.

Test it, test it, test it! Believe nothing anyone says until you’ve tested it for yourself.

Parallax (see photo): zero/neutral parallax puts the image on the screen. Offset behind the screen is divergence.
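Parallax has a sign convention worth pinning down: find the same feature in each eye’s image and subtract its horizontal positions. A sketch; the half-pixel tolerance for “zero” is an arbitrary assumption.

```python
def classify_parallax(x_left_px, x_right_px, tolerance_px=0.5):
    """Classify screen parallax for one feature seen in both eyes.

    Parallax = right-eye x minus left-eye x. Positive (uncrossed) parallax
    reads behind the screen; negative (crossed) parallax reads in front.
    """
    p = x_right_px - x_left_px
    if abs(p) <= tolerance_px:
        return "zero: on the screen plane"
    if p > 0:
        return "positive: behind the screen"
    return "negative: in front of the screen"


print(classify_parallax(400, 400))
print(classify_parallax(400, 430))
print(classify_parallax(400, 370))
```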

Pre-production: approach it the same way you do in 2D, but it now takes an understanding of 3D, so do a 3D script breakdown.

While you have to do things right so as not to hurt the audience’s eyes, you can stray from the rules if you do it briefly. Be creative! There are sound, solid rules, but from time to time you can get away with a lot.

Budgeting: roughly 25% more, depending on the type of film.

The FIXING is an unknown factor; it depends on how well it was shot in the first place. If you shoot it well, you save money; if you shoot it badly, it’s VERY expensive to fix!

CG is more expensive in 3D than in 2D. How much, and of what type? Putting stuff in, taking things out? There’s no single answer on how much things will cost.

Consider the worst-case scenario (and marketing spin is just spin): if you blew it on one eye, you can start from the one good 2D eye and make the other, but everything has to be cut out, moved over, and built into layers. 2D-to-3D is the most expensive and time-consuming way to do it.

-Most commonly screwed-up things: there’s a minefield of issues to be aware of. Consider how your eyes work: both eyes blink together, converge together, focus together, etc. If one lens is zoomed a bit more than the other, or the focus is a little off, you’re in trouble! If one is zoomed, you can’t just crop it in. If focus is off a bit, that’s trouble. If the convergence point is off, you can shift one image to slide it over and fix the horizontal overlap. It’s also trouble if one camera’s iris, shutter speed, sync, etc. is off. If the interocular is not set properly, it is EXTREMELY difficult to reset it later; if you don’t set it right at first, it’s WAY difficult to fix, or you have to rebuild from the other eye.
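That horizontal slide (horizontal image translation) is why convergence is the fixable error: moving one eye sideways shifts every point’s parallax by the same amount, so relative depth is untouched. A sketch with hypothetical parallax measurements and names; it does nothing for the zoom, focus, iris, sync, or interocular problems in that list, and in practice the exposed edge of the shifted image has to be cropped or masked.

```python
def reconverge(parallax_px, anchor):
    """Slide the right-eye image so that `anchor` lands at zero parallax.

    parallax_px maps feature name -> (right x - left x) in pixels.
    Shifting the right eye left by `shift` pixels subtracts `shift` from
    every feature's parallax, so depth relationships are preserved.
    """
    shift = parallax_px[anchor]
    return {name: p - shift for name, p in parallax_px.items()}


# Hypothetical measurements from a shot converged slightly wrong.
shot = {"actor": 12, "back wall": 40, "foreground lamp": -5}
print(reconverge(shot, "actor"))
```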

-3D adds additional layers of “must be perfect for that take” over regular 2D filmmaking

-Spend more time in prep than you normally would, an extra week at a minimum. Shoot tests and take them all the way through the workflow to projection to see how they hold up.

-As you start a show, it’s going to take longer to get things done; after about 1-1.5 weeks the crew will catch up to a normal pace. Schedule a lot more time on the front end for the first 3-5 days, and speed up to normal further into the schedule.

As you begin a show: if you had to go get a 3D rig right now, you can’t go to the usual suspects for camera rigs. It will take more time to acquire one, because every rig built right now is in use! That gear is hard to come by. Rigs are named for the way they capture images: if the cameras are side by side, it’s called side-by-side; the other type is the beam splitter rig.

Beam splitter rig: one camera shoots through the mirrored glass, while the other sits 90 degrees off, shooting off the mirrored side.

-Side-by-side parallel (used on some IMAX films) doesn’t work as well as converged.

-Beam splitter rigs can have some limitations on how wide you can go.

-The ability to view the footage technically is another major limitation in the field. If you’re looking at it in 3D while shooting, you can’t see where the screen plane is. One way to see what’s going on is to look at just the things that differ between the eyes: a grey screen with the overlap shown in color, one eye black and the other white. With a plain 50/50 overlay, it can be tough to figure out where convergence is if the middle of the frame is nebulous.
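One way to build that kind of display is a difference overlay: subtract one eye from the other around mid-grey, so anything sitting on the convergence plane cancels out. A stdlib-only sketch on tiny greyscale frames; real monitoring tools do this in hardware or on a GPU, and this particular mapping (left eye pushing lighter, right eye darker) is an assumption, not a description of any product.

```python
def difference_view(left, right):
    """Grey difference image from two same-sized greyscale frames
    (lists of rows of 0-255 ints). Mid-grey (128) marks pixels where both
    eyes agree, i.e. detail on the convergence/screen plane; darker or
    lighter pixels show where the eyes disagree."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right frames must be the same size")
    return [
        [max(0, min(255, 128 + (l - r) // 2)) for l, r in zip(row_l, row_r)]
        for row_l, row_r in zip(left, right)
    ]


# A dark-to-bright edge that sits one pixel further left in the right eye.
left = [[0, 0, 255, 255]]
right = [[0, 255, 255, 255]]
print(difference_view(left, right))
```

Only the offset edge shows up; everything at zero parallax collapses to flat grey, which makes the screen plane easy to spot.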

-Viewing 3D in the field is tough, and there are no “safe” answers. Somebody wanted to shoot everything converged at one setting; his response: why not focus everything at 12 feet, too?

If you understand interocular and convergence, being able to keep convergence within the room will let you work.

-From a director’s point of view: something gets triggered in your brain, like that fight-or-flight sense, a view of the world that is much stronger in 3D than in a 2D movie. In a 2D movie your brain knows it’s a picture, but in 3D your brain perceives things more viscerally; it kicks off different chemicals in the brain more strongly.

-In real life you don’t cut away to a closeup; things travel, they don’t cut. Set up shots that move rather than cut, and you’ll keep the audience in the 3D space: drive them to the action, or bring the action to them. It should be much less cutty, which preserves the suspension of disbelief. Why did you use to cut fast to a closeup? It gave an emotional connection. In 3D, try to make it flow rather than cut; 3D is its own emphasis for connecting with the audience.

Pic of the beam splitter rig: the pink/yellow tape are the left edges of the tape; you lose a stop or so of light on the…

The F23 head looks like a film camera, with an 11-inch footprint. Pace has started with it and is rebuilding it into something smaller, with the tech placed up and back instead of off to the side (less width).

-U23D was 950 t-block

-He could hand-hold two 950s at Super Bowl 38.

Eventually, a more efficient way to do it? Oh yeah! Sean is designing one (he mentions the Element Technica rig); Keslow is renting them.

Sean is working with them in the coming weeks/months to rig up some of his ideas for something faster/lighter/stronger for concert/theatrical work.

-Rob Legato: starting with a zero-interocular 3D rig to double the amount of information/lines. You could use it for HDR to get more dynamic range; layering in downstream keys, etc. is another possibility. But unless there’s a project, there’s nothing to finance it.

-It’s so expensive to do the 3D rig in the first place that people can’t get past that.

-The other land mine: people want to use their same zoom lenses, but those lenses don’t track exactly the same from unit to unit. You’ve got to take apart the back of the lens and calibrate them to get’em to center exactly the same; if one starts to rise as it zooms, the other must be made to match.

-Animated projects get to cheat: they don’t have physical optics to work with, they get to build every layer the way they want, and they can alter foreground convergence without touching the background.

-a lot of cuts is brutal in 3D

-When prepping 3D that needs a lot of cuts, shoot so as to lessen the strength of the 3D during that sequence: pull down the interocular, and there’s less opportunity to create a problem.
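Why pulling down the interocular works: for a rig converged by shifting the images, disparity is directly proportional to the interaxial. A first-order sketch using the standard pinhole relation d = f · b · (1/C - 1/Z), where f is focal length, b interaxial, C convergence distance, and Z object distance; it assumes a parallel rig converged by horizontal image shift rather than toe-in, and ignores lens distortion.

```python
def sensor_disparity_mm(focal_mm, interaxial_mm, convergence_mm, depth_mm):
    """Disparity on the sensor for a parallel rig converged at convergence_mm
    by horizontal image shift. Positive = behind the screen plane."""
    return focal_mm * interaxial_mm * (1.0 / convergence_mm - 1.0 / depth_mm)


# 35 mm lens, converged at 3 m, background at 6 m.
full = sensor_disparity_mm(35, 65, 3000, 6000)
reduced = sensor_disparity_mm(35, 32.5, 3000, 6000)
print(f"{full:.3f} mm vs {reduced:.3f} mm")  # halving interaxial halves disparity
```

Every depth difference in the shot shrinks by the same factor, so fast cuts land on gentler 3D without moving the screen plane.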

-Cut 2D images into a fast sequence and the audience won’t be able to tell.

-Get in the habit of looking over the top of the lenses to check the scene as you watch.

-Primes are awesome for a lot of reasons (speed, timing, etc.). Shoot on primes when possible; matched sets are preferred.

-Moving the camera: your eyes are primes, not zooms. 3D prefers wide since your eyes are wide; 24mm and wider is a great starting place for moving the camera in and through a scene.

-In 3D it’s much more powerful to cruise through the crowd and find the woman in the red dress than to go wide shot, cut to woman in red dress.

-Play a little bit of someone in front of you while still looking at the woman in the red dress.

-When you blink and turn and look, that is as close as you get to a cut.

-Jump cuts and shaky cam are for emphasis. 3D is its own emphasis.

-A scene will still have cuts, but try to move around and in instead.

-GIVE YOURSELF AT LEAST 2.5 SECONDS PER SHOT!
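At 24 fps, 2.5 seconds is 60 frames, so the rule is easy to check against a cut list. A sketch assuming shot durations are already exported as frame counts; the cut list and function name are made up for illustration.

```python
import math

MIN_SHOT_SECONDS = 2.5


def short_shots(durations_frames, fps=24.0, min_seconds=MIN_SHOT_SECONDS):
    """Return indices of shots shorter than min_seconds at the given frame rate."""
    min_frames = math.ceil(min_seconds * fps)
    return [i for i, n in enumerate(durations_frames) if n < min_frames]


# Hypothetical cut list, durations in frames at 24 fps (2.5 s = 60 frames).
cut = [72, 48, 60, 30, 140]
print(short_shots(cut))
```

Rounding the threshold up with `math.ceil` means non-integer rates such as 23.976 fps still require a full 60 frames.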

-If the cameras are diverged (angled out), that’s called wall-eye, and you can’t fuse the images. If you did that, flip the left and right eyes to get’em to converge rather than diverge.

-When you look at the farthest object, your eyes are essentially parallel.

Test: hold up your finger with a person in the distance behind it. Look at your finger and you see two people; look at him and you see two fingers. THAT is convergence! Play with that concept.

-YES, you want some divergence in the background image, but not more than about 5%.

-Practical limit to how close? No practical limit: even 1/10,000 of an inch can still get great 3D. It’s all about scale.

-Go back to your childhood school and things look smaller. It isn’t because you’re bigger; it’s because your interocular has grown! The stereo pairs in your memory were captured with a smaller interocular; as you grow up, your interocular gets bigger, so your stereo images from childhood are at a different scale!

Shooting with a wider interocular makes you seem bigger / the subject seem smaller.

THAT IS A GREAT POINT: you can imply the scale of the viewer, making things tiny or gigantic to create that sense of scale.

-U23D: it started with Peter Anderson saying he wanted to shoot it as a feature film, with the interocular scaled to the audience. Bono is about 5 foot 4, so they shot at about 1 3/4″ instead of 2 3/4″, which giganticized the band! This was NOT due to zoom; they appeared bigger in the 3D space. That’s where they started from, and it was a better use of perception to get the sense that Bono is larger than life. People shy away from that since it isn’t “normal”, but sometimes you WANT to make things bigger or smaller than real life.
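A common first-order rule of thumb for this effect: the scene appears scaled by roughly the ratio of the viewer’s interocular to the camera interaxial. The sketch applies it to the numbers from the talk; it is an approximation that ignores focal length, screen size, and seating distance, all of which also affect perceived size, and the 2.75″ default is just the figure quoted above.

```python
def apparent_scale(camera_interaxial, viewer_interocular=2.75):
    """First-order apparent size factor: >1 reads gigantic, <1 reads miniature.
    Both arguments in the same units (inches here)."""
    if camera_interaxial <= 0:
        raise ValueError("interaxial must be positive")
    return viewer_interocular / camera_interaxial


print(f"U23D-style 1.75 in: x{apparent_scale(1.75):.2f}")  # band reads larger than life
print(f"Wide 5.5 in:        x{apparent_scale(5.5):.2f}")   # scene reads miniature
```

By this estimate the U23D choice made the band read roughly one and a half times life size.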

-Operating (see photo): the modern tendency toward Bourne Ultimatum-style shaky cam is an emphasis by itself, and 3D is better on its own. 3D on Spidercam is great, 3D on cranes is great, the undulating, pogo-ing crowd in U23D is amazingly effective, and Steadicam that flows and glides is good in 3D.

-Lighting: ghostbusting. When projected, make sure there isn’t an extra halo on high-contrast backlit objects. Backlight is normally used to separate the subject from the background, but in 3D subjects are ALREADY separated from the background!

3D does not like shallow depth of field. 3D likes deep depth of field so the audience can blink and look into the background and elsewhere; the emphasis comes from the convergence point, not the focus.

-The way to approach a movie is different: shallow, cinematic depth of field is the WRONG decision for 3D! So Red? So F23, not F35? NOT Red! It’s going in the wrong direction: great for cinematography, but not as compatible with 3D due to depth-of-field issues. “Shallow depth of field is a disadvantage in 3D.” Deep depth of field is what you want.

Onset viewing – test test test!

Editorial has its own trouble: you MUST be looking at it in 3D! Why pick one shot over another? If focus is a driving factor in 2D, 3D quality is another factor for picking your shots. Pick one with a great performance and bad 3D? You might want good 3D and a not-quite-as-good performance.

Shutter glasses? Anaglyph? The Dolby digital dichroic filter system is amazing anaglyph.

Active glasses are easier to employ in IMAX or home theaters. I wouldn’t, today, start out by deciding whether the audience sees anaglyph, etc.; let the distribution companies make those choices for you.

-“Qtake HD is rockin'” according to Sean – five different viewing flavors

-Cross-eyed view: one of the ways to take a look at something. Take two pictures offset from each other, find an anchor point, and use it as your target.

-Put the two images side by side, look right in the middle, and cross your eyes.

14% of people CANNOT see 3D no matter what you try to do

-put in a link to QTake and Element Technica

———

Personal notes: if you follow Sean’s advice on proper 3D shooting techniques, that creates a challenge/dichotomy for the biz model.

The biz model I’m hearing about right now emphasizes 3D as a premium theatrical experience in parallel with 2D distribution, followed later by 2D DVD, Blu-ray, TV, etc. But if you shoot optimally for 3D (fewer cuts, deep depth of field), that challenges the emotional effectiveness of the 2D version… hmmm, how to resolve?

Also, if deep depth of field is preferable for 3D, that can be a struggle for Red, since its large sensor gives shallow depth of field. Thinking it over (since I like Red and own one): you could close down the iris to get deeper depth of field, but that’s not very practical because:

-you’re already losing light shooting through a beam splitter rig

-Red is already pretty light hungry, especially under tungsten light (improved notably with Build 20)

-the increase in the lighting budget to make up for irising down far enough to match 2/3″-chip depth of field would be insaaaaaaaane
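To put a number on “insaaaaaaaane”: matching a 2/3″ camera’s depth of field on a larger sensor means stopping down by roughly the crop factor, and the light needed goes up with its square. A back-of-envelope sketch; the sensor widths are approximate assumed values, and the equivalence assumes the same framing and the same viewing size.

```python
import math

# Approximate active-area widths in mm; assumed values, check your camera's spec sheet.
TWO_THIRDS_INCH_WIDTH_MM = 9.6   # 16:9 2/3" chip
SUPER35_WIDTH_MM = 24.4          # roughly the Red One Mysterium sensor width


def equivalent_f_number(f_number_small, small_width_mm, large_width_mm):
    """f-number on the large sensor giving about the same depth of field as
    f_number_small on the small sensor, at the same framing and output size."""
    return f_number_small * (large_width_mm / small_width_mm)


def extra_stops(small_width_mm, large_width_mm):
    """Stops of extra light needed to stop the large sensor down that far
    (same shutter and ISO); light scales with the square of the crop factor."""
    return 2 * math.log2(large_width_mm / small_width_mm)


n = equivalent_f_number(2.8, TWO_THIRDS_INCH_WIDTH_MM, SUPER35_WIDTH_MM)
s = extra_stops(TWO_THIRDS_INCH_WIDTH_MM, SUPER35_WIDTH_MM)
print(f"f/2.8 on 2/3-inch ~ f/{n:.1f} on Super 35: about {s:.1f} stops more light "
      f"(x{2 ** s:.1f}), before the beam splitter's stop or so")
```

Under these assumptions that is already more than six times the light, on top of what the beam splitter eats.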

end of notes….