Issues surrounding workflow, storage and archive are things video productions of every size are dealing with more and more these days. Whether you’re working with a team or just by yourself, if you don’t know how you’re going to utilize and handle your digital content in the short term and long term, you’re going to be in for some major headaches.

We talked to David Cerf of Crossroads about the challenges he’s seen people in media & entertainment struggle with when it comes to storage and archive, how Crossroads is able to address security concerns and what makes the Crossroads StrongBox NAS stand out from other storage systems.

ProVideo Coalition: At IBC this year, you talked about your commitment to the open standard. How has that belief guided your vision and product?

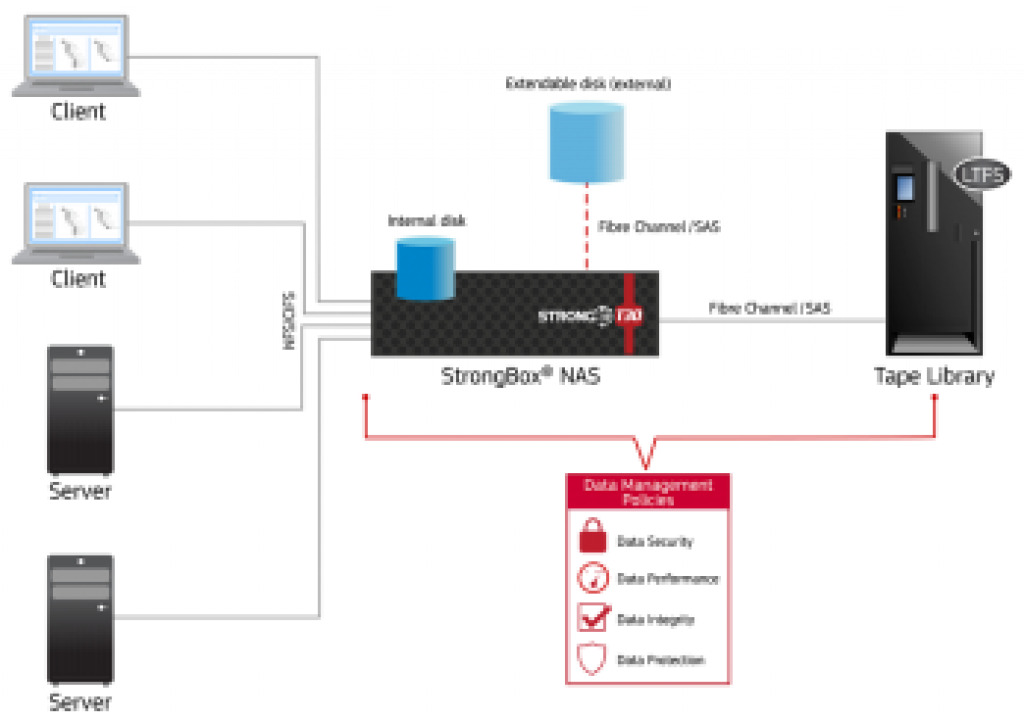

David Cerf: The open standard defines our product. We are a solution purpose-built around LTFS, the Linear Tape File System, which is part of the LTO tape standard. With LTFS, tape can behave more like a flash drive, with simpler file-based data access. As of LTO generation 5, every tape drive has this feature built into it to allow you to write to tape in a non-proprietary format. So Crossroads’ entire design principle is to stay within that guideline. That means that we enable you to utilize tape in everything you do, without requiring any proprietary software to access data. StrongBox is a network-attached storage appliance – it looks and acts just like a standard disk NAS, but allows users to benefit from the low cost and reliability of LTFS/LTO media. Data is written to the LTFS open standard; the StrongBox doesn’t change, modify or alter the file.

Keeping data free from “vendor lock-in” has been a design principle from the very beginning. Applications, like CatDV, Avid or other MAMs, see the StrongBox just like any other NAS, but the way we store content means it’s open, available and can be read without a dependency on the StrongBox server. You could literally take those tapes out, put them into any other LTO drive, and read that data back as you would a USB thumb drive.

That’s a conscious design, unlike other products on the market which were probably developed to use TAR tape, or other proprietary formats and then added LTFS as an afterthought. The problem there is that it puts some proprietary barriers around data access. Since we integrate the LTFS standard seamlessly, we are not haphazardly pulling all these elements together – StrongBox presents one single storage system and stays within those open standards. This also means it can work with existing technology already integrated into the workflow.
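As a rough illustration of the "read it back like a USB thumb drive" point: once an LTFS driver has mounted a tape, its contents are ordinary files and directories, so standard file APIs are enough to browse them. The sketch below assumes the tape is already mounted; the function name `list_archive_files` and the mount point `/mnt/ltfs` are hypothetical and not part of StrongBox or any LTFS tool.

```python
import os
from pathlib import Path


def list_archive_files(mount_point: str) -> list[tuple[str, int]]:
    """Return (relative path, size in bytes) for every file under mount_point."""
    root = Path(mount_point)
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            found.append((str(path.relative_to(root)), path.stat().st_size))
    return sorted(found)


if __name__ == "__main__":
    # Hypothetical mount point: use wherever your LTFS driver mounted the tape.
    for rel_path, size in list_archive_files("/mnt/ltfs"):
        print(f"{size:>14,d}  {rel_path}")
```

Nothing here is tape-specific, which is the point: the same code would work against a disk share or a thumb drive.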

How important is it to give users the ability to create their own workflow?

So we’ve evolved to support that concept, and it goes back to the way we designed and put together StrongBox. Even though we bring tape and disk together, we are now bringing the ability to control your workflow from an intelligent point of view in the storage. StrongBox’s intelligent policies allow users to customize their data accessibility at the share level. So one editor could have his own “archive” folder that isn’t mixed with another editor’s; then the project manager could have access to each networked share. That means users can customize their workflow, but they’re all addressing StrongBox, and StrongBox intuitively manages the performance of the workflow to meet that particular user’s needs.

Moving forward, I think it’s that type of structure we’re going to have to see come from all vendors.

Any examples you can cite as being especially creative or effective?

We have a very talented customer, Major League Baseball Network. They are thought leaders in this space, and they’ve actually created their own broadcast workflow applications because they couldn’t buy what they really wanted. So they developed some of their own unique ways to track and keep all of the broadcast metadata together. Now, they use multiple vendor products, and of course they need it to all work together, but the way that they designed their own applications to function is amazing.

For every baseball pitch, there’s info about what the pitch was, what the speed was, who threw it, when it happened, where it happened and plenty more. There’s all this information that’s coming in real-time, and they’re tagging everything. And that created these interesting requirements.

They’re a user of StrongBox, and having tunable file access to manage the way they want to organize information and workflows from various seasons is essential for them. They’ve also been able to beat their budget estimations with the system, and that’s a key factor for them.

And on the other side of that, any workflows or examples you can think of that really aren’t working?

In terms of what’s not working, it’s anytime someone is putting content on hard drives that sits on their shelf, and then waits for someone to come in and do something to it or with it. With all the solutions that are available to store info, not having two copies or more of your data or just leaving it on a single drive is something that really doesn’t work. Hard drives fail, so that exposes content to a massive and expensive risk.

It’s a crapshoot, because at some point that process or that device is going to break down. You just have to hope it’s not going to happen at a really inopportune time.

One StrongBox user, ProductionFor, had been keeping data on hard drives. When they switched to StrongBox, it was easy (and cheap) to create multiple copies for data protection and better organize archival content.

What are the most common challenges you see people struggling with in media & entertainment?

Tom Coughlin put out some numbers a couple weeks ago, and his comment was that today, an average movie production generates about a petabyte of data. And his statement was in less than ten years that same movie will be an exabyte of data. And that’s an incredible increase. A lot of that of course comes from 8K and camera technology that keeps rolling out, so the capabilities keep increasing which compounds the problem. There’s so much data, and it’s growing exponentially, even though budgets typically aren’t. So the big questions and challenges are around how we handle all of it within a budget.

It’s something that people all across this industry are dealing with though, big and small. Just think about sports. Whether you’re an individual athlete or a Formula 1 racing team, you’ve got a GoPro recording everything. You have all this content, you’re editing what you’re going to use and then need to figure out where and how you’re going to store it. If you’re not ready to deal with all that content, you’re going to get stuck, lose your data and/or not know where that footage is when you need to revisit those shots.
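To put the petabyte-to-exabyte figure quoted above in perspective, a quick back-of-the-envelope calculation shows the yearly growth rate it implies. This is only a sketch: it assumes decimal units (1 EB = 1,000 PB), a constant growth rate, and a full ten-year horizon, and the helper name `annual_growth_factor` is made up for illustration.

```python
PETABYTES_PER_EXABYTE = 1000  # decimal (SI) units: 1 EB = 1,000 PB


def annual_growth_factor(start: float, end: float, years: float) -> float:
    """Constant yearly multiplier that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years)


# One petabyte today, one exabyte in ten years (assumed horizon).
factor = annual_growth_factor(1, PETABYTES_PER_EXABYTE, years=10)
print(f"Yearly growth factor over 10 years: {factor:.2f}x")
```

Over ten years the factor comes out just under 2, so the data generated per production would roughly double every year. Since the quoted estimate was "less than ten years", the real rate would be even steeper.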

Are there needs or desires on the part of users that you continually hear back about?

I think that the demand is for seamless integration and overall simplicity within a budget. That is, better ways for applications to interface back to storage, whether those are through APIs and RESTful interfaces that can give you combinations of on-premise/off-premise functions, and/or long-term retention, which is probably the most important aspect. We certainly have those technologies and methodologies, but getting people to realize and see how they can work for their production or system is a separate issue.

We talk about keeping stuff twenty and a hundred years, so when we look at the idea of storage, the concept is, “I need that same performance capability with my application to work back with something that’s going to provide long-term indefinite retention of that content”. And it all has to work easily.

How are you able to address security concerns with your customers?

Chain of custody is a huge issue. Especially in an industry where the content quite literally is the business, controlling data is key to success, and we’ve seen some really massive failures. Last year a major cloud provider went under and basically gave their customers two weeks to get their data back – not a comfortable timeline with terabytes to move and nowhere to put them.

Security in the cloud is a big one, but I think customers are getting really smart. They’ve started to understand the security concept there. No one wants to mess around with amateurish solutions when you’re dealing with a raw copy. So ensuring that protection is a priority, and a lot of that comes in the network and making sure there’s network security. As far as the actual protection of the content, I think much of this is about the number of copies you have.

This goes back to my earlier comment about how if you only have a single copy of your data on a USB drive or hard drive, you’re asking for trouble. I think most smart production companies are making three, four…seven copies. So they’ve got it from a redundancy perspective, and they’ve got it across multiple sites. But what they really want is intelligence in the way the applications can interface with the storage, to where you can automate those multi-copies and ensure that data is secure. We’ve proven how physical security works so I don’t think that’s much of an issue, it’s really more about the desire to have data preservation copies that are automated and intelligently accessed for recovery.
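The idea of automated, verified multi-copies can be sketched in a few lines. The example below is a minimal illustration, not how StrongBox implements it: it copies one file into several destination directories and confirms each copy against a SHA-256 checksum of the original. The function names `sha256_of` and `replicate`, and any destination paths you pass in, are assumptions made for the example.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large media files are never loaded whole."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def replicate(source: Path, destinations: list[Path]) -> list[Path]:
    """Copy source into each destination directory and verify every copy."""
    expected = sha256_of(source)
    verified = []
    for directory in destinations:
        directory.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, directory / source.name))
        if sha256_of(copy) != expected:
            raise IOError(f"Checksum mismatch for {copy}")
        verified.append(copy)
    return verified
```

In practice each destination would sit on a different device or site (a NAS share, a tape-backed share, an off-site mount), since several copies on one drive protect against very little.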

You mentioned the concern about security in the cloud and how that concept was very much an issue for people. Do you think that concern is still around, or are people much more comfortable with it?

I think what we’re learning is that it’s still very early. The technology and services are still new, but they consistently improve. It really isn’t about getting people to be 100% comfortable with the cloud, because the word we’re seeing a lot is “hybrid”. We’re seeing a lot of different combinations of technology brought together to deliver better solutions that will continue to improve over time.

What we’re seeing now is that you don’t just have to depend on that camera shooting onto a memory chip and physically getting that chip where it needs to go.

What are some of the questions that content creators should be considering around storage and archive as their project comes together?

Being able to answer the question of how many copies you want to retain and protect is something you should always figure out. How long do you want to keep this content is important as well. You should also figure out the access you want. Is it just one person, or are multiple people sharing?

Really though, these are things that should be taken into account at a higher level. As a production company of any size or even just as a single creator, you have to figure out how you want your data to be accessible, available and protected. With a little thought, those things are fairly simple to implement, whether you’re a small team or have a hundred people working within the same production group. And it goes from pre-production to shooting and all the way through post. There are great solutions in the market today that can integrate data as it’s shot and sent through a pipeline. It’s really about figuring out your approach as a whole as opposed to working through all of that on a project basis.

It’s a top priority, because if you don’t do it right your data might not be where you need it, when you need it, and in the form you need it. That could mean a lost project, a loss of work and a loss of revenue.

Will the approach of individuals and organizations when it comes to workflow, archive and storage change as they begin to realize what these tools are capable of doing?

I think people in this space are getting a lot more savvy about how they want to approach storage because we’ve seen integration become much more powerful. Storage and archives can work seamlessly with your MAM, all the way down to your off-site second copy for DR. All of this can now be seamless, and over time it will become a single solution to the user. They’ll know when they need a file they just click it and it’s there and they work on it, and when they’re done it goes back and it’s protected the whole time.

So I would expect to see more intelligence and more automation continue to come over time and it’s important because the value of content goes well beyond the initial use of it. Replaying old movies and syndication are concepts everyone is familiar with, but there are a lot of different ways to utilize, re-utilize and monetize the content people already have, but that can only happen if you can find that content when you go looking for it.